Automated Morning Briefing Email with n8n: Turn RAG + Embeddings into Your Daily Advantage

Every morning, you and your team wake up to a familiar challenge: too much information, not enough clarity. Slack threads, dashboards, tickets, emails, docs – the signal is there, but it is buried in noise. Manually pulling it all together into a focused briefing takes time and energy that you could spend on real work and strategic decisions.

This is where automation can change the game. In this guide, you will walk through a journey from scattered data to a calm, curated Morning Briefing Email, powered by n8n, vector embeddings, Supabase, Cohere, and an Anthropic chat model. You will not just build a workflow. You will create a system that turns raw information into daily momentum.

The workflow uses text splitting, embeddings, a Supabase vector store, a RAG (retrieval-augmented generation) agent, and simple alerting and logging. The result is a reliable, context-aware morning briefing that lands in your inbox automatically, so you can start the day aligned, informed, and ready to act.

From information overload to focused mornings

Before diving into nodes and configuration, it is worth pausing on what you are really building: a repeatable way to free your brain from manual status gathering. Instead of chasing updates, you receive a short, actionable summary that highlights what truly matters.

By investing a bit of time in this n8n workflow, you create a reusable asset that:

- Saves you from daily copy-paste and manual summarization

- Aligns your team around the same priorities every morning

- Scales as your data sources and responsibilities grow

- Becomes a foundation you can extend to other automations

Think of this Morning Briefing Email as your first step toward a more automated workday. Once you see how much time one workflow can save, it becomes easier to imagine a whole ecosystem of automations doing the heavy lifting for you.

Why this n8n architecture sets you up for success

There are many ways to send a daily email. This one is different because it is built for accuracy, context, and scale. The architecture combines vector embeddings, a Supabase vector index, and a RAG Agent so your summaries are not just generic AI text, but grounded in your real data.

Here is what this architecture gives you:

- Context-aware summaries using Cohere embeddings and a Supabase vector store, so the model pulls in the most relevant pieces of information.

- Up-to-date knowledge retrieval via a RAG Agent that blends short-term memory with retrieved documents, rather than relying on a static prompt.

- Scalability and performance through text chunking and vector indexing, which keep response times predictable as your data grows.

- Operational visibility with Google Sheets logging and Slack alerts, so you can trust this workflow in production and quickly spot issues.

You are not just automating an email. You are adopting a modern AI architecture that you can reuse for many other workflows: internal search, knowledge assistants, support summaries, and more.

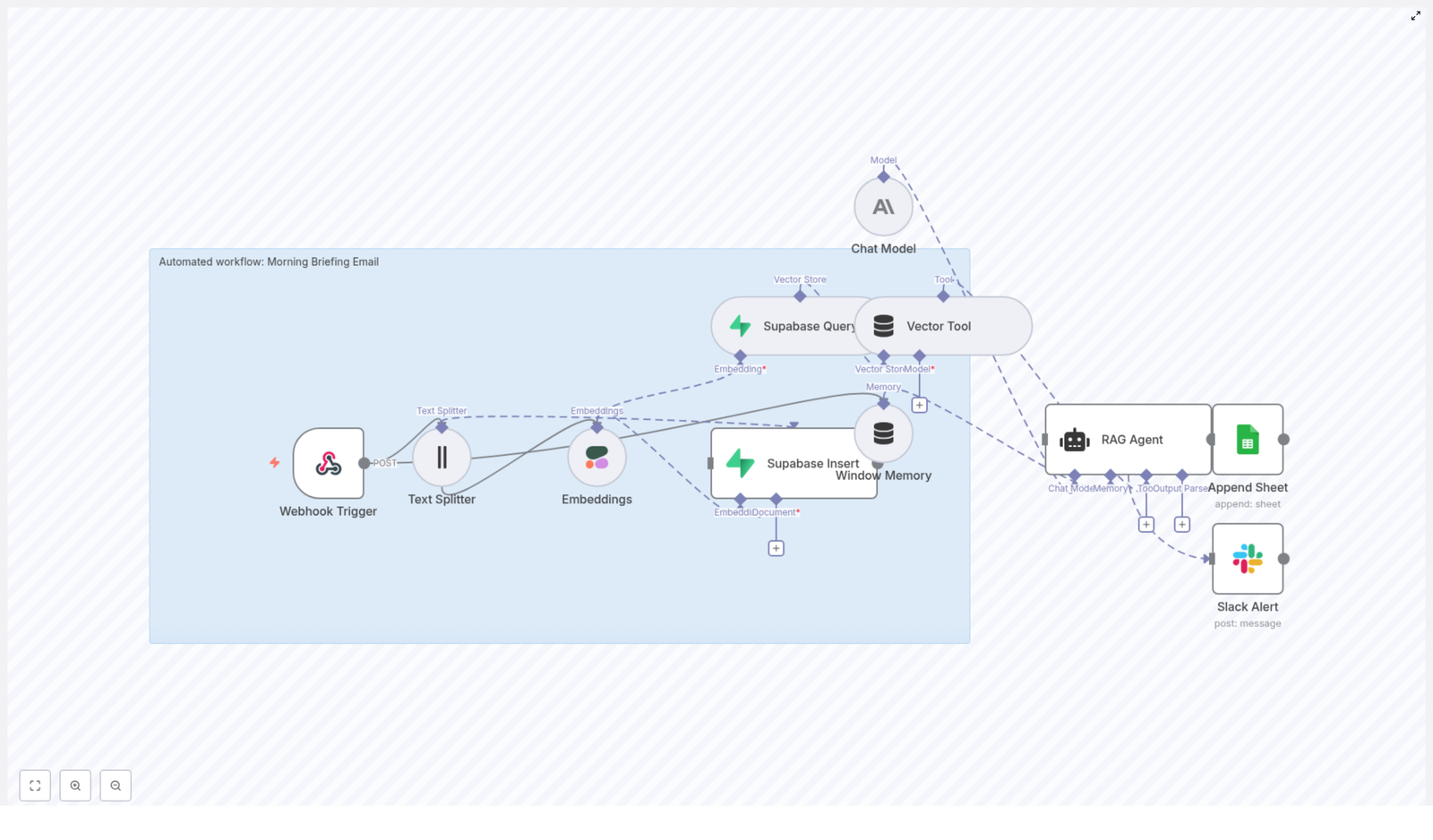

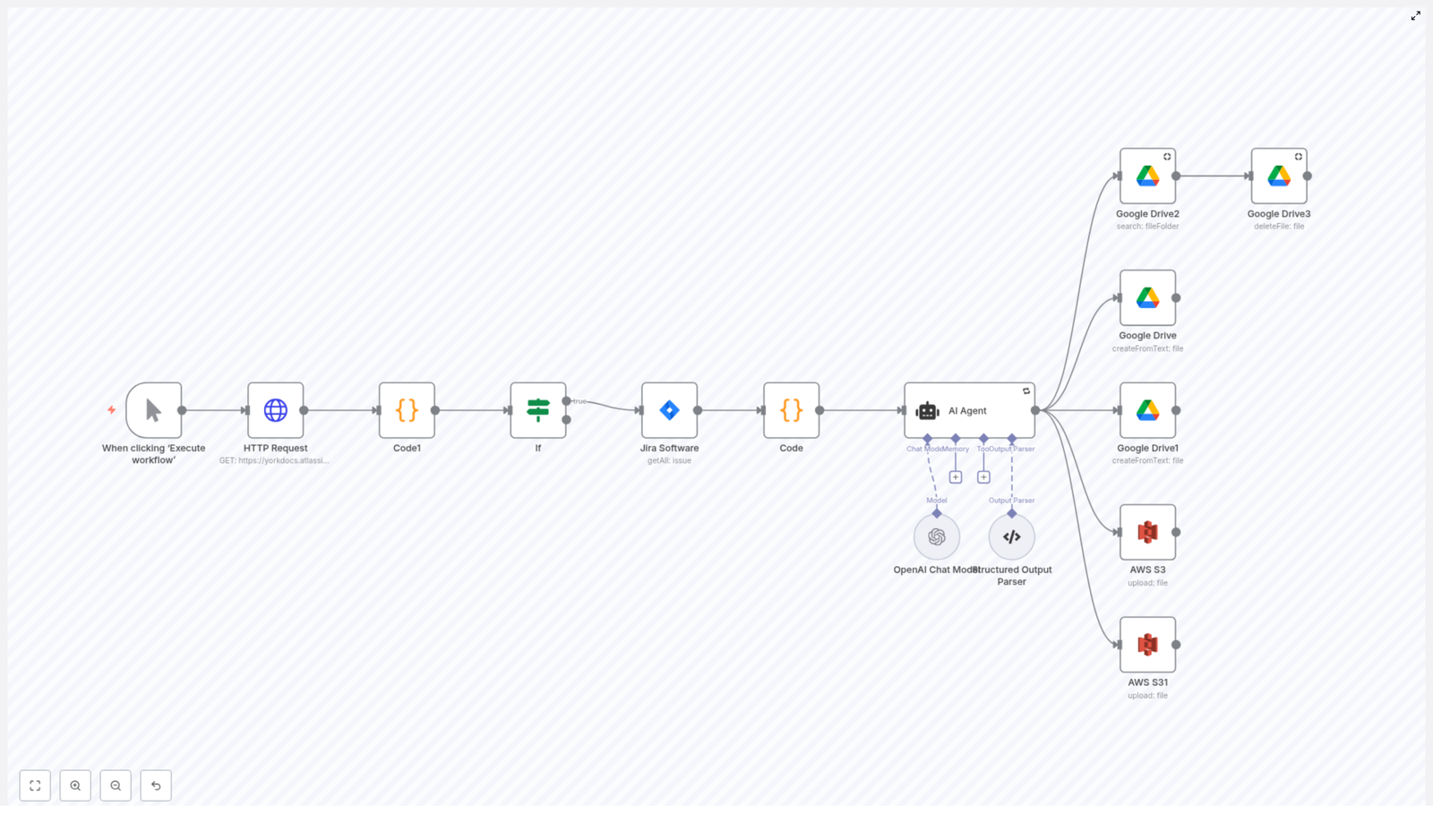

The workflow at a glance

Before we go step by step, here is a quick overview of the building blocks you will be wiring together in n8n:

- Webhook Trigger – receives the incoming content or dataset you want summarized.

- Text Splitter – breaks long content into manageable chunks (chunkSize: 400, chunkOverlap: 40).

- Embeddings (Cohere) – converts each chunk into vectors using

embed-english-v3.0. - Supabase Insert – stores those vectors in a Supabase index named

morning_briefing_email. - Supabase Query + Vector Tool – retrieves the most relevant pieces of context for the RAG Agent.

- Window Memory – maintains a short history so the agent can stay consistent across runs if needed.

- Chat Model (Anthropic) – generates the final briefing text based on the retrieved context and instructions.

- RAG Agent – orchestrates retrieval, memory, and the chat model to produce the email body.

- Append Sheet – logs the final output in a Google Sheet tab called

Log. - Slack Alert – posts to

#alertswhen something goes wrong, so you can fix issues quickly.

Each of these pieces is useful on its own. Together, they form a powerful pattern you can replicate for other AI-driven workflows.

Building your Morning Briefing journey in n8n

1. Start with a Webhook Trigger to receive your data

Begin by creating an HTTP POST Webhook node in n8n and name it something like morning-briefing-email. This will be your entry point, where internal APIs, ETL jobs, or even manual tools can send content for summarization.

Once this is in place, you have a stable gateway that any system can use to feed information into your briefing pipeline.

2. Split long content into smart chunks

Next, add a Text Splitter node. Configure it as a character-based splitter with:

chunkSize: 400chunkOverlap: 40

This balance is important. Smaller chunks keep embeddings efficient and retrieval precise, while a bit of overlap preserves context across chunk boundaries. You can always tune these numbers later, but this starting point works well for most use cases.

3. Turn text into embeddings with Cohere

Now it is time to give your workflow a semantic understanding of the text. Add an Embeddings node configured to use Cohere and select the embed-english-v3.0 model.

Make sure your Cohere API key is stored securely in n8n credentials, not hard-coded in the workflow. Each chunk from the Text Splitter will be passed to this node, which outputs high-dimensional vectors that capture meaning rather than just keywords.

These embeddings are the foundation of your retrieval step and are what allow the RAG Agent to pull in the most relevant context later.

4. Store vectors in a Supabase index

With embeddings in hand, add a Supabase Insert node to push the vectors into your Supabase vector index. Use an index named morning_briefing_email so you can easily reuse it for this workflow and related automations.

Alongside the vector itself, store useful metadata such as:

- Title

- Source (for example, which system or document it came from)

- Timestamp or date

This metadata helps later when you want to audit how a briefing was generated or trace a specific point back to its origin.

5. Retrieve relevant context with Supabase Query and the Vector Tool

When it is time to actually generate a morning briefing, you will query the same Supabase index for the most relevant chunks. Add a Supabase Query node configured for similarity search against morning_briefing_email.

Wrap this query with a Vector Tool node. The Vector Tool presents the retrieved documents in a format that the RAG Agent can easily consume. This is the bridge between your stored knowledge and the AI model that will write your briefing.

6. Add Window Memory and connect the Anthropic chat model

To give your workflow a sense of continuity, add a Window Memory node. This short-term conversational memory lets the RAG Agent maintain a small history, which can be helpful if you extend this workflow later or chain multiple interactions together.

Then, configure a Chat Model node using an Anthropic-based model. Anthropic models are well suited for instruction-following, which is exactly what you need for clear, concise morning briefings.

At this point, you have all the ingredients: context from Supabase, a memory buffer, and a capable language model ready to write.

7. Orchestrate everything with a RAG Agent

Now comes the heart of the workflow: the RAG Agent. This node coordinates three inputs:

- Retrieved documents from Supabase via the Vector Tool

- Window Memory history

- The Anthropic chat model

Configure the RAG Agent with a clear system prompt that defines the style and structure of your briefing. For example:

System: You are an assistant for Morning Briefing Email. Produce a short, actionable morning briefing (3-5 bullet points), include urgent items, outstanding tasks, and a short quick-glance summary.This is where your workflow starts to feel truly transformative. Instead of a raw data dump, you get a focused, human-readable summary you can act on immediately.

8. Log every briefing and protect reliability with alerts

To keep a record of what is being sent, add an Append Sheet node and connect it to a Google Sheets document. Use a sheet named Log to store each generated briefing, along with any metadata you find useful. This gives you an audit trail and makes it easy to analyze trends over time.

Finally, add a Slack Alert node that posts to a channel such as #alerts whenever the workflow encounters an error. This simple step is what turns an experiment into a system you can trust. If something breaks, you will know quickly and can respond before your team misses their morning update.

Configuration tips to get the most from your automation

Once the basic pipeline is working, a few targeted tweaks can significantly improve quality and robustness.

- Chunk sizing: If your source documents are very long or very short, experiment with different

chunkSizeandchunkOverlapvalues. Larger chunks reduce the number of API calls but can blur the boundaries between topics. Smaller chunks increase precision at the cost of more calls. - Rich metadata: Capture fields like source URL, timestamp, and author with each vector. This makes it easier to understand why certain items appeared in the briefing and to trace them back to the original data.

- Security best practices: Store all API keys (Cohere, Supabase, Anthropic, Google Sheets) in n8n credentials. Protect your webhook with access controls and request validation, such as an API key or HMAC signature.

- Rate limit awareness: Monitor your Cohere and Anthropic usage. For high-volume workloads, batch embedding requests where possible to stay within rate limits and keep costs predictable.

- Relevance tuning: Adjust how many nearest neighbors you retrieve from Supabase. Too few and you might miss important context, too many and you introduce noise. Iterating on this is a powerful way to improve briefing quality.

Testing your n8n Morning Briefing workflow

Before you rely on this workflow every morning, take time to test it end to end. Testing is not just about debugging. It is also about learning how the system behaves so you can refine it confidently.

- Send a test POST payload to the webhook. For example:

{ "title": "Daily Ops", "body": "...long content...", "date": "2025-01-01" } - Check your Supabase index and confirm that vectors have been inserted correctly, along with the metadata you expect.

- Trigger the RAG Agent and review the generated briefing. If it feels off, adjust the system prompt, tweak retrieval parameters, or fine-tune chunk sizes.

- Verify that the Google Sheets Append node logs the output in the

Logsheet and simulate an error to ensure the Slack Alert fires in#alerts.

Each test run is an opportunity to learn and improve. Treat this phase as a chance to shape the exact tone and depth you want in your daily emails.

Scaling your Morning Briefing as your needs grow

Once you see how effective this workflow is, you may want to expand it to more teams, more data sources, or more frequent runs. The architecture you have chosen is ready for that.

- Separate ingestion from summarization: If live ingestion becomes expensive or complex, move embeddings creation and vector insertion into a scheduled job. Your morning briefing can then query an already up-to-date index.

- Use caching for hot data: For information that changes slowly but is requested often, introduce caching to speed up retrieval and reduce load.

- Consider specialized vector databases: If you outgrow Supabase in terms of performance or scale, you can migrate to a dedicated vector database such as Pinecone or Milvus, as long as it fits your existing tooling and architecture.

The key is that you do not need to rebuild from scratch. You can evolve this workflow step by step as your organization and ambitions grow.

Troubleshooting: turning issues into improvements

Even well designed workflows hit bumps. When that happens, use these checks to quickly diagnose the problem and turn it into a learning moment.

- No vectors in Supabase? Confirm that the Embeddings node is using valid credentials and that the Text Splitter is producing non-empty chunks.

- Briefings feel low quality? Refine your system prompt, increase the number of retrieved neighbors, or adjust chunk sizes for better context.

- Rate limit errors from Cohere or Anthropic? Implement retry and backoff strategies in n8n and consider batching embedding requests.

- n8n workflow failures? Use n8n execution logs together with your Slack Alert node to capture stack traces and pinpoint where things are breaking.

Each fix you apply makes the workflow more resilient and prepares you for building even more ambitious automations in the future.

Prompt ideas to shape your Morning Briefing

Your prompts are where you translate business needs into instructions the model can follow. Here are two examples you can use or adapt:

Prompt (summary): Produce a 3-5 bullet morning briefing with: 1) urgent items, 2) key updates, 3) blockers, and 4) action requests. Use retrieved context and keep it under 150 words.Prompt (email format): Write an email subject and short body for the team’s morning briefing. Start with a one-line summary, then list 3 bullets with actions and deadlines. Keep tone professional and concise.Do not hesitate to experiment. Small prompt changes can dramatically shift the clarity and usefulness of your briefings.

From one workflow to a culture of automation

By building this n8n-powered Morning Briefing Email, you have created more than a daily summary. You have built a reusable pattern that combines a vector store, embeddings, memory, and a RAG Agent into a reliable, production-ready pipeline.

The impact is tangible: accurate, context-aware briefings that save time, reduce cognitive load, and keep teams aligned. The deeper impact is mindset. Once you see what a single well designed workflow can do, it becomes natural to ask, “What else can I automate?”

As you move this into production, make sure you:

- Protect your webhook with strong authentication and request validation

- Monitor usage and costs across Cohere, Supabase, and Anthropic

- Maintain a clear error-notification policy using Slack alerts and n8n logs

From here, you can branch out to automated weekly reports, project health summaries, customer support digests, and more, all built on the same RAG + embeddings foundation.

Call to action: Spin up this Morning Briefing workflow in your n8n instance and make tomorrow morning the first where your day starts with clarity, not chaos. If you want a downloadable n8n workflow export or guidance on configuring credentials for Cohere, Supabase, Anthropic, or Google Sheets, reach out to our team or leave a comment below. Use this template as your starting point, then iterate, refine, and keep automating.

n8n If & Switch: Conditional Routing Guide

n8n If & Switch: A Practical Guide to Smarter, Growth-Focused Automation

From manual decisions to automated clarity

Every growing business eventually hits the same wall: too many tiny decisions, not enough time. You start with simple workflows, then suddenly you are juggling edge cases, exceptions, and “if this, then that” rules scattered across tools and spreadsheets. It gets noisy, and that noise steals focus from the work that really moves you forward.

This is exactly where conditional logic in n8n becomes a turning point. With the If and Switch nodes, you can teach your workflows to make decisions for you. They quietly handle routing, filtering, and branching so you can spend your energy on strategy, creativity, and growth.

In this guide, you will walk through a real n8n workflow template that reads customer records from a datastore and routes them based on country and name. Along the way, you will see how a few well-placed conditions can turn a basic flow into a powerful, reliable automation system.

Adopting an automation mindset

Before diving into the nodes, it helps to shift how you think about automation. Instead of asking “How do I get this one task done?” try asking:

- “How can I teach my workflow to decide like I do?”

- “Where am I repeating the same judgment calls again and again?”

- “Which decisions could a clear rule handle, so my team does not have to?”

The n8n If and Switch nodes are your tools for encoding that judgment. They let you build logic visually, without code, so you can:

- Filter out noise and focus only on what matters

- Handle different customer types or regions with confidence

- Keep workflows readable and maintainable as they grow

Think of this template as a starting point. Once you understand how it works, you can extend it, adapt it to your data, and gradually automate more of the decisions that currently slow you down.

When to use If vs Switch in n8n

Both nodes help you route data, but they shine in different situations:

If node: simple decisions and combined conditions

Use the If node when you want a clear yes/no answer. It is perfect when:

- You have a single condition, such as “Is this customer in the US?”

- You need to combine a few checks with AND / OR logic, for example:

- Country is empty OR

- Name contains “Max”

The If node returns two paths: true and false. That simple split is often enough to clean up your flow and make it easier to follow.

Switch node: many outcomes, one clear router

Use the Switch node when you need to handle three or more distinct outcomes. Instead of chaining multiple If nodes, a Switch node lets you define clear rules and send each item to the right branch, such as routing customers by country.

Together, If and Switch let you express complex business logic in a way that stays understandable and scalable, even as your automation grows.

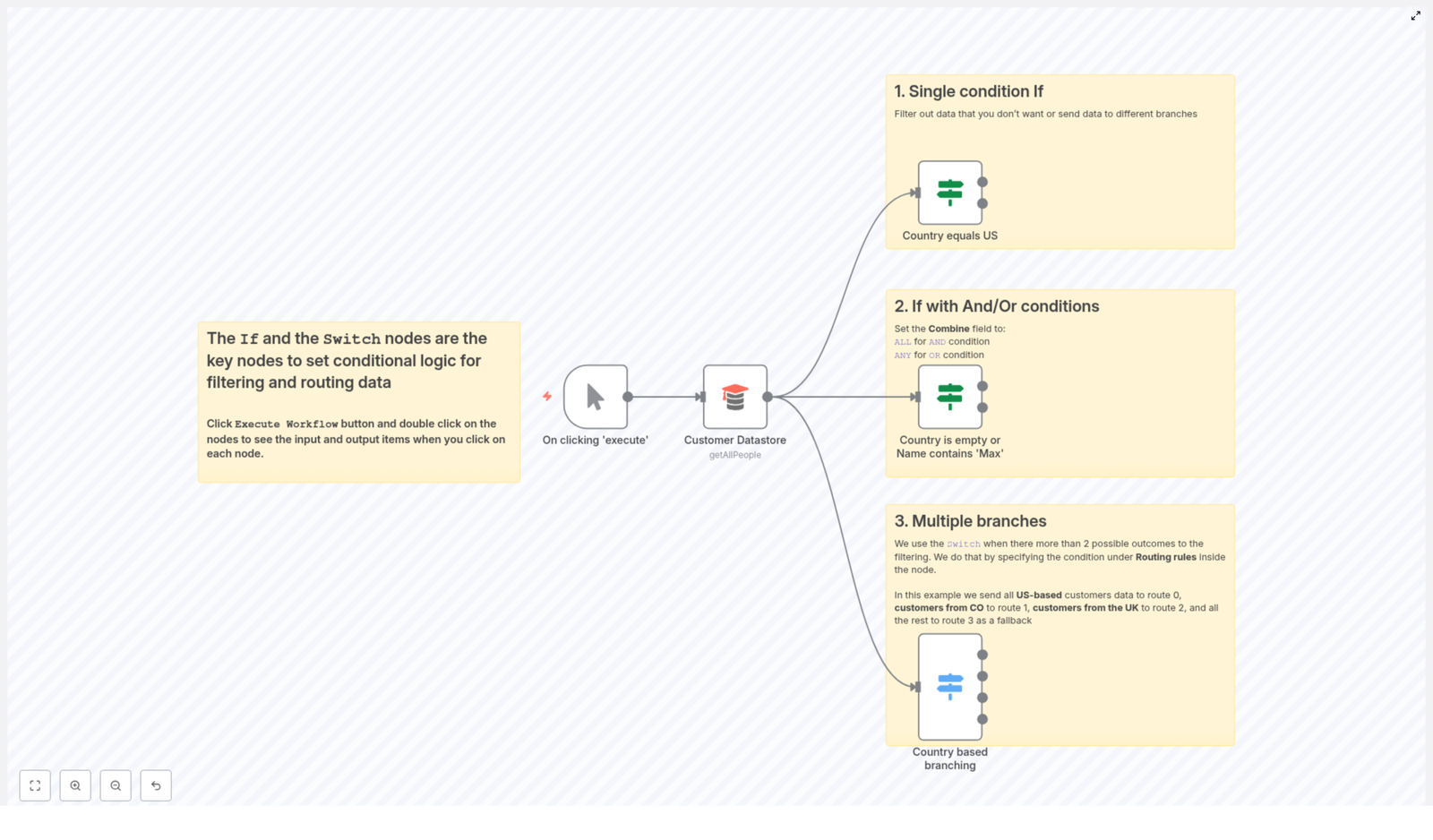

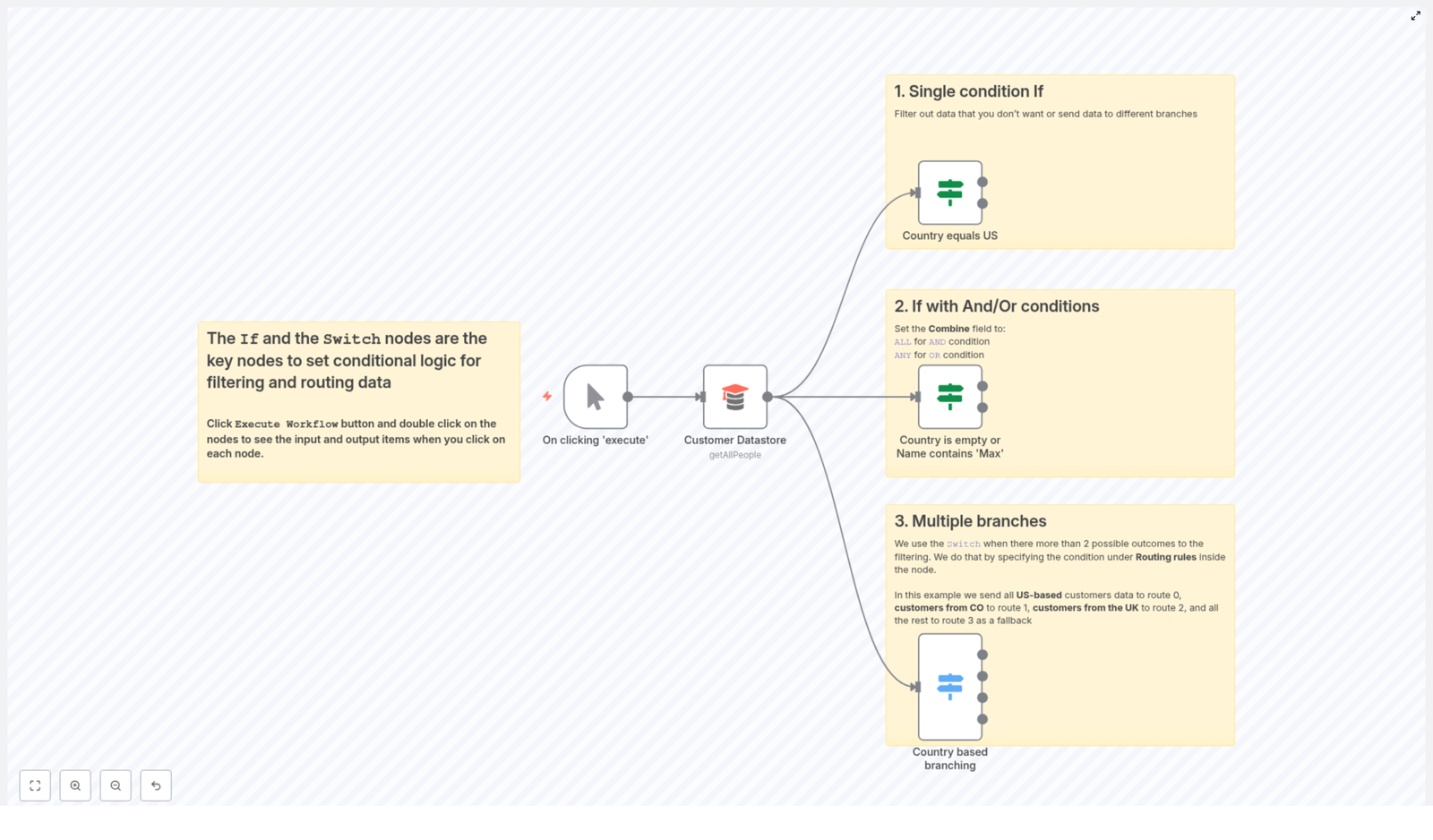

Meet the example workflow template

The n8n template you will use in this guide is built around a simple but powerful scenario: reading customer data and routing records based on country and name. It is small enough to understand quickly, yet realistic enough to reuse in your own projects.

The workflow includes:

- Manual Trigger – start the flow manually for testing and experimentation

- Customer Datastore – fetches customer records using the

getAllPeopleoperation - If nodes – handle single-condition checks and combined AND / OR logic

- Switch node – routes customers into multiple branches by country, with a fallback

Within this single template, you will see three essential patterns that apply to almost any automation:

- A single-condition If to filter by country

- An If with AND / OR to combine multiple checks

- A Switch node to create multiple branches with a safe fallback

Once you grasp these patterns, you can start recognizing similar opportunities in your own workflows and automate them with confidence.

Step 1: Build the foundation of the workflow

Let us start by creating the basic structure. This foundation is where you will plug in your conditions and routing rules.

- Add a Manual Trigger node. Use this to run the workflow on demand while you are experimenting and refining your logic.

- Add your Customer Datastore node. Set the operation to

getAllPeopleso the node retrieves all customer records you want to route. - Connect the Datastore to your logic nodes. In n8n you can connect a single node to multiple downstream nodes. Connect the datastore output to:

- The If node for the single-condition example

- The If node for combined AND / OR logic

- The Switch node for multi-branch routing

- Prepare to use expressions. You will reference fields like

countryandnameusing expressions such as:={{$json["country"]}}={{$json["name"]}}

- Run and inspect. Click Execute Workflow as you go and inspect the input and output of each node. This habit helps you trust your automations and refine them faster.

With this structure in place, you are ready to add the decision-making logic that will turn this workflow into a smart router for your customer data.

Step 2: Single-condition If – filtering by country

Imagine you want to treat US-based customers differently, for example to send them region-specific notifications or apply US-only business rules. A single If node can handle that routing for you, reliably and automatically.

Configuration for a simple country filter

Set up your If node like this:

- Condition type: string

- Value 1:

={{$json["country"]}} - Value 2:

US

With this configuration the If node checks whether $json["country"] equals US.

- If the condition is true, the item goes to the true output.

- All other items flow to the false output.

How this small step creates leverage

This simple split unlocks a lot of possibilities:

- Send US customers into a dedicated notification or marketing sequence

- Apply region-specific logic, taxes, or compliance steps only where needed

- Route customers into different tools or services based on their country

One clear condition, one If node, and you have turned a manual decision into an automated rule that runs every time, without you.

Step 3: If with AND / OR – combining multiple checks

Real-world data is rarely perfect. You might have missing fields, special cases, or customers who need extra attention. That is where combining conditions in an If node becomes powerful.

In this template you will see an example that handles records where either the country is empty or the name contains “Max”. This could represent incomplete data, test accounts, or VIPs that require special handling.

Key settings for combined conditions

Configure your If node with multiple string conditions, for example:

{{$json["country"]}} isEmpty{{$json["name"]}} contains "Max"

Then use the Combine field to decide how these conditions interact:

- Combine operation:

ANYfor OR logic - Combine operation:

ALLfor AND logic

In this template, the configuration uses combineOperation: "any". That means the If node returns true when either condition matches.

- If the country is empty, the item matches.

- If the name contains “Max”, the item matches.

- If both are true, it also matches.

Practical ways to use combined conditions

Once you understand combined conditions, you can start using them to clean data and treat important records differently:

- Data validation Route records with missing country values to a cleaning or enrichment step, such as a manual review queue or an external API.

- Special handling Flag customers whose name matches certain keywords, such as VIPs, test accounts, or internal users, and route them into dedicated flows.

This is how you gradually build smarter automations: by capturing the small rules you already follow in your head and turning them into reusable, visible logic in n8n.

Step 4: Switch node – routing to multiple branches by country

As your automation grows, you will often have more than two possible outcomes. Maybe you want different flows for the US, Colombia, and the UK, with a safety net for all other countries. A Switch node makes this kind of branching clean and easy to understand.

Example Switch configuration

Configure your Switch node as follows:

- Value to check:

={{$json["country"]}} - Data type:

string - Rules & outputs:

- Rule 0:

US(routes to output 0) - Rule 1:

CO(routes to output 1) - Rule 2:

UK(routes to output 2)

- Rule 0:

- Fallback output:

3– catches all records that do not match a rule

Why the fallback output matters

The fallback output is your safety net. It ensures that any unexpected or new country values are still processed. Without it, data could silently disappear from your workflow.

Use the fallback branch to:

- Log unknown or new country values for review

- Send these records into a manual validation queue

- Apply a default, generic flow when no specific rule exists yet

This approach gives you confidence that your automation will behave predictably, even as your data changes or your customer base expands into new regions.

Best practices to keep your automations scalable

As you build more If and Switch logic into your workflows, a few habits will help you stay organized and avoid confusion:

- Use Switch for clarity when you have 3+ outcomes. A single Switch node is almost always easier to read than a chain of nested If nodes.

- Always include a fallback route in Switch nodes. This protects you from silent data loss and makes your workflow more resilient.

- Standardize your data before comparing. If you are unsure about capitalization, use expressions like

={{$json["country"]?.toUpperCase()}}to normalize values before checking them. - Document your logic on the canvas. Use sticky notes or comments in n8n to explain why certain conditions exist. This makes onboarding collaborators faster and helps your future self remember the reasoning.

- Use Code nodes for very complex logic. When you have many conditions or intricate rules, consider a Code node, but keep straightforward boolean checks in If nodes to maintain visual clarity.

These small practices compound over time, turning your n8n instance into a clear, maintainable system instead of a tangle of ad hoc rules.

Troubleshooting your conditions with confidence

Even with a strong setup, conditions may not always behave as expected. When that happens, treat it as an opportunity to deepen your understanding of your data and your automation.

If your conditions are not matching, try this checklist:

- Inspect Input and Output data. While executing the workflow, open each node and look at the actual JSON values under Input and Output. This often reveals small mistakes immediately.

- Check for spaces and case sensitivity. Leading or trailing spaces and inconsistent capitalization can cause mismatches. Use helpers like

trim()ortoUpperCase()in your expressions when needed. - Verify operators. Make sure you are using:

isEmptyfor missing fieldscontainsfor partial matches- Equality operators for exact matches

With a little practice, debugging conditions becomes straightforward, and each fix makes your automation more robust.

Real-world ways to apply If and Switch logic

The patterns in this template show up in many real automation scenarios. Here are a few examples you can adapt directly:

- Region-based notifications Send country-specific promotions, legal updates, or compliance messages by routing customers based on their country code.

- Data cleanup flows Detect incomplete or suspicious records and route them to manual review, enrichment APIs, or dedicated cleanup pipelines.

- Feature toggles and test routing Use name or email patterns to enable or disable parts of a flow for specific users, internal testers, or beta groups.

As you explore this template, keep an eye out for similar patterns in your own processes. Anywhere you are making repeated decisions by hand is a strong candidate for an If or Switch node.

Your next step: experiment, extend, and grow

The If and Switch nodes are not just technical tools. They are building blocks for a more focused, less reactive way of working. Each condition you automate is one less decision you have to make manually, one more piece of mental space you get back.

Use this template as a safe playground:

- Open n8n and import the example workflow.

- Run it with your own sample customer data.

- Adjust the conditions for your real-world rules, such as different countries, name patterns, or validation checks.

- Add new branches, new rules, and see how far you can take it.

Start simple, then iterate. Over time, you will build a library of automations that quietly support your business or personal projects, so you can focus on the work that truly matters.

Call to action: turn this template into your own automation engine

If you are ready to move from theory to practice, now is the moment. Open n8n, load this workflow, and begin shaping it around your data and your goals. Treat it as a starting point for a more automated, more intentional way of working.

If you would like a downloadable starter template or guidance on adapting these rules to your dataset, reach out to our team or leave a comment. We are here to help you refine your logic, improve your flows, and build automations you can rely on.

n8n If vs Switch: Master Conditional Routing

n8n If vs Switch: Master Conditional Routing

What you will learn

In this guide you will learn how to:

- Understand the difference between the If node and the Switch node in n8n

- Use conditional logic in n8n to filter and route data without code

- Configure a complete country-based routing workflow step by step

- Apply AND / OR conditions with the If node

- Create multiple branches with the Switch node using a fallback route

- Test, debug, and improve your conditional workflows using best practices

This tutorial is based on a real n8n workflow template that routes customers by country. You can follow along and then adapt it to your own data.

Core idea: Conditional logic in n8n

Conditional logic is the backbone of workflow automation. It lets you decide what should happen next based on the data that flows through your n8n nodes.

In n8n, two nodes are central to this kind of decision making:

- If node – evaluates one or more conditions and splits items into true or false paths

- Switch node – compares a value against multiple possible options and routes items to different outputs

Both are used for conditional logic in n8n, but they shine in different situations. Understanding when to use each is key to clean, maintainable workflow routing and data filtering.

If vs Switch in n8n: When to use which?

The If node

The If node is ideal when you need simple checks, such as:

- A yes/no decision, for example “Is this customer in the US?”

- A small number of conditions combined with AND or OR logic

- Pre-checks before more complex routing, such as skipping invalid records

It has two outputs:

- True – items that match your conditions

- False – items that do not match

The Switch node

The Switch node is better when you need to route data into more than two branches, for example:

- Different countries should be sent to different services

- Different statuses (pending, approved, rejected) require different actions

- You want a clear visual overview of many possible outcomes

Instead of chaining multiple If nodes, a Switch node lets you define multiple rules in one place and keep the workflow readable.

Quick rule of thumb:

- Use If for simple true/false checks or small sets of conditions

- Use Switch for multiple distinct routes from the same decision point

Related keywords: n8n If node, n8n Switch node, workflow routing, data filtering, conditional logic in n8n.

Workflow we will build: Country-based routing

To see all this in action, we will walk through a practical example: a workflow that fetches customer records and routes them based on their country field.

The template uses the following nodes:

- Manual Trigger – starts the workflow on demand

- Customer Datastore (getAllPeople) – returns all customer records

- If: Country equals US – filters customers whose country is

US - If: Country is empty or Name contains “Max” – demonstrates combining conditions with AND / OR logic

- Switch: Country based branching – routes customers to separate branches for

US,CO,UK, or a fallback route

Why this example works well for learning

This pattern is very common in automation:

- You pull records from a data source

- You check specific fields, such as

countryorname - You route each record to the right process or destination

It shows how to:

- Handle missing data (empty country)

- Use partial matches (name contains “Max”)

- Create multiple routes from one decision point with a fallback

Step 1: Trigger and load your customer data

Manual Trigger

Start with a Manual Trigger node. This lets you run the workflow on demand while you are building and testing it.

Customer Datastore (getAllPeople)

Next, add the Customer Datastore (getAllPeople) node:

- Connect it to the Manual Trigger

- Configure it so that it returns all customer records

Each item typically includes fields like name and country. These fields are what you will reference in your If and Switch nodes.

Step 2: Use the If node for a single condition

First, you will use the n8n If node to filter customers from a specific country, for example all customers in the United States.

Goal

Route all customers where country = "US" to the true output, and everyone else to the false output.

Configuration steps

- Add an If node and connect it to the Customer Datastore node.

- Inside the If node, create a new condition.

- Set the Type to

String. - For Value 1, use an expression that points to the country field:

{{$json["country"]}} - Set Operation to

equals(or the equivalent in your UI). - Set Value 2 to:

US - Save the node and keep the two outputs:

- True output – all items where

countryis exactlyUS - False output – all remaining items

- True output – all items where

Tip: Use consistent country codes, such as ISO alpha-2 (US, UK, CO), to avoid mismatches between your data and your conditions.

Step 3: Combine conditions with AND / OR in the If node

The If node in n8n supports multiple conditions. You can control how they are evaluated with the Combine field.

Combine options

ALL– acts like a logical AND. Every condition must be true for the item to follow the true path.ANY– acts like a logical OR. At least one condition must be true for the item to follow the true path.

Example: Country is empty OR Name contains “Max”

In the template, there is an If node that demonstrates this combined logic. It checks two things:

- Whether the

countryfield is empty - Whether the

namefield contains the stringMax

To configure this:

- Add two string conditions in the If node:

- Condition 1:

- Value 1:

{{$json["country"]}} - Operation:

isEmpty

- Value 1:

- Condition 2:

- Value 1:

{{$json["name"]}} - Operation:

contains - Value 2:

Max

- Value 1:

Now set Combine to ANY. The result:

- Items where

countryis empty will go to the true output - Items where

namecontains “Max” will also go to the true output - All other items will go to the false output

This is a powerful pattern for building flexible filters with the If node.

Step 4: Use the Switch node for multiple branches

When you have more than two possible outcomes, multiple If nodes can quickly become hard to follow. This is where the n8n Switch node is more suitable.

Goal

Route customers based on their country value into separate branches for:

USCOUK- Any other country or missing value (fallback)

Configuration steps

- Add a Switch node and connect it to the node that provides your items (for example the Customer Datastore or a previous If node).

- Inside the Switch node, set:

- Value 1 to:

{{$json["country"]}} - Data Type to

string

- Value 1 to:

- Add rules for the countries you care about. For example:

- Rule 1:

- Value:

US - Output:

0

- Value:

- Rule 2:

- Value:

CO - Output:

1

- Value:

- Rule 3:

- Value:

UK - Output:

2

- Value:

- Rule 1:

- Set a Fallback Output, for example:

- Fallback Output:

3

This will be used for any item where

countrydoes not match US, CO, or UK, or is missing. - Fallback Output:

At runtime, the Switch node evaluates the value of {{$json["country"]}} for each item:

- If it matches

US, the item goes to output 0 - If it matches

CO, the item goes to output 1 - If it matches

UK, the item goes to output 2 - If it matches none of the above, the item goes to the fallback output 3

This gives you a clear branching structure for your workflow routing.

Working with expressions and data normalization

Both If and Switch nodes rely on expressions to read data from incoming items. In n8n, the most common pattern is to reference fields from the JSON payload of each item.

Basic expressions

To reference fields in expressions:

- Country:

{{$json["country"]}} - Name:

{{$json["name"]}}

Normalizing data before comparison

Real-world data is often inconsistent. To avoid subtle mismatches, normalize values before you compare them. You can do this in a Set node or a Function node.

Examples:

- Trim whitespace and convert to uppercase:

{{$json["country"]?.trim().toUpperCase()}} - Map full country names to codes, for example:

- “United States” → “US”

- “United Kingdom” → “UK”

This mapping can be implemented in a Function node or via a lookup table.

Normalizing early in your workflow helps your If and Switch conditions behave predictably.

Testing and debugging your conditional workflow

As you build conditional logic, testing is essential. n8n offers several features that make it easier to see how items move through your workflow.

- Execute Workflow:

- Click Execute Workflow from the editor.

- After execution, double click any node to inspect its Input and Output items.

- Logger or HTTP Request nodes:

- Insert a Logger node or an HTTP Request node in a branch to inspect what data that branch receives.

- Triggers:

- Use a Manual Trigger while developing to control when the workflow runs.

- When integrating with external systems, you can switch to a Webhook trigger and still inspect items in the same way.

- Complex conditions in JavaScript:

- For very complex logic, use a Function node.

- In the Function node, you can evaluate multiple JavaScript conditions and return a simple route key, such as:

item.route = "US"; - Then use a Switch node to route based on

item.route.

Best practices for If and Switch nodes

- Prefer Switch for many outcomes:

- Use the Switch node when you have several distinct routes.

- This is usually more readable than chaining multiple If nodes.

- Normalize data early:

- Handle case differences, extra spaces, and synonyms as soon as possible.

- This reduces unexpected behavior in your conditions.

- Keep conditions simple and documented:

- Avoid very complex logic inside a single If or Switch node.

- Use node descriptions to explain what each condition is for.

- Use fallback routes:

- Always define a fallback output in Switch nodes when possible.

- This prevents items from being lost when they do not match any rule.

- Avoid deep nesting:

- Limit deeply nested

- Limit deeply nested

Fix ‘Could not Load Workflow Preview’ in n8n

Fix “Could not Load Workflow Preview” in n8n (Step-by-Step Guide)

Seeing the message “Could not load workflow preview. You can still view the code and paste it into n8n” when importing a workflow can be worrying, especially if you need that automation working immediately.

This guide explains, in a practical and educational way, why this happens and shows you exactly how to rescue, clean, and import the workflow into your n8n instance.

What You Will Learn

By the end of this tutorial, you will know how to:

- Understand the main causes of the “Could not load workflow preview” error in n8n

- Access and validate the raw workflow JSON safely

- Import workflows into n8n even when the preview fails

- Fix version, node, and credential compatibility issues

- Use CLI or API options when the UI import is not enough

- Apply best practices so exported workflows are easier to share and reuse

1. Understand Why n8n Cannot Load the Workflow Preview

When the preview fails, it usually means the UI cannot render the workflow, not that the workflow is lost. The underlying JSON is often still usable.

Common reasons for the preview error

- Unsupported or custom nodes

Workflows created in another n8n instance may use:- Third-party or community nodes that you do not have installed

- Custom nodes created specifically for that environment

These nodes can prevent the visual preview from loading.

- Version mismatch

The workflow JSON might rely on:- Node properties added in newer n8n versions

- Features your current n8n version does not recognize

- Missing credentials

Some nodes need credentials that:- Do not exist in your instance yet

- Use a different credential type name or structure

The preview can fail if these references are inconsistent.

- Very large or complex workflows

Large JSON payloads, many nodes, or deeply nested expressions can hit UI limits and stop the preview from rendering correctly. - Invalid or corrupted JSON

If the export is truncated, malformed, or edited incorrectly, the preview cannot parse it. - Browser or UI rendering issues

In rare cases, browser extensions, caching, or UI limitations interfere with the preview, even though the JSON itself is fine.

The key idea: the preview can fail while the workflow JSON is still recoverable and importable.

2. First Rescue Step: View and Validate the Raw Workflow JSON

When the preview fails, your main goal is to get to the raw JSON. That JSON file contains everything n8n needs to reconstruct the workflow.

How to open the raw workflow code

- In the n8n UI, look for a link such as “view the code” next to the error message.

Clicking it usually opens:- A modal window with the workflow JSON, or

- A new browser tab showing the JSON

- If you downloaded an exported workflow file (typically

.json):

Open it with a text or code editor, for example:- VS Code

- Sublime Text

- Notepad++

- Any plain text editor

- Run the JSON through a validator, such as:

- jsonlint.com

- Your editor’s built-in JSON formatter or linter

This helps you detect:

- Missing or extra commas

- Broken brackets

- Encoding issues

Tip: Before editing anything, save a backup copy of the original JSON file. You can always go back if something breaks.

3. Import the Workflow JSON into n8n (Even Without Preview)

Once you have valid JSON, you can import the workflow directly into your n8n instance. The preview is optional, the import is what matters.

Step-by-step: Import a workflow JSON via the UI

- Open your n8n instance and go to the Workflows page.

- Click the Import option:

- This might be in a three-dot menu

- Or labeled as “Import” or “Import from file”

- Choose how to provide the workflow:

- Paste RAW JSON directly into the import dialog, or

- Upload the

.jsonfile you previously downloaded

- Review the import summary:

- n8n may show warnings about missing credentials or unknown nodes

- Read these messages carefully before confirming the import

- Confirm to complete the import.

Typical warnings during import and what they mean

- Missing credentials

n8n imports the workflow structure but not the actual secrets. After import you will:- Create or map the required credentials in your instance

- Attach them to the relevant nodes in the editor

- Unknown nodes

n8n has detected node types that your instance does not recognize. These are often:- Custom nodes from other installations

- Community nodes not installed in your environment

- Version incompatibility

The workflow may include:- Node parameters or properties that your n8n version does not support

- Newer node versions referenced in the JSON

In this case, you might need to edit the JSON or update n8n.

4. Fix Version and Node Compatibility Problems

If the workflow was created with newer features or custom node types, you might need to adjust the JSON before or after import.

How to inspect and edit workflow JSON safely

- Open the JSON file in a code editor.

- Search for node definitions, especially:

"type"fields that represent the node name"typeVersion"fields that indicate the node version

Compare these with the nodes available in your n8n instance.

- For custom node types:

- Install the corresponding custom node package in your n8n instance, or

- Replace the custom node with a built-in node that can perform a similar task

- If some nodes completely block import:

- Make a copy of the JSON file

- Temporarily remove or comment out (in your editor, not in actual JSON syntax) the problematic nodes

- Import the simplified workflow first

- Then re-create or replace those nodes directly in the n8n editor

- Review expressions and advanced syntax:

- Look for complex expressions like

{{$json["field"]["nested"]}}or long function-style expressions - If the import keeps failing, simplify these to static placeholder values

- After a successful import, open the workflow in the editor and rebuild the expressions there

- Look for complex expressions like

Always keep your original JSON as a reference so you can copy expressions or node configurations back as needed.

5. Reattach Missing Credentials Safely

For security reasons, credentials are never exported with workflows. This is expected behavior, not an error.

After importing, reconnect all required credentials

- In your n8n instance, create new credentials for each service used in the workflow, for example:

- API keys

- Database connections

- Cloud provider logins

- Open the imported workflow in the editor:

- Click each node that requires authentication

- In the node settings, select or create the matching credential entry

- For teams or multiple environments (dev, staging, production):

- Use environment-specific credentials in each n8n instance

- Consider using a secret manager or environment variables to standardize how credentials are created and referenced

6. Use CLI or API When UI Import Fails

If the UI keeps failing or you prefer automation, you can import workflows using the n8n CLI or REST API, depending on your setup and n8n version.

CLI / API import concepts

- Use the REST API endpoint such as

/workflowsto:- POST workflow JSON directly into n8n

- Automate imports in scripts or CI pipelines

- On self-hosted instances, check for:

- Admin utilities or CLI commands provided by your specific n8n version

- Developer or migration tools that handle workflow import programmatically

- Before sending JSON to the API:

- Confirm that the payload matches the expected workflow schema

- Ensure required top-level fields (like

nodes,connections, and metadata) are present

Because CLI and API usage can differ between releases, always refer to the official n8n documentation for your exact version for the current commands and endpoints.

7. Quick Fixes for Frequent Problems

Use this section as a checklist when troubleshooting a stubborn workflow JSON.

- Validation errors

Run the JSON through a validator and fix:- Trailing commas

- Mismatched brackets

- Encoding or copy-paste issues

- Unknown node types

If n8n reports unknown nodes:- Install the missing custom or community nodes, then restart n8n

- Or edit the JSON to replace these nodes with supported ones

- Large JSON fails to preview

Skip the preview and:- Use the “Paste RAW JSON” option directly

- Or import via file upload or API

- Browser-related issues

If you suspect the UI:- Try another browser

- Disable extensions, especially those that modify page content

- Use a private or incognito window to bypass cached scripts

8. Best Practices When Exporting and Sharing n8n Workflows

Prevent future preview and import headaches by following these recommendations whenever you share workflows with others or between environments.

- Include a README

Alongside the JSON export, add a short text file that lists:- Required custom or community nodes

- Credential types needed (for example, “Google Sheets API credential”)

- Document the n8n version

Mention the exact n8n version used to create the workflow. This helps:- Match versions for compatibility

- Decide whether to upgrade or adjust the JSON

- Use environment variables for secrets

Avoid hardcoding:- API keys

- Tokens

- Passwords

Instead, rely on environment variables and credential entries inside n8n.

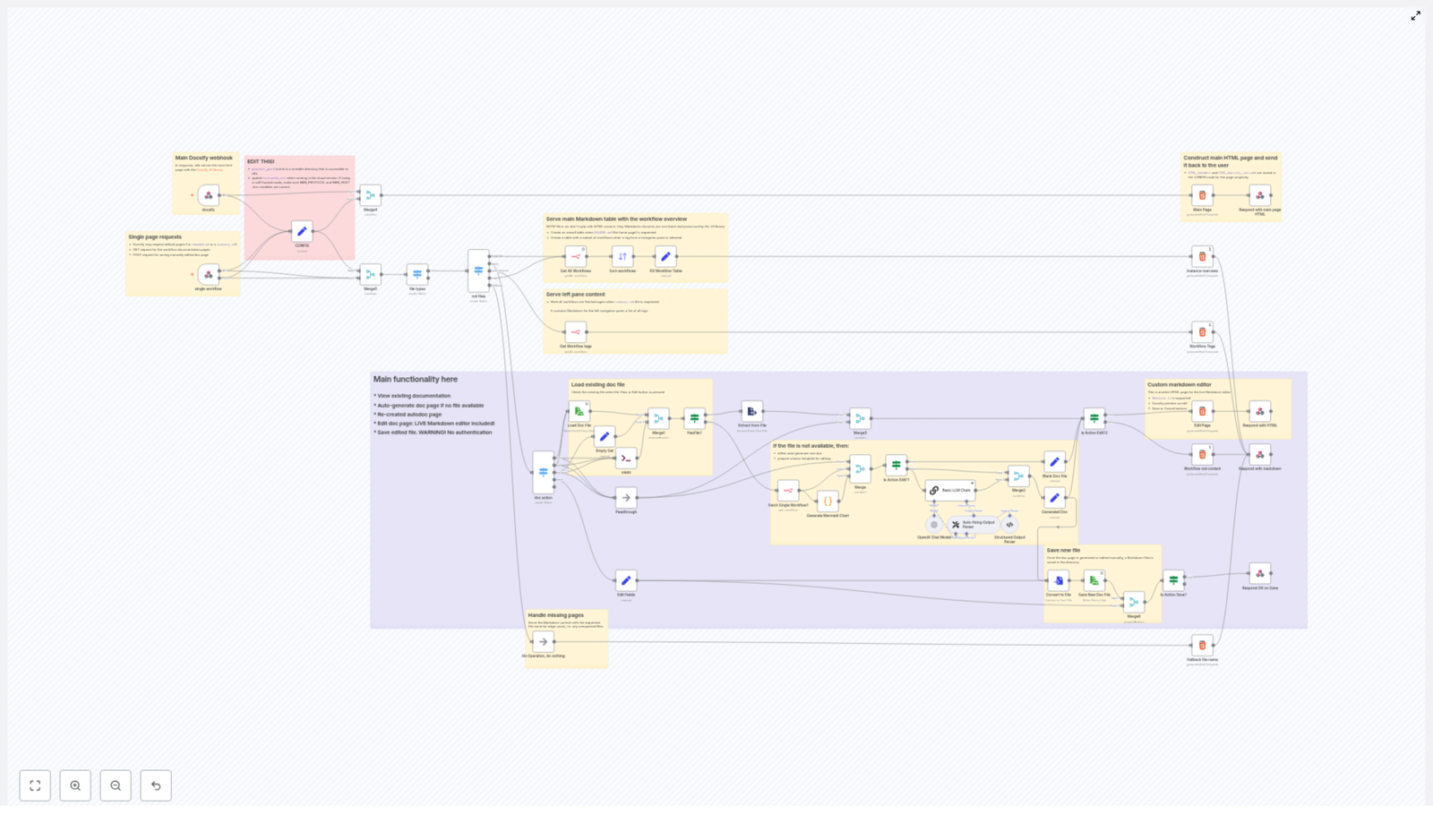

- Export smaller functional units

Instead of one huge workflow:- Split automations into smaller, focused workflows

- Make each module easier to preview, import, and debug

9. Example Checklist: Cleaning a Workflow JSON for Import

Use this simple workflow JSON cleanup checklist whenever you get the “Could not load workflow preview” error.

- Validate the JSON

Run the file through a JSON validator and fix any syntax errors. - Check node types

Search for"type"values:- Compare them with the nodes available in your n8n instance

- If you find unsupported or unknown types, temporarily remove them in a copy of the JSON

- Remove environment-specific data

Delete or replace:- Absolute file paths

- Local tokens

- IDs that only exist in the original environment

- Simplify advanced expressions

For very complex expressions:- Replace them with static placeholders so the workflow imports cleanly

- Rebuild or paste the full expressions back in the n8n editor once everything loads

10. Recap and Next Steps

The message “Could not load workflow preview” usually indicates a preview or compatibility issue, not a permanently broken workflow. In most cases you can still:

- Access and validate the raw workflow JSON

- Import the workflow via the n8n UI, CLI, or REST API

- Fix problems related to:

- Custom or unknown nodes

- Version mismatches

- Missing credentials

- Large or complex workflow structures

If you have tried the steps above and still cannot import the workflow, prepare the following information before asking for help:

- Your n8n version

- A list of any custom or community nodes installed

- The exact error messages you see in the UI or logs

- A sanitized copy of the workflow JSON with all secrets removed