Groundhogg Address Verification with n8n & Lob

If you send physical mail, you already know the pain of bad addresses: returned envelopes, wasted postage, annoyed customers, and confused ops teams. The good news is that you can automate a lot of that headache away.

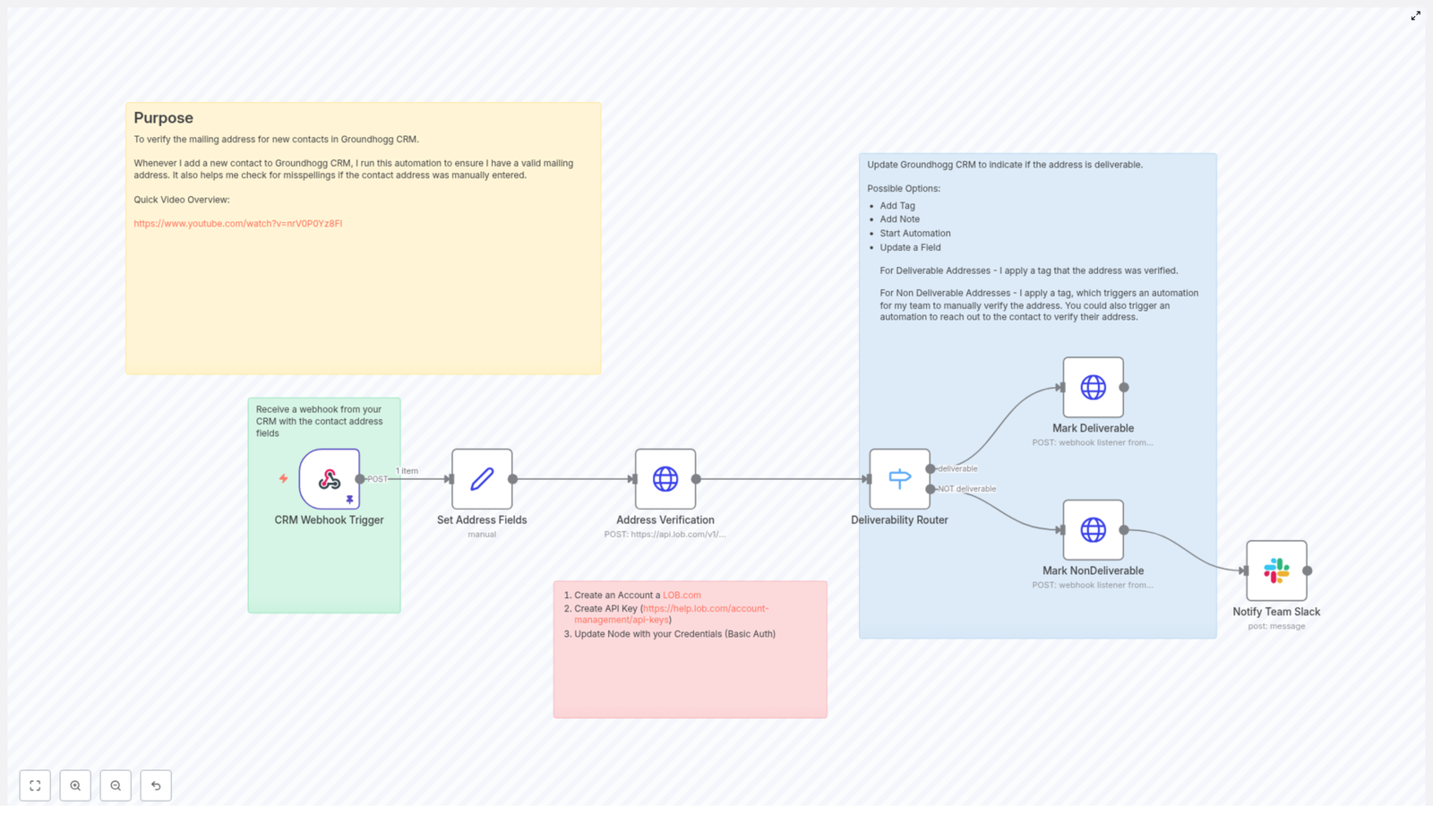

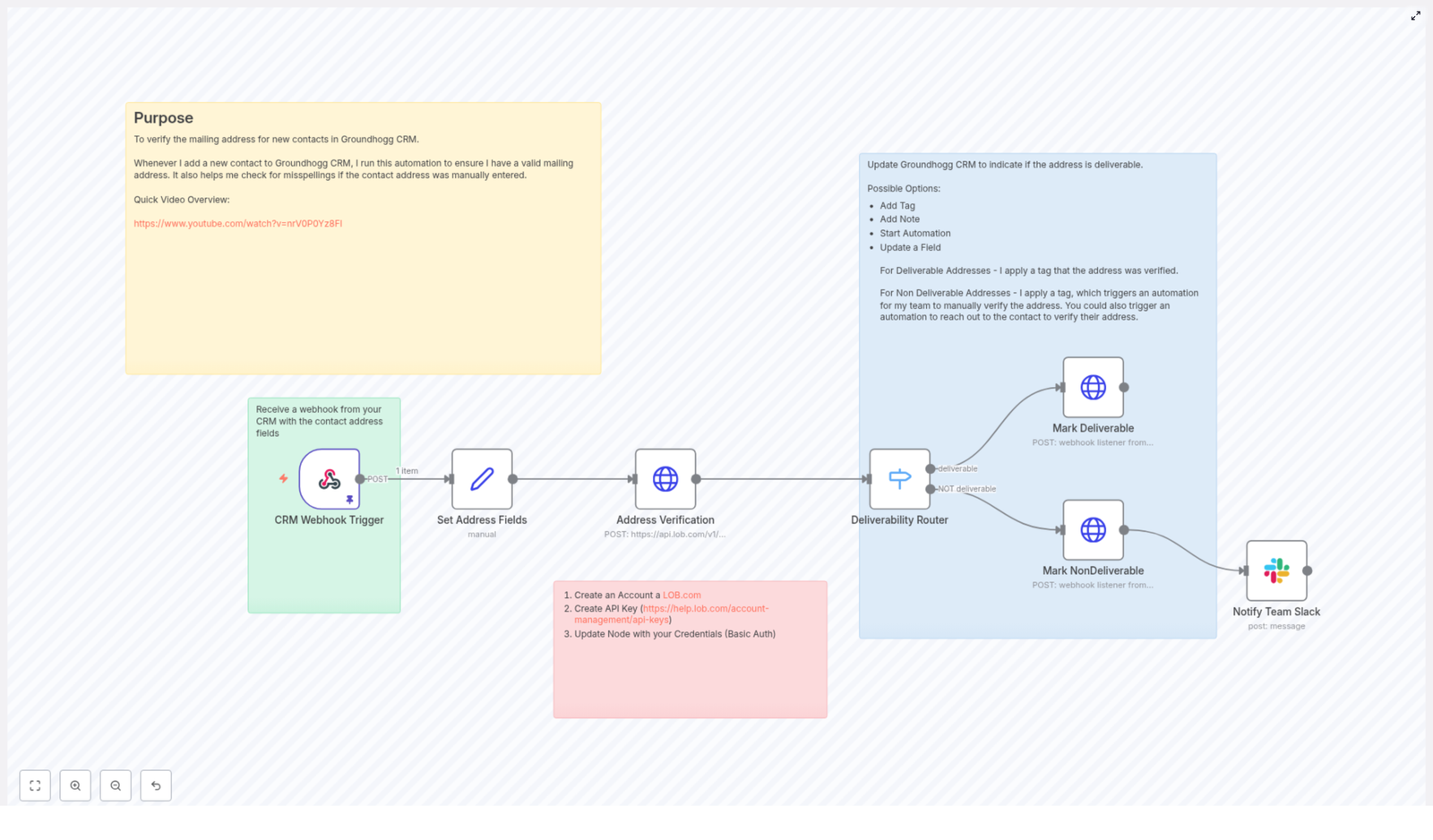

In this guide, we’ll walk through an n8n workflow template that connects Groundhogg and Lob so every new or updated contact gets an automatic address check. The workflow verifies mailing addresses as soon as they land in your CRM, tags contacts based on deliverability, and can even ping your team in Slack when something looks off.

Think of it as a quiet little assistant that sits between Groundhogg and your mail campaigns, catching typos and invalid addresses before they cost you money.

What this n8n template actually does

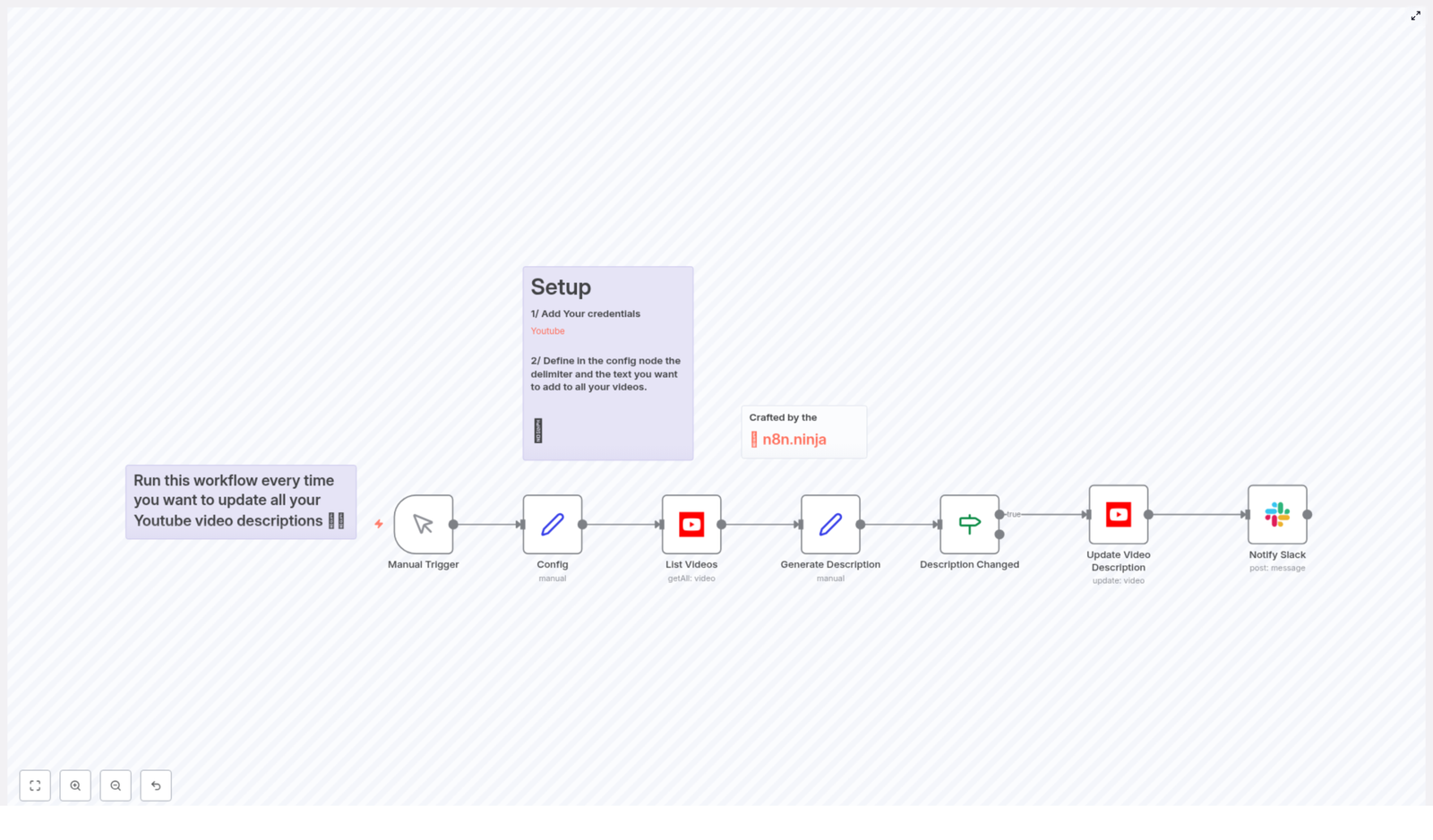

Let’s start with the big picture. This n8n workflow listens for new or updated contacts coming from Groundhogg, sends their address to Lob’s US address verification API, reads the result, and then updates your CRM and team accordingly.

Here’s the workflow in plain language:

- Groundhogg sends a webhook to n8n whenever a contact is created or updated.

- n8n maps the incoming address fields into a clean, standard format.

- The workflow calls Lob’s /v1/us_verifications endpoint to verify the address.

- Based on Lob’s deliverability result, n8n:

- Adds a Mailing Address Deliverable tag in Groundhogg when everything looks good.

- Adds a Mailing Address NOT Deliverable tag when there’s a problem.

- Optionally sends a message to a Slack channel, like #ops, so someone can manually review the address.

The end result: your CRM stays clean, your mail gets where it’s supposed to go, and your team doesn’t have to manually double-check every address.

When should you use this automation?

This template is a great fit if you:

- Run physical mail campaigns (letters, postcards, welcome kits, swag, etc.).

- Rely on accurate addresses for billing, shipping, or compliance.

- Are tired of returned mail and want to protect your campaign ROI.

- Want a simple way to tag and segment contacts based on address quality.

If you’re already using Groundhogg as your CRM and n8n for automation, plugging Lob into the mix gives you a powerful, low-maintenance address verification layer.

Why verifying addresses in Groundhogg matters

It might be tempting to skip verification and “deal with problems later”, but those problems usually come back as wasted time and money. Automated address verification helps you:

- Improve deliverability for physical mail campaigns so your letters and packages actually arrive.

- Cut down on returned mail and the postage you pay for pieces that never reach their destination.

- Catch manual-entry errors early, such as typos, missing apartment numbers, or invalid ZIP codes.

- Maintain high-quality customer data in Groundhogg, which also improves reporting and segmentation.

In other words, a small bit of automation upfront saves your team from chasing bad addresses later.

What you need before you start

Before you plug in this template, make sure you have the basics in place:

- An n8n instance with web access (self-hosted or n8n.cloud).

- A Groundhogg CRM account with the ability to send webhooks from funnels or automations.

- A Lob account and API key so you can use the US address verification endpoint.

- A Slack webhook or Slack app if you want notifications in a channel (optional but handy for ops teams).

How the workflow is structured in n8n

Let’s break down the main nodes so you know exactly what each part is responsible for and where you might want to customize things.

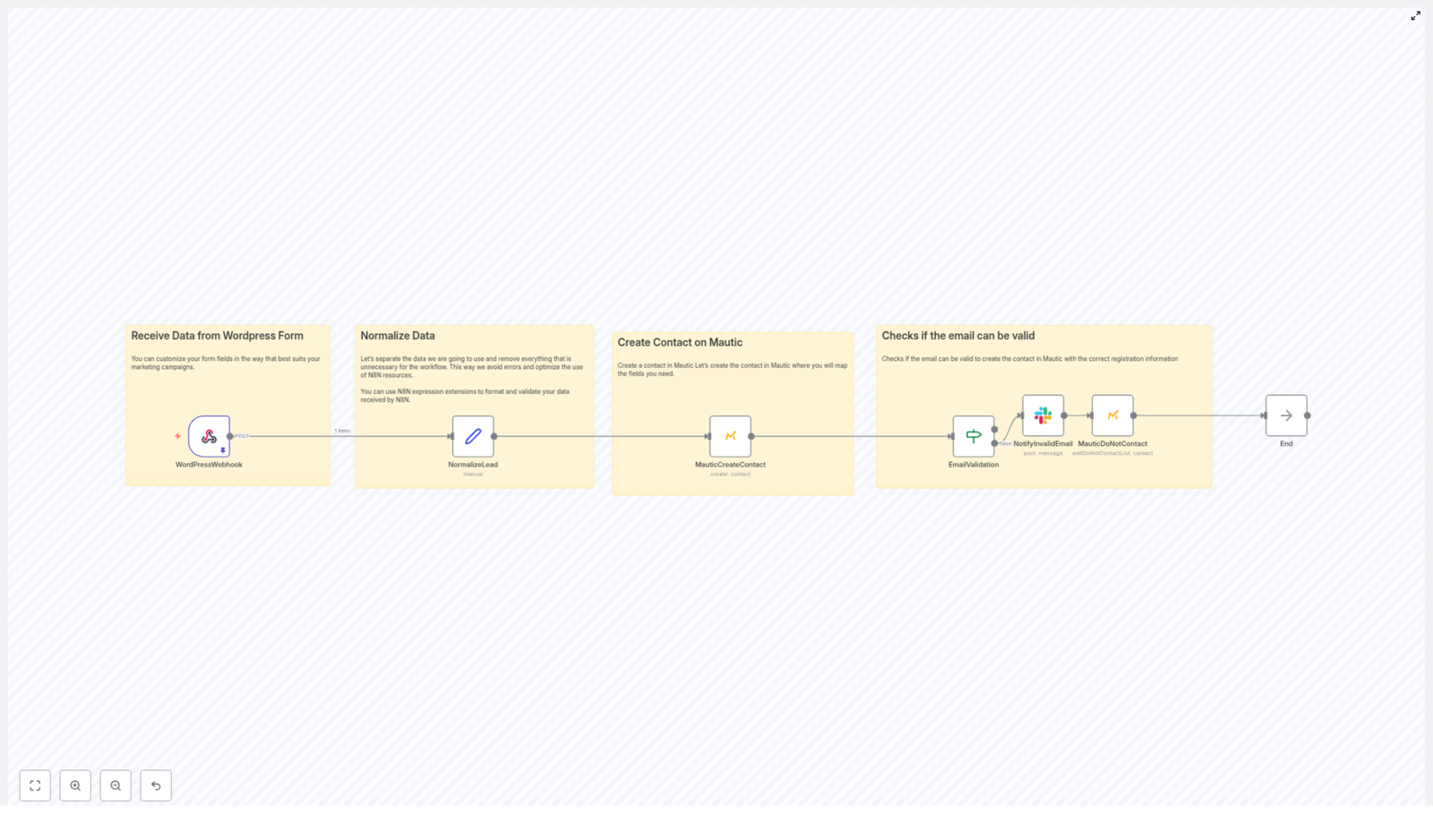

1. CRM Webhook Trigger

This is where the workflow starts. The Webhook Trigger node in n8n listens for POST requests from Groundhogg.

In Groundhogg, you configure your funnel or automation to send a webhook to the n8n URL and include the contact’s address details. Typical fields you’ll want in the payload:

- id (the Groundhogg contact ID)

- address

- address2

- city

- state

- zip_code

- email or phone (optional, but often useful for context)

A sample webhook payload from Groundhogg might look like this:

    {
      "id": "5551212",
      "email": "mr.president@gmail.com",
      "phone": "877-555-1212",
      "address": "1600 Pennsylvania Avenue NW",
      "address2": "",
      "city": "Washington",
      "state": "DC",
      "zip_code": "20500"
    }

2. Set Address Fields

Once n8n receives the webhook, the next step is to standardize and map the incoming fields. The Set node is used to make sure the data going into Lob’s API is in the format it expects.

For example, you might map the payload into a simple object like:

    {
      "address": "1600 Pennsylvania Avenue NW",
      "address2": "",
      "city": "Washington",
      "state": "DC",
      "zip_code": "20500"
    }

This keeps things consistent and makes it easier to debug if something doesn’t look right later.
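To make the mapping step concrete, here is a minimal Python sketch of what the Set node does conceptually. It assumes the payload shape shown above and renames the fields to the parameter names Lob's verification endpoint uses (primary_line, secondary_line, city, state, zip_code). The function name and the list of required fields are illustrative assumptions, not part of the template.

```python
# Hypothetical helper: validates a Groundhogg-style payload and renames
# its address fields to the parameter names used by Lob's endpoint.
REQUIRED_FIELDS = ("id", "address", "city", "state", "zip_code")


def _clean(value) -> str:
    """Turn None or padded values into a trimmed string."""
    return str(value or "").strip()


def map_to_lob_fields(payload: dict) -> dict:
    missing = [name for name in REQUIRED_FIELDS if not _clean(payload.get(name))]
    if missing:
        raise ValueError("missing required fields: " + ", ".join(missing))

    mapped = {
        "primary_line": _clean(payload["address"]),
        "city": _clean(payload["city"]),
        "state": _clean(payload["state"]).upper(),
        "zip_code": _clean(payload["zip_code"]),
    }
    # Only send a secondary line when the contact actually has one.
    secondary = _clean(payload.get("address2"))
    if secondary:
        mapped["secondary_line"] = secondary
    return mapped
```

Failing fast on missing fields keeps incomplete contacts from consuming a paid verification call.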

3. Address Verification (Lob)

Now comes the actual verification step. The workflow uses an HTTP Request node to call Lob’s US verification endpoint:

    https://api.lob.com/v1/us_verifications

It sends the mapped address fields and Lob responds with:

- Standardized address components like primary_line, city, state, and zip_code.

- A deliverability value that tells you whether the address is valid and deliverable.

Typical deliverability values include:

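- deliverable

- not deliverable

- unknown

Treat these as descriptive categories rather than exact strings. Lob’s API reference lists the literal values it returns (for example undeliverable, plus several deliverable_* variants for unit problems), so check a real response before writing conditions against them.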

Here’s an example cURL request that mirrors what n8n is doing behind the scenes, which you can use to test your Lob setup:

    curl -u YOUR_LOB_API_KEY: \
      -X POST https://api.lob.com/v1/us_verifications \
      -d primary_line='1600 Pennsylvania Avenue NW' \
      -d city='Washington' \
      -d state='DC' \
      -d zip_code='20500'

Setting up Lob in n8n

To get Lob working with this workflow, you’ll need to configure authentication properly.

- Create an account at Lob.com.

- Generate an API key (see Lob’s docs: API keys guide).

- In n8n, edit the Address Verification HTTP Request node:

- Use Basic Auth as the authentication method.

- Set your Lob API key as the username.

- Leave the password field blank.

Once that’s done, your n8n workflow can securely talk to Lob and verify addresses on demand.
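If you want to see what "API key as username, blank password" means on the wire, the following Python sketch builds the same kind of request without sending it. The function name is hypothetical and the key is a placeholder; only the endpoint URL and the Basic Auth convention come from the steps above.

```python
import base64
import urllib.parse
import urllib.request

LOB_URL = "https://api.lob.com/v1/us_verifications"


def build_lob_request(api_key: str, address: dict) -> urllib.request.Request:
    """Build (but do not send) a form-encoded POST with Basic Auth."""
    # Basic Auth encodes "username:password"; with an empty password
    # that is simply the API key followed by a colon.
    token = base64.b64encode(f"{api_key}:".encode("utf-8")).decode("ascii")
    body = urllib.parse.urlencode(address).encode("utf-8")
    return urllib.request.Request(
        LOB_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )
```

Passing the result to urllib.request.urlopen(request, timeout=10) would perform the call. A 401 response at that point usually means the key or the trailing colon is wrong.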

Routing based on deliverability

After Lob responds, the workflow needs to decide what to do next. This is where the Deliverability Router (a Switch node) comes in.

4. Deliverability Router

The Switch node checks $json.deliverability from the Lob response and sends the workflow down different paths based on that value.

- If deliverability is deliverable, the contact follows the “success” path.

- If it is not deliverable or another unexpected value, the workflow takes an alternate route.

This branching is what lets you treat good addresses, bad addresses, and “not sure” addresses differently.
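The Switch logic can be sketched in a few lines of Python. The branch names are made up for illustration, and the set of "not deliverable" strings is an assumption: it includes the wording used in this guide plus undeliverable, so confirm the exact values against your own Lob responses.

```python
# Assumed strings for the failure branch; adjust to what Lob actually returns.
NOT_DELIVERABLE_VALUES = {"not deliverable", "undeliverable"}


def route_deliverability(lob_response: dict) -> str:
    """Return the (hypothetical) branch name for a Lob response."""
    value = str(lob_response.get("deliverability") or "").strip().lower()
    if value == "deliverable":
        return "deliverable"
    if value in NOT_DELIVERABLE_VALUES:
        return "not_deliverable"
    # Missing or unrecognized values go to a human instead of being guessed.
    return "needs_review"
```

Sending anything unrecognized to a review branch means a new or unexpected value from Lob never silently tags a contact as good or bad.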

5. Mark Deliverable / Mark NonDeliverable

On each branch, HTTP Request nodes talk back to Groundhogg to update the contact. These nodes can hit a Groundhogg webhook listener or the Groundhogg API directly.

Common actions include:

- For valid addresses:

- Add the tag Mailing Address Deliverable.

- Optionally write a note or update a custom field.

- Continue onboarding or campaign automations as usual.

- For invalid addresses:

- Add the tag Mailing Address NOT Deliverable.

- Trigger a manual verification automation in Groundhogg.

- Optionally pause certain mail-related funnels until the address is fixed.

The key is that Groundhogg always stays in sync with what Lob has verified.
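As a rough illustration, the body each branch sends back to Groundhogg could be built like this. The payload shape (an id plus a tag name) is purely an assumption for the sketch; the real shape depends on how your Groundhogg webhook listener or API call is configured.

```python
import json

# Tag names come from this guide; the branch keys are hypothetical.
TAGS_BY_BRANCH = {
    "deliverable": "Mailing Address Deliverable",
    "not_deliverable": "Mailing Address NOT Deliverable",
}


def build_tag_update(contact_id: str, branch: str) -> str:
    """Return a JSON body asking Groundhogg to tag one contact."""
    if branch not in TAGS_BY_BRANCH:
        raise ValueError(f"no tag configured for branch {branch!r}")
    return json.dumps({"id": contact_id, "tag": TAGS_BY_BRANCH[branch]})
```

Raising on an unknown branch avoids tagging contacts whose result was neither clearly good nor clearly bad.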

6. Notify Team in Slack (optional but useful)

If an address comes back as non-deliverable, you probably want a human to take a quick look. The workflow can send a Slack notification to a channel like #ops whenever this happens.

The Slack node uses either a webhook URL or a Slack app to post a message that might include:

- The contact’s ID or email.

- The address Lob flagged as problematic.

- A short note like “Address verification failed, please review.”

This makes it easy for your team to jump in and fix issues before they affect your campaigns.
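For teams using a Slack incoming webhook, the message body is a small JSON object with a text field. The sketch below only builds that body; the function name and message layout are illustrative, and the contact keys follow the sample payload earlier in this guide.

```python
import json


def build_slack_payload(contact: dict, deliverability: str) -> bytes:
    """Build the JSON body for a Slack incoming webhook message."""
    parts = (
        contact.get("address"),
        contact.get("address2"),
        contact.get("city"),
        contact.get("state"),
        contact.get("zip_code"),
    )
    address = ", ".join(part for part in parts if part)
    text = (
        "Address verification failed, please review.\n"
        f"Contact: {contact.get('id', 'unknown')} ({contact.get('email', 'no email')})\n"
        f"Address: {address}\n"
        f"Lob deliverability: {deliverability}"
    )
    return json.dumps({"text": text}).encode("utf-8")
```

The bytes can be POSTed to your webhook URL with a Content-Type of application/json. Keep that URL in a credential or environment variable, since anyone who has it can post to the channel.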

How to handle different deliverability results

Once you’ve got the basic workflow running, you can decide exactly how your system should behave for each outcome.

- Deliverable:

- Add a positive verification tag (for example, Mailing Address Deliverable).

- Not deliverable:

- Add a non-deliverable tag.

- Notify your ops team in Slack for manual review.

- Optionally send an automated email or SMS asking the contact to confirm or correct their address.

- Unknown or partial:

- Route these to a “needs human review” path.

- Consider a follow-up workflow that asks the contact for more details (like apartment number or suite).

Security and privacy best practices

Since you’re working with personal data, it’s worth taking a moment to lock things down properly.

- Protect your Lob API key by using n8n’s credential storage or environment variables instead of hard-coding keys in HTTP nodes.

- Keep webhook URLs private and avoid exposing them publicly. If possible, validate incoming requests.

- Use HTTPS end to end and only store the personal information you actually need to run your business.

- Stay compliant with applicable data protection laws by handling PII responsibly.
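Two of these practices are easy to sketch in Python: reading the Lob key from the environment and checking a shared secret on incoming webhooks. The environment variable name LOB_API_KEY and the header name X-Webhook-Secret are assumptions chosen for this example, and whether Groundhogg can send a custom header depends on your setup.

```python
import hmac
import os
from typing import Mapping


def load_lob_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Read the key from the environment instead of hard-coding it."""
    key = env.get("LOB_API_KEY", "")
    if not key:
        raise RuntimeError("LOB_API_KEY is not set")
    return key


def is_trusted_request(
    headers: Mapping[str, str],
    expected_secret: str,
    header_name: str = "X-Webhook-Secret",
) -> bool:
    """Check a shared-secret header using a constant-time comparison."""
    supplied = next(
        (value for name, value in headers.items() if name.lower() == header_name.lower()),
        "",
    )
    if not expected_secret:
        return False  # never accept requests when no secret is configured
    return hmac.compare_digest(supplied.encode("utf-8"), expected_secret.encode("utf-8"))
```

hmac.compare_digest avoids leaking how many leading characters matched, which a plain == comparison can do through timing differences.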

Testing and debugging your workflow

Before you roll this out to your entire list, it’s smart to run a few test contacts through the pipeline.

- Use pinData or a manual trigger in n8n to simulate sample payloads from Groundhogg.

- Inspect the output of the Address Verification node to see exactly what Lob is returning in the JSON.

- If your Switch node is not behaving as expected, check the deliverability value and update your conditions to match Lob’s exact string.

- Log failures and add retry logic in case of temporary HTTP errors or timeouts.
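The retry idea in the last point can be sketched as a small helper with exponential backoff. Inside n8n you would more likely enable the node's built-in Retry On Fail setting; this Python version just shows the logic, and the function name, defaults, and choice of retryable exceptions are assumptions.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run call(), retrying on timeouts and connection errors."""
    for attempt in range(1, attempts + 1):
        try:
            return call()
        except (TimeoutError, ConnectionError) as exc:
            if attempt == attempts:
                raise  # out of retries: surface the last error
            delay = base_delay * 2 ** (attempt - 1)  # 1s, 2s, 4s, ...
            print(f"attempt {attempt} failed ({exc}); retrying in {delay:.1f}s")
            sleep(delay)
    raise ValueError("attempts must be at least 1")
```

Only transient errors are retried here. A 401 or a validation error will not fix itself, so those should fail immediately and be logged.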

Troubleshooting common issues

If something’s not working, here are a few quick checks that usually help:

- 401 from Lob:

- Double-check your API key.

- Confirm Basic Auth is configured correctly, with the key as the username and an empty password.

- Unexpected deliverability values:

- Log or print the full Lob response JSON.

- Update your Switch node rules to match the actual values Lob is sending.

- Groundhogg not updating:

- Verify the HTTP Request node is pointing at the correct Groundhogg listener or API URL.

- Confirm the payload includes the correct id for the contact.

Ideas to extend this workflow

Once you’ve got the basic template running smoothly, you can easily build on it. Some popular enhancements include:

- Write back standardized addresses from Lob into Groundhogg so your records are always normalized.

- Add a retry loop for addresses that come back as unknown, maybe after you collect more details from the contact.

- Trigger a two-way verification flow via email or SMS asking the contact to confirm or correct their address.

- Create a dashboard or report that tracks verification rates, error counts, and trends over time for ongoing data quality monitoring.
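For the first idea, writing standardized addresses back, a small mapping function is usually enough. This sketch assumes Lob's response carries primary_line and secondary_line at the top level and may nest city, state, and zip_code under a components object; it checks both places, so verify the layout against a real response. The output keys mirror the Groundhogg payload used earlier in this guide.

```python
def standardized_address(lob_response: dict) -> dict:
    """Map a Lob verification response back to Groundhogg-style fields."""
    components = lob_response.get("components") or {}

    def pick(name: str) -> str:
        # Prefer a top-level value, then fall back to the nested components.
        return str(lob_response.get(name) or components.get(name) or "")

    return {
        "address": pick("primary_line"),
        "address2": pick("secondary_line"),
        "city": pick("city"),
        "state": pick("state"),
        "zip_code": pick("zip_code"),
    }
```

It is safest to write these values back only on the deliverable branch, so a failed verification never overwrites what the contact originally typed.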

Costs and rate limits to keep in mind

Lob charges per verification request, so it’s worth keeping an eye on your usage. Check the pricing for your Lob plan and consider strategies like:

- Verifying addresses only when they are first created or changed.

- Batching or sampling if you handle very high volumes.

That way, you keep your data clean without any surprise bills.
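One way to verify only when an address actually changes is to store a fingerprint of the last verified address and compare it on each webhook. The sketch below is an assumption about how you might do that: the field list follows the sample payload, and where the fingerprint lives (for example a custom field on the contact) is up to you.

```python
import hashlib
from typing import Optional

ADDRESS_FIELDS = ("address", "address2", "city", "state", "zip_code")


def address_fingerprint(contact: dict) -> str:
    """Hash the address fields, ignoring case and extra whitespace."""
    normalized = "|".join(
        " ".join(str(contact.get(field) or "").lower().split())
        for field in ADDRESS_FIELDS
    )
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def needs_verification(contact: dict, last_fingerprint: Optional[str]) -> bool:
    """True when the address differs from the one verified last time."""
    return address_fingerprint(contact) != last_fingerprint
```

With this check in front of the Lob call, an update that only changes a phone number or email does not trigger another billable verification.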

Wrapping up

By connecting Groundhogg and Lob through n8n, you get a simple but powerful automation that:

- Reduces manual address checking.

- Improves mail deliverability and campaign performance.

- Keeps your CRM data accurate and actionable.

The template includes everything you need to get started quickly: a webhook trigger, field