OpenAI Citations for File Retrieval (RAG)

This guide walks you through an n8n workflow template that adds clear, file-based citations to answers generated by an OpenAI Assistant that uses file retrieval or a vector store. You will learn how to extract citation metadata from OpenAI, turn file IDs into readable filenames, and format the final response as Markdown or HTML for reliable Retrieval-Augmented Generation (RAG).

What you will learn

By the end of this tutorial, you will be able to:

- Explain why citations are important in RAG workflows.

- Understand how OpenAI assistants expose file annotations and metadata.

- Build an n8n workflow that:

- Sends user questions to an OpenAI Assistant with a vector store.

- Retrieves the full assistant thread to capture all annotations.

- Parses messages to extract citation objects and file IDs.

- Looks up file metadata from the OpenAI Files API.

- Formats the final answer with human-readable citations.

- Customize the citation format, for example inline notes, footnotes, or links.

Why add citations to RAG responses?

Retrieval-Augmented Generation combines a language model with a vector store of documents. The model retrieves relevant content from your files and then generates an answer based on those snippets.

Out of the box, the assistant may know which files and text fragments it used, but the user often only sees a plain natural language answer. It may be unclear:

- Which file a specific sentence came from.

- Whether the answer is grounded in real documents.

- How to verify or audit the response later.

Adding structured citations solves this. It improves:

- Transparency – users can see where each fact came from.

- Traceability – you can trace text snippets back to source files.

- Trust – especially important for documentation, compliance, or any system that needs source attribution.

Concepts you need to know first

OpenAI Assistant with vector store (file retrieval)

In this setup, your OpenAI Assistant is connected to a set of uploaded files. When a user asks a question, the assistant:

- Retrieves relevant file chunks from the vector store.

- Generates an answer using those chunks as context.

- Attaches annotations to the generated text that point back to:

file_id– the OpenAI ID of the source file.text– the exact fragment extracted.- Offsets or positions of the fragment in the message.

Thread messages and annotations

OpenAI assistants work with threads. A thread contains all the messages exchanged between the user and the assistant. The assistant’s summarized reply that you see in n8n may not include all the raw annotation data, so you typically need to:

- Call the OpenAI API to retrieve the full thread messages.

- Inspect each message’s

contentfield. - Locate annotation arrays such as

text.annotations.

File metadata lookup

An annotation contains a file_id, but users need something more readable, like a filename. To bridge that gap you:

- Call the OpenAI Files API with each

file_id. - Retrieve metadata such as

filename. - Use that filename in your citation text.

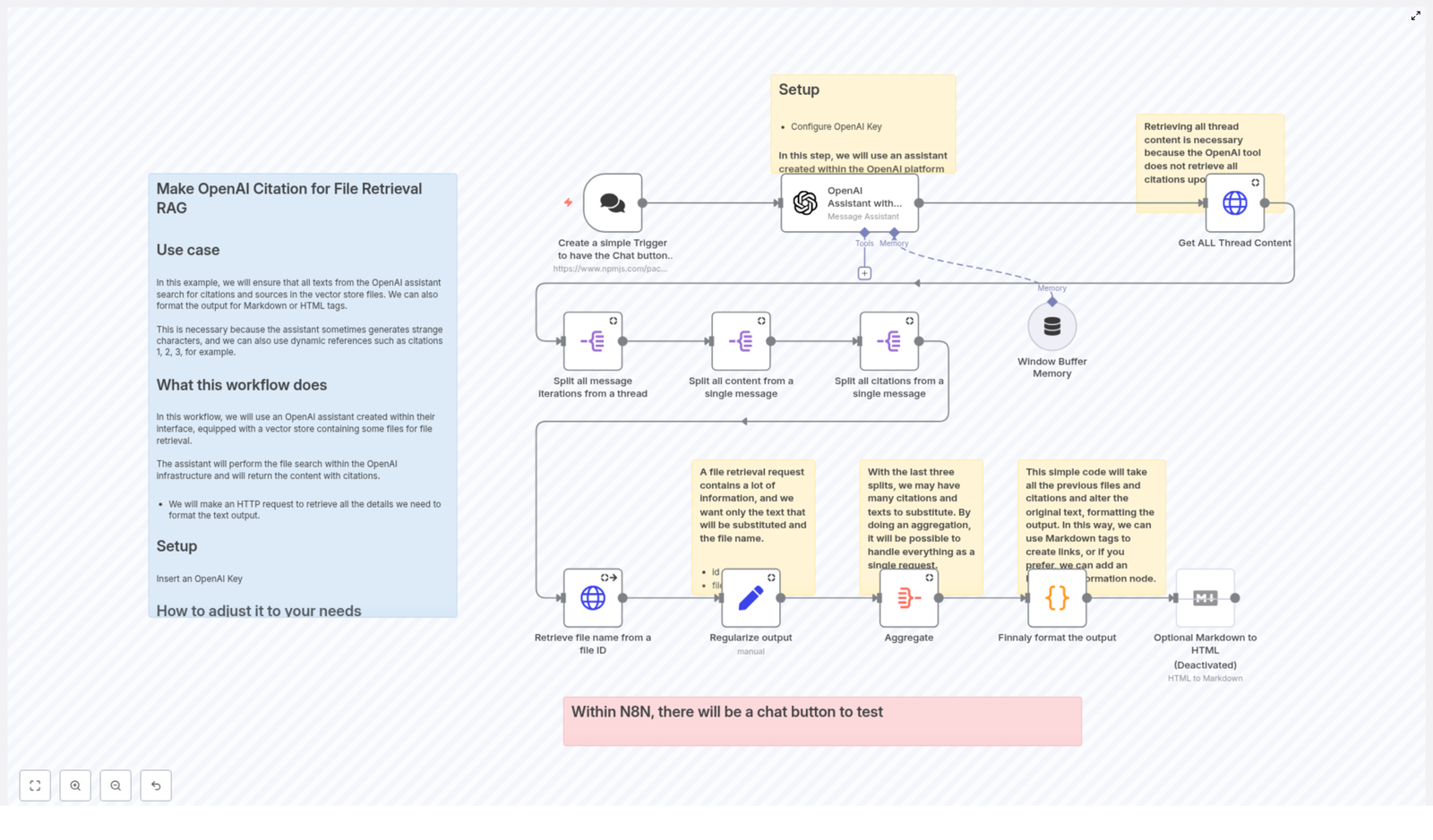

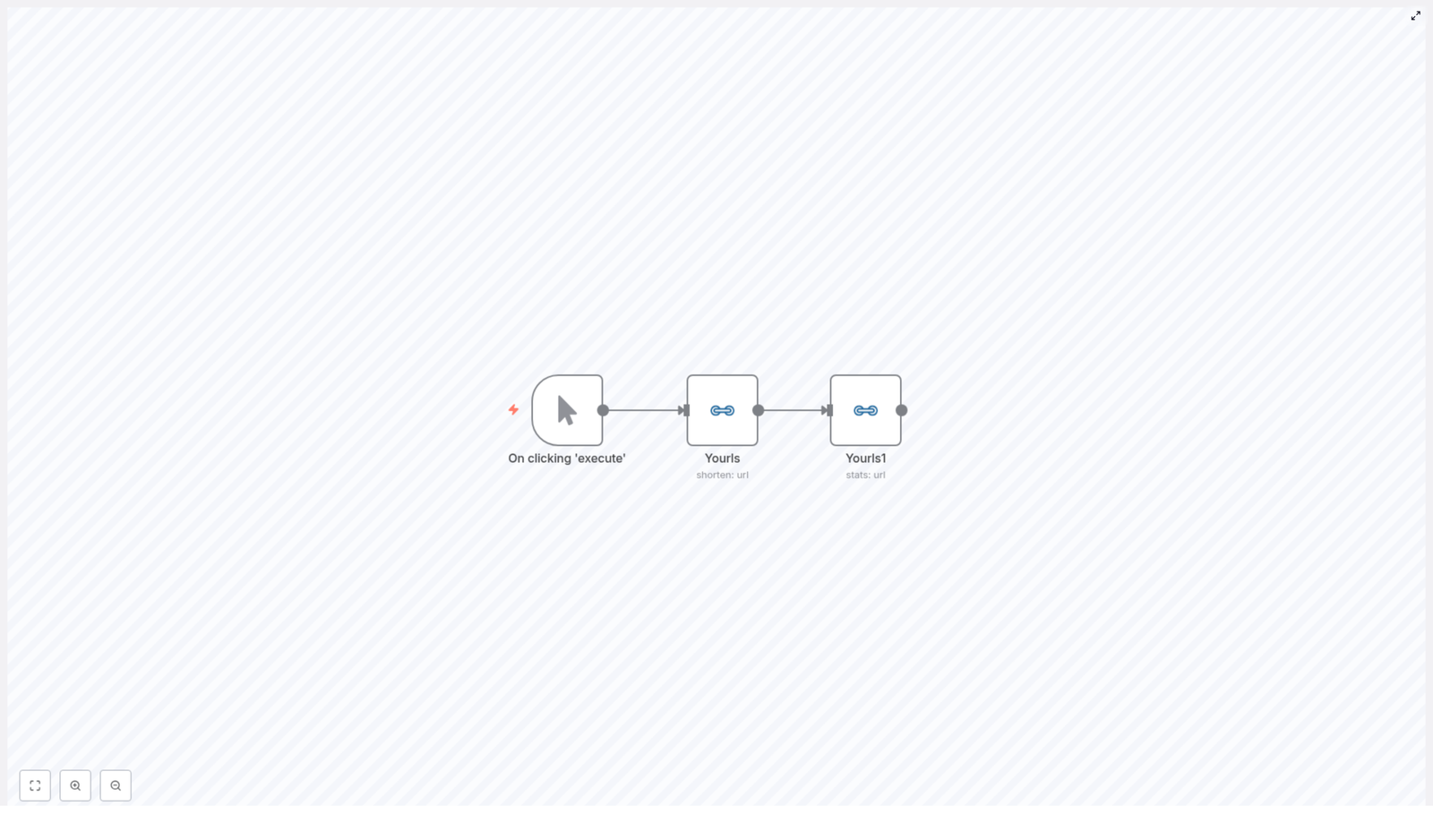

How the n8n workflow is structured

This tutorial is based on an n8n workflow that follows this high-level flow:

- User sends a question via a Chat Trigger in n8n.

- n8n sends the question to an OpenAI Assistant with a vector store.

- After the assistant responds, n8n retrieves the full thread messages from OpenAI.

- n8n splits and parses the messages to extract annotations and file IDs.

- For each file ID, n8n calls the OpenAI Files API to get the filename.

- All citation data is normalized and aggregated into a consistent structure.

- A Code node formats the final answer, inserting citations and optionally converting Markdown to HTML.

Step-by-step: building the citation workflow in n8n

Step 1 – Capture user questions with a Chat Trigger

Start with a Chat Trigger node. This node creates a chat interface inside n8n where users can type questions. When the user submits a message:

- The chat trigger fires.

- The workflow starts and passes the question to the next node.

Step 2 – Send the query to the OpenAI Assistant with vector store

Next, add an OpenAI Assistant node that is configured with your vector store (file retrieval). This node:

- Receives the user question from the Chat Trigger.

- Forwards it to the OpenAI Assistant that has access to your uploaded files.

- Gets back an answer that may contain annotations referencing:

file_idfor each source file.- Extracted

textsegments used in the answer.

At this point, you have a usable answer, but the raw response might not fully expose all the annotation details that you need for robust citations.

Step 3 – Retrieve the full thread content from OpenAI

To get all the citation metadata, you should retrieve the complete thread from OpenAI. Use an HTTP Request node that:

- Calls the OpenAI API endpoint for thread messages.

- Uses the thread ID returned by the Assistant node.

- Returns every message in the thread, including all annotations.

This step is important because the assistant’s immediate reply may omit some annotation payloads. Working with the full thread ensures you do not miss any citation data.

Step 4 – Split and parse the thread messages

Once you have the full thread, you need to extract the annotations from each message. In n8n you can:

- Use a Split In Batches or similar split node to iterate over each message in the thread.

- For each message, inspect its

contentstructure. - Locate arrays that hold annotations, for example

text.annotations.

Each annotation typically contains fields like:

file_id– the OpenAI file identifier.text– the snippet extracted from the file.- Offsets or positions that indicate where the text appears.

Step 5 – Look up file metadata from the OpenAI Files API

Now that you have a list of annotations with file_id values, the next step is to turn those IDs into human-friendly filenames. For each annotation:

- Call the OpenAI Files endpoint with the

file_id. - Retrieve the associated metadata, typically including

filename. - Combine that filename with the extracted text to build a richer citation object.

Step 6 – Normalize and aggregate citation data

Different messages may reference the same file or multiple fragments from that file. To make formatting easier:

- Standardize each citation as a simple object, for example:

{ id, filename, text }. - Collect all citation objects into a single array so you can process them in one pass.

At this stage you have:

- The assistant’s answer text.

- A list of citation records that link fragments of that answer to specific filenames.

Step 7 – Format the final output with citations

The last main step is to inject citations into the assistant’s answer. You typically do this in an n8n Code node and optionally follow it with a Markdown node if you want HTML output.

Common formatting options include:

- Inline citations such as

(source: filename). - Numbered footnotes like

[1], with a reference list at the end. - Markdown links if your files are accessible via URL.

Example n8n Code node: simple inline citations

The following JavaScript example shows how a Code node can replace annotated text segments in the assistant’s output with inline filename references. It assumes:

- The assistant’s answer is stored at

$('OpenAI Assistant with Vector Store').item.json.output. - The aggregated citation data is available as

$input.item.json.data, where each entry hastextandfilename.

// Example n8n JS (Code node)

let saida = $('OpenAI Assistant with Vector Store').item.json.output;

for (let i of $input.item.json.data) { // replace the raw text with a filename citation (Markdown-style) saida = saida.replaceAll(i.text, ` _(${i.filename})_ `);

}

$input.item.json.output = saida;

return $input.item;

This logic walks through each citation, finds the corresponding text in the assistant response, and appends an inline reference such as _(my-file.pdf)_.

Example: numbered citations and reference list

If you prefer numbered citations, you can extend the logic. The idea is to:

- Assign a unique index to each distinct

file_id. - Replace each annotated text segment with a marker like

[1]or[2]. - Append a formatted reference list at the end of the answer.

// Pseudocode to create numbered citations

const citations = {};

let idx = 1;

for (const c of $input.item.json.data) { if (!citations[c.file_id]) { citations[c.file_id] = { index: idx++, filename: c.filename }; } // replace c.text with `[${citations[c.file_id].index}]` or similar

}

// append a formatted reference list based on citations

In a real implementation, you would perform the string replacements in the answer text and then build a block such as:

[1] my-file-1.pdf

[2] another-source.docx

Formatting choices for your UI

Depending on your front end, you can adjust the final citation style. Here are some options:

- Simple inline citation Replace the text with something like

(source: filename)if you want minimal changes to the answer structure. - Numbered footnotes Use numeric markers in the text and list all sources at the bottom. This keeps the main answer clean while still being traceable.

- Markdown to HTML If your UI is web based, run the final Markdown through an n8n Markdown node to convert it to HTML.

- Clickable links When files are accessible via URL, format citations as Markdown links, for example:

[filename](https://.../file-id).

Best practices for reliable citations

1) Always retrieve the complete thread

Do not rely only on the immediate assistant reply. Make a separate request for the full thread messages so you have all annotation payloads needed to resolve citations accurately.

2) Normalize text before replacement

Annotation text may include variations in whitespace or punctuation. To avoid incorrect replacements:

- Trim and normalize whitespace where appropriate.

- Consider using character offsets from the annotation instead of naive string matching.

3) Deduplicate repeated citations

The same file or fragment can appear multiple times in an answer. To keep citations tidy:

- Deduplicate entries by

file_id. - Reuse the same citation index for repeated references.

4) Handle partial and ambiguous matches

Short text fragments can accidentally match unrelated parts of the answer if you use a simple replaceAll. To reduce this risk:

- Use offsets when available to target exact positions.

- Wrap replacements in unique markers during processing, then clean them up.

- Be cautious with very short snippets that could appear in many places.

Troubleshooting common issues

- No annotations returned Check that your OpenAI Assistant is configured to include file metadata in its tool outputs. If needed, verify that you are using the thread retrieval approach and not only the immediate reply.

- File lookup fails Confirm your OpenAI API credentials and permissions. Make sure the

file_idactually exists in the assistant’s vector store and that you are querying the correct project or environment. - Corrupt or broken output after replacement Inspect the original text and the annotation snippets. If replacements are misaligned, switch from naive

replaceAllto offset-based replacements or more precise string handling.

Security and privacy considerations

Citations expose details about your source files, so treat them with care:

- Only display filenames or metadata that are safe to show to end users.

- If files contain sensitive information, consider masking or redacting parts of the filename or path.

- Review your data handling policies to ensure compliance with internal and external regulations.

Recap and next steps

You have seen how to build an n8n workflow that:

- Captures user queries through a Chat Trigger.

- Uses an OpenAI Assistant with a vector store to answer questions.

n8n Website Analyzer with GPT-4 & Serper

How a Stressed SEO Marketer Turned n8n, GPT‑4.1, and Serper Into a Website Analyzer Superpower

By 9:30 a.m., Lina already had a headache.

Her manager had just dropped a list of 120 URLs into her inbox with a cheerful note: “Need titles, meta descriptions, summaries, and keyword patterns for all of these by tomorrow. Should help with our SEO roadmap.”

Lina was an experienced SEO marketer, not a magician. She knew what this meant in practice: endless tab switching, copy pasting text into documents, scanning for patterns, and trying to guess which keywords actually mattered. She had done this routine manually before. It was slow, repetitive, and error prone.

This time, she decided it had to be different.

The breaking point: when manual analysis stops scaling

Lina opened the first few pages from the list. Each one had messy layouts, pop ups, navigation menus, footers, and cookie banners. The information she actually needed was buried in the main content.

- She needed page titles and meta descriptions for quick SEO checks.

- She needed concise summaries to share with her content team.

- She needed keyword patterns, not just guesses, but structured n‑gram analysis of unigrams, bigrams, and trigrams.

Doing this manually for 10 pages was annoying. For 120 pages it was a nightmare.

She had used n8n before for simple automations like sending Slack alerts and syncing form submissions, so a thought crossed her mind: “What if I can turn this into an automated website analyzer?”

The discovery: an n8n Website Analyzer template

Searching for “n8n website analyzer” led her to a reusable workflow template built around GPT‑4.1‑mini and Serper. It promised exactly what she needed:

- Automated page scraping.

- LLM powered summarization.

- N‑gram analysis with structured outputs.

The more she read, the more it felt like this template was designed for people exactly like her: content teams, SEO specialists, and developers who needed fast, structured insights from web pages at scale.

The workflow combined three main ingredients:

- n8n for orchestration and low code automation.

- Serper as the search and scraping layer that fetched clean content.

- GPT‑4.1‑mini to parse, summarize, and analyze the text.

Instead of manually reading every page, Lina could have an AI agent do the heavy lifting, then plug the results straight into her reporting stack.

Inside the “Website Analyzer” brain

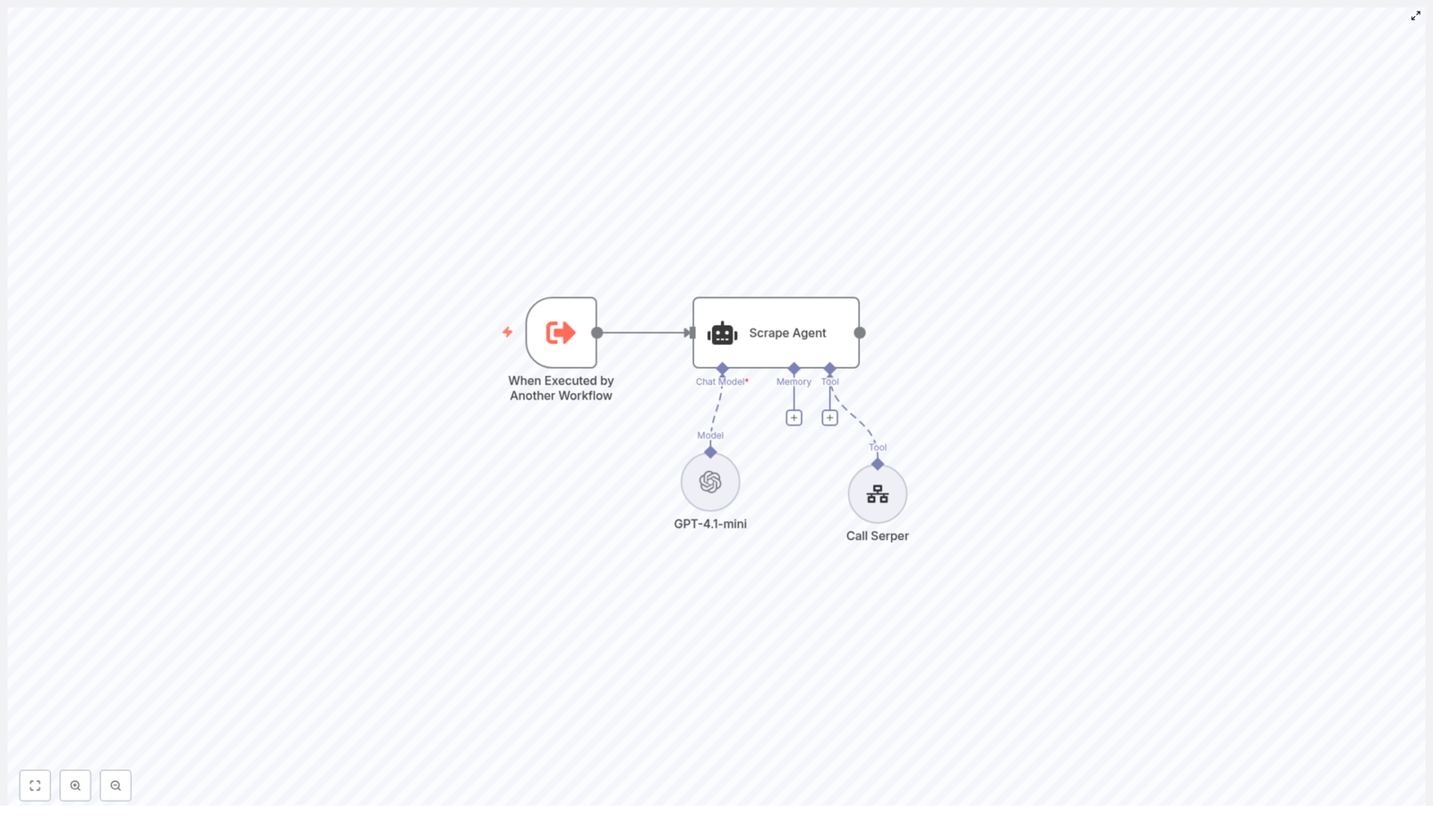

Before she trusted it with her 120 URLs, Lina wanted to understand how this n8n workflow actually worked. The template followed an AI agent pattern, with a few key nodes acting like parts of a small team.

The core nodes Lina met along the way

- When Executed by Another Workflow – A trigger node that let this analyzer run on demand. Lina could call it from other workflows, from a schedule, or from a simple webhook.

- Scrape Agent – A LangChain style agent node that coordinated the language model and the tools. This was the “brain” that decided what to do with each URL.

- GPT‑4.1‑mini – The LLM responsible for parsing the scraped text, creating summaries, and performing n‑gram analysis.

- Call Serper – A separate workflow used as a tool that actually fetched the web page, cleaned the HTML, and returned usable content.

In other words, the workflow did not just “call GPT on a URL.” It followed a clear step by step process that made sense even to a non developer like Lina.

The rising action: turning a template into her personal analyzer

Lina imported the template into her n8n instance and watched the nodes appear in the editor. It looked more complex than the simple automations she was used to, but the structure was logical.

Step 1 – Bringing the template into n8n

She started by importing the workflow JSON file. Once loaded, she checked:

- That all nodes were connected correctly.

- That the “When Executed by Another Workflow” trigger was at the top.

- That the Scrape Agent node pointed to the “Call Serper” tool workflow.

With the skeleton in place, it was time to give the analyzer access to real data.

Step 2 – Wiring up GPT‑4.1‑mini and Serper credentials

Without valid API keys, the workflow was just a nice diagram. Lina opened the credentials panel and configured two key integrations:

- OpenAI credentials for the GPT‑4.1‑mini node, where she pasted her API key so the agent could perform the summarization and analysis.

- Serper credentials for the “Call Serper” workflow, ensuring that the URL fetch node would return either clean text or HTML that the tool could sanitize.

Once saved, the red warning icons disappeared. The agent was ready to think and browse.

Step 3 – Understanding the agent’s step by step behavior

Lina opened the Scrape Agent configuration and followed the logic. For each URL, the workflow would:

- Receive a request from another workflow or trigger with the URL to analyze.

- Call the Serper tool to fetch the page HTML and extract the main textual content, avoiding navigation bars, ads, and boilerplate.

- Send the cleaned content to GPT‑4.1‑mini with a structured prompt that requested:

- Page title.

- Meta description, or a generated summary if none existed.

- A concise 2 to 3 sentence summary of the page.

- N‑gram analysis including unigrams, bigrams, and trigrams.

- Return a structured response that other workflows could consume as JSON, send to a webhook, export as CSV, or write directly into a database.

This was exactly the workflow she had been doing manually, only now it could run across dozens or hundreds of pages without her supervision.

The turning point: crafting the perfect prompt

When Lina clicked into the system prompt for the Scrape Agent, she realized how much power lived in a few paragraphs of instruction. The template already included a solid default prompt, but she wanted to understand the rules before trusting the n‑gram output.

The core prompt guidelines focused on keeping the analysis clean and consistent:

- Analyze only the main textual content, ignore navigation, sidebars, footers, and ads.

- Normalize the text before extracting n‑grams:

- Convert to lowercase.

- Remove punctuation.

- Strip stop words.

- Return the top 10 items for unigrams, bigrams, and trigrams when available.

- Exclude n‑grams that contain only stop words.

She kept those rules but added a few tweaks of her own, such as slightly adjusting the way summaries were phrased to match the tone her team preferred.

The prompt became the contract between her expectations and the model’s behavior. With that in place, she felt confident enough to run a real test.

First run: from a single URL to a reliable JSON payload

To avoid surprises, Lina started with one URL from her list. She triggered the workflow manually inside n8n, watched the execution log, and waited for the result.

The output arrived as a clean JSON object, similar to this structure:

{ "url": "https://example.com/page", "title": "Example Page Title", "meta_description": "Short meta description or generated summary", "summary": "2-3 sentence summary", "n_grams": { "unigram": ["word1", "word2", "word3"], "bigram": ["word1 word2", "word2 word3"], "trigram": ["word1 word2 word3"] }

}

Everything she needed was there: title, meta description, summary, and structured keyword patterns. No more scanning paragraphs and guessing which phrases mattered.

Scaling up: testing, iterating, and debugging like a pro

With the first success, Lina queued a handful of URLs. She used n8n’s execution view to monitor each run and confirm the outputs were consistent.

Iterating on the workflow

- She checked that Serper always returned enough text. For pages with very little content, she learned to verify whether the site relied heavily on client side rendering. In those cases, a headless browser or pre render service could help capture the final HTML.

- She tightened the LLM prompt to reduce hallucinations, explicitly asking GPT‑4.1‑mini to avoid inventing facts and to state clearly when information was missing.

- She adjusted the number of n‑gram results when she wanted a shorter list for quick overviews.

Each small tweak improved the reliability of the analyzer. Soon, she felt ready to let it loose on the full list of 120 URLs.

Beyond the basics: extending the Website Analyzer

Once the core analyzer was stable, Lina started to see new possibilities. The template was not just a one off solution, it was a foundation she could extend as her needs evolved.

Language detection and smarter n‑grams

Some of the URLs her team tracked were in different languages. She added a language detection step before the n‑gram extraction so that the workflow could:

- Identify the page language automatically.

- Route the content to language specific stop word lists.

- Produce cleaner, more meaningful n‑gram results in each language.

Content scoring and SEO strength

Next, she used GPT‑4.1‑mini not only to summarize, but also to score content based on:

- Readability.

- SEO strength.

- Relevance to a given keyword set.

These scores helped her prioritize which pages needed urgent optimization and which were already performing well.

Storage, dashboards, and long term insights

Instead of exporting CSV files manually, Lina connected the workflow to her database. Each run now:

- Stored analyzer outputs in a structured table.

- Fed data into a dashboard built on top of Elasticsearch and a BI tool.

- Allowed her to search across titles, summaries, and n‑grams over time.

What started as a one day emergency task turned into a sustainable system for ongoing content intelligence.

Staying responsible: ethics, legality, and best practices

As she scaled the analyzer, Lina knew she had to be careful. Scraping public content did not mean she could ignore ethics or legal considerations.

She put a few safeguards in place:

- Checking

robots.txtand site terms before adding a domain to her automated runs. - Implementing rate limits and exponential backoff in n8n to avoid overloading target servers.

- Filtering and redacting any sensitive personal data before storing or sharing outputs.

These steps kept the workflow aligned with both technical best practices and company policies.

Performance and cost: keeping the analyzer lean

As the number of URLs grew, Lina became more conscious of API costs and performance. She made a few optimizations:

- Fetching only the necessary text and stripping scripts, styles, and images at the scraping stage.

- Caching results for URLs that were analyzed repeatedly, so she did not pay for the same page twice.

- Using GPT‑4.1‑mini for routine analysis, reserving larger models only for deep dives on high value pages.

With these adjustments, the workflow stayed fast and affordable even as her team expanded its coverage.

What changed for Lina and her team

By the end of the week, Lina had more than just a completed task list. She had built an internal Website Analyzer agent that her team could reuse for:

- Automated SEO page audits and keyword extraction.

- Content research and competitor analysis with quick summaries and topic clusters.

- Data enrichment for indexing and cataloging large sets of URLs.

Instead of spending hours on manual copy paste work, she could now focus on strategy, content ideas, and actual optimization. The tension that began her week had turned into a sense of control.

Your turn: building your own n8n Website Analyzer

If you recognize yourself in Lina’s story, you can follow a similar path in your own n8n instance.

- Import the Website Analyzer template into n8n and verify the node connections.

- Configure your OpenAI credentials for the GPT‑4.1‑mini node and set up Serper (or another scraping tool) for clean content extraction.

- Customize the Scrape Agent system prompt so it matches your analysis needs, including n‑gram rules and summary style.

- Test with a few URLs, inspect the JSON outputs, then iterate on the prompt and node settings.

- Once stable, scale up, add storage or dashboards, and extend with language detection, scoring, or rate limiting as needed.

The template gives you a ready made AI agent that combines orchestration, web crawling, and LLM analysis into one reusable workflow. You do not have to start from scratch or build your own tooling layer.

Start now: import the template, plug in your OpenAI and Serper credentials, and run your first test URL. From there, you can shape the analyzer around your own SEO, content, or data enrichment workflows.

Automate Strava to Twitter with n8n

Automate Strava to Twitter with n8n

On a cold Tuesday evening, Alex stared at their phone, thumb hovering over the Twitter (X) app. Another 10K run logged in Strava, another manual post to write, another few minutes spent formatting distance, time, and a half-hearted caption.

It was not the writing that bothered Alex. It was the repetition. Every run, every ride, every swim followed the same pattern: open Strava, check stats, open Twitter, type the same style of message, add a hashtag or two, hit publish. On busy weeks, Alex simply stopped sharing workouts altogether.

As a data-loving runner and part-time marketer, Alex wanted something better: a consistent, automatic way to share Strava activities on Twitter without thinking about it every time. That search led them to n8n and a simple Strava to Twitter automation template that quietly changed their routine.

The problem: manual sharing and inconsistent updates

Alex’s goals were straightforward:

- Stop manually posting every single workout

- Share consistent, polished messages that included key stats like distance and time

- Optionally drop in hashtags, team mentions, or branded phrases without retyping them

Yet every time Alex tried to stay consistent, life got in the way. Some workouts never made it to Twitter at all. Others had messy formatting or missing metrics. The whole process felt fragile and time consuming.

As someone already experimenting with automation, Alex wondered: “Could n8n listen for new Strava activities and tweet for me automatically?”

The discovery: a tiny n8n workflow with a big impact

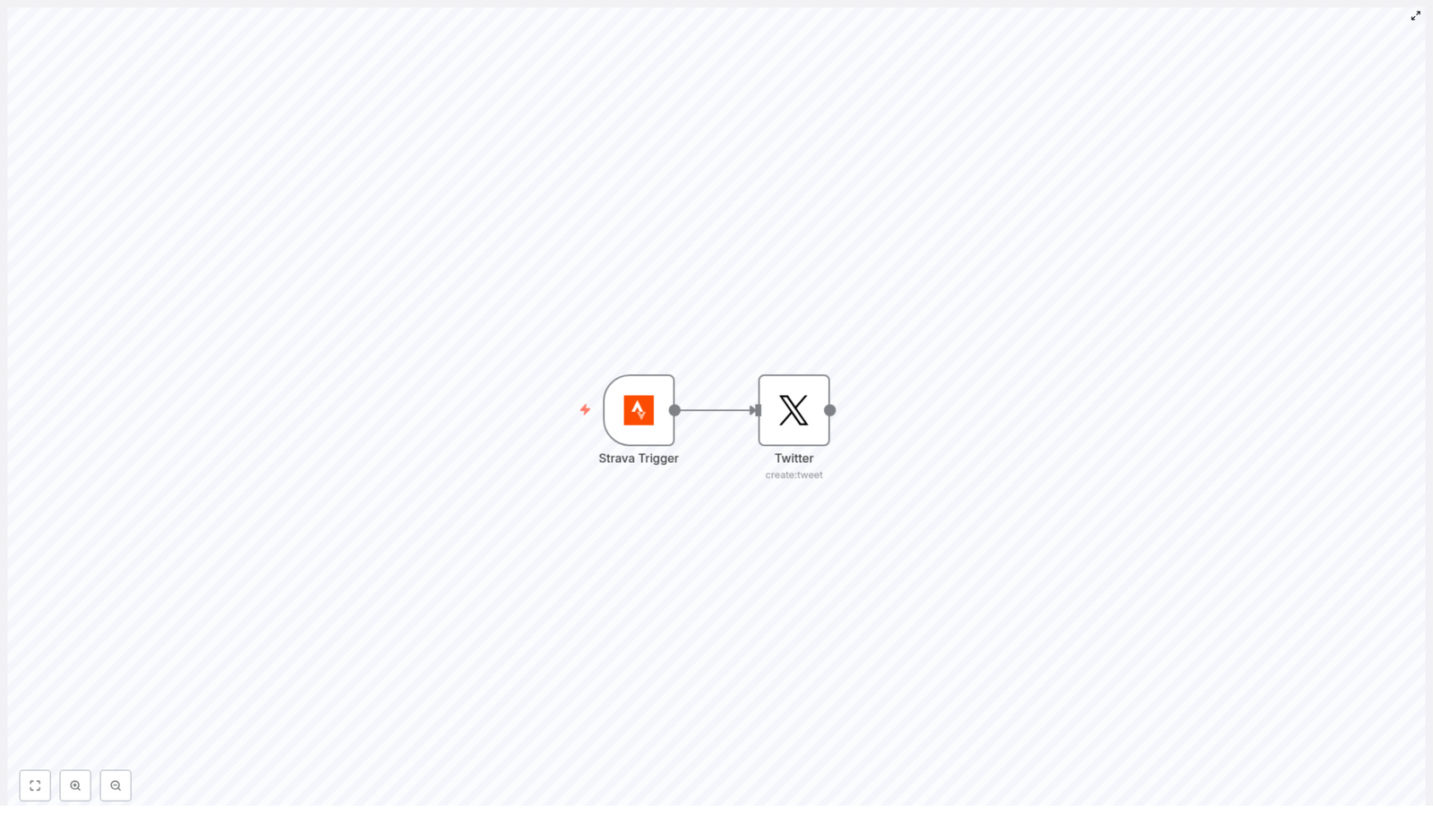

During a late-night search for automation ideas, Alex came across an n8n workflow template: a Strava to Twitter integration that promised to post new activities automatically.

The idea was surprisingly simple. The entire workflow relied on just two nodes:

- Strava Trigger – listens for new Strava activities via webhooks

- Twitter (create:tweet) – posts a tweet using the data from that activity

In Alex’s mind, this looked like a tiny assembly line. Strava would send a webhook when a new workout was created, the Strava Trigger node would catch it, and the Twitter node would format a tweet and publish it right away. No more copy-pasting stats. No more forgetting to post after a long run.

There was only one question left: could Alex actually get it set up?

Setting the stage: accounts, keys, and credentials

Before the real magic could happen, Alex needed the basic pieces in place. If you follow along with their story, you will need the same prerequisites:

- An n8n instance, whether desktop, cloud, or self-hosted

- A Strava account and a Strava API application for webhooks or OAuth

- A Twitter (X) developer account with API keys, usually OAuth 1.0a credentials for n8n

- Basic familiarity with how n8n nodes and expressions work

Alex already had an n8n instance running and both Strava and Twitter accounts in use. The missing piece was the developer setup, which became the first step in the journey.

Rising action: Alex builds the workflow

Creating the Strava application

Alex headed to the Strava developer portal and registered a new application. The portal returned a Client ID and Client Secret, which would be necessary to authenticate n8n and subscribe to Strava activity events.

Those credentials felt like a key to a locked door. With them, n8n could now “speak” to Strava and receive notifications whenever Alex completed a workout.

Wiring the Strava Trigger node

Inside the n8n editor, Alex added the first piece of the automation: the Strava Trigger node.

They configured it to listen for new activities:

- Event:

create - Object:

activity

Next, Alex connected their Strava OAuth2 credentials to the node using n8n’s credential manager. Once saved, the node was ready to receive data whenever Strava sent a webhook.

Strava’s webhook payload would arrive in a field called object_data, containing details like:

distancein metersnameof the activitymoving_timeand other metrics

For the first time, Alex could see the structure of their workouts in raw JSON. It was a bit intimidating, but it also meant complete control over what the future tweet would say.

Designing the tweet with the Twitter node

With the trigger in place, Alex added the second and final node: Twitter (create:tweet). This node was connected directly to the Strava Trigger, forming a simple two-node chain.

The key step was to build a tweet text that used values from the Strava payload. In the Twitter node’s text field, Alex used an n8n expression similar to this:

=`I ran ${Math.round($node["Strava Trigger"].json["object_data"]["distance"] / 100)/10} km and completed my ${$node["Strava Trigger"].json["object_data"]["name"]}! #Strava`A few important details immediately stood out to Alex:

- Strava reports distance in meters, so the expression divided by 1000 (via a simple transformation) and rounded to one decimal place.

- The activity name came directly from

object_data["name"], making each tweet feel personal and specific. - Hashtags like

#Stravacould be customized or expanded with mentions, team tags, or branded phrases.

With this expression, Alex no longer had to think about formatting. Every time Strava sent a new activity, the tweet text would be built automatically using the latest data.

Authenticating Twitter securely

The last configuration step was to give n8n permission to post tweets on Alex’s behalf. In the Twitter node, Alex added their Twitter OAuth1 credentials through n8n’s credential manager.

Instead of storing keys in plain text, n8n kept them encrypted and reusable across workflows. That added a layer of security and made Alex feel more comfortable letting an automation access their Twitter account.

The turning point: the first automated tweet

With the workflow active, Alex did what any curious runner would do: they went for a test run.

Back home, sweaty and curious, Alex opened Strava and saved the activity. Within moments, the n8n workflow received the webhook. The Strava Trigger node passed the payload to the Twitter node, which evaluated the expression and sent off a formatted tweet.

When Alex refreshed their Twitter feed, there it was:

I ran 10.3 km and completed my Evening Run! #Strava

No manual typing, no copy-paste, no delay. The automation had quietly done the work in the background.

Under the hood: the n8n workflow JSON

Curious to understand how everything fit together, Alex examined the minimal workflow JSON that powered this automation. If you want to replicate the same setup, you can import a similar JSON into your own n8n instance and adjust the credentials:

{ "nodes": [ { "name": "Strava Trigger", "type": "n8n-nodes-base.stravaTrigger", "parameters": {"event": "create", "object": "activity"}, "credentials": {"stravaOAuth2Api": "strava"} }, { "name": "Twitter", "type": "n8n-nodes-base.twitter", "parameters": { "text": "=I ran {{$node[\"Strava Trigger\"].json[\"object_data\"][\"distance\"]}} meters and completed my {{$node[\"Strava Trigger\"].json[\"object_data\"][\"name\"]}}!" }, "credentials": {"twitterOAuth1Api": "twitter-account"} } ], "connections": {"Strava Trigger": {"main": [[{"node":"Twitter","type":"main","index":0}]]}}

}

Alex used this as a starting point, then refined the expression to convert meters to kilometers and tweak the copy. The structure, however, remained the same: a Strava Trigger node wired directly to a Twitter node.

When things go wrong: Alex’s troubleshooting journey

Of course, not everything worked perfectly on the first try. Along the way, Alex ran into a few common issues and learned how to fix them.

- No trigger events arriving

The workflow sat idle after a workout. Alex discovered that the Strava webhook subscription needed to be active and that thewebhookIdin the Strava app had to match the n8n endpoint. Once updated, events started flowing again. - Tweet failing silently

When a test tweet did not appear, Alex checked the Twitter node logs. The root cause turned out to be invalid or outdated Twitter credentials. After updating OAuth1 keys and checking rate limits and tweet length, the node worked reliably. - Strange or incorrect values in the tweet

At one point, the tweet showed raw meters without conversion. To debug, Alex inserted aFunctionnode between Strava Trigger and Twitter, logging the fullobject_datapayload. This made it easier to see which fields were available and how to reference them correctly.

Security, privacy, and what Alex chose to share

As the automation became part of Alex’s daily training, a new question appeared: “What am I actually sharing with the world?”

Strava activities can include location data and map information. Alex did not always want to reveal exact routes or home addresses. To stay safe, they decided to:

- Exclude any map URLs or GPS-based fields from the tweet text

- Use n8n’s credential manager to store OAuth tokens securely

- Limit access to the n8n instance to trusted users only

With these safeguards in place, the automation highlighted performance stats without exposing sensitive details.

Leveling up: how Alex customized the workflow

Once the basic Strava to Twitter automation was running smoothly, Alex started to see new possibilities. The original two-node flow became a foundation for more advanced customizations.

Filtering which activities get posted

Not every workout needed to be public. Recovery jogs and short commutes could stay private. Alex added a Filter node between Strava Trigger and Twitter to enforce simple rules, such as:

- Only tweet runs or rides above a certain distance

- Exclude specific activity types like “Commute”

- Use tags or metadata to decide what should be shared

Adding photos and richer content

To make tweets more engaging, Alex experimented with attaching images. They used an HTTP Request node to call Strava’s API for detailed activity data and photos, then configured the Twitter node to upload those images alongside the text.

This small enhancement made the feed more visual and increased engagement from followers.

Scheduling and pacing tweets

On race days or heavy training weeks, Alex preferred not to flood followers with multiple posts in a short time. To handle this, they tested queueing tweets through tools like Buffer or by adding scheduling logic inside n8n.

Instead of posting instantly, some tweets were delayed or batched, creating a more balanced presence on Twitter.

Refining metrics for storytelling

As a data enthusiast, Alex could not resist improving how metrics appeared in tweets. They adjusted expressions to format pace, moving time, and distances in a more readable way, turning raw numbers into friendly, shareable stats.

Best practices Alex learned along the way

After several weeks of running this Strava to Twitter automation in n8n, Alex had a short list of best practices to keep things smooth and follower friendly:

- Limit auto-posting frequency so your feed does not feel like spam

- Use consistent templates and hashtags so your posts are recognizable

- Test thoroughly with a private or test Twitter account before going live

- Review Strava privacy settings and tweet content to avoid oversharing

The resolution: automation as a quiet training partner

Weeks later, Alex noticed something subtle but important. Training logs on Strava and posts on Twitter were finally in sync. Every meaningful run or ride appeared on Twitter with clean formatting, accurate stats, and on-brand hashtags.

The workflow itself remained tiny: just a Strava Trigger node and a Twitter node, with optional filters and enhancements layered on top. Yet the impact on Alex’s routine was huge. No more forgotten race recaps, no more rushed posts after long runs, and more time to focus on training instead of typing.

If you want to follow the same path as Alex, you can:

- Import the template JSON into your n8n instance

- Connect your Strava and Twitter credentials through the credential manager

- Customize the tweet expression, filters, and any extra nodes you need

- Enable the workflow and let your next workout be the first automated test

From that point on, your n8n workflow becomes a silent partner, turning every new Strava activity into a polished tweet without you lifting a finger.

Call to action: Import the template, enable the workflow, and share one of your automated tweets. Tag us or subscribe if you want more n8n automation stories and templates.

Build a TV Rating Trend Report with n8n & LangChain

How a Tired Analyst Turned TV Ratings Chaos Into an Automated Trend Report With n8n & LangChain

The night everything broke

By the time Mia hit “send” on the last TV rating report of the day, it was already 11:42 p.m.

She stared at the spreadsheet on her screen, eyes blurring over columns of ratings, shares, timestamps, and notes from a dozen regions. Programming directors were waiting on her trend summary for the flagship show, Evening News, and she had just noticed a possible spike during a special election segment. To confirm it, she had to:

- Dig through old CSV files

- Search emails for past anomalies

- Manually copy-paste numbers into a Google Sheet

- Try to remember if they had seen something similar a few months ago

Every step was manual, slow, and fragile. One wrong paste or missed filter, and her report could be off. Worse, she knew that by the time she sent it, the data was already stale.

That night, after yet another last-minute request for “a quick week-over-week comparison,” Mia closed her laptop and thought, This has to be automated. There has to be a better way to build TV rating trend reports.

The search for a smarter TV rating workflow

The next morning, Mia wrote down what her ideal system would do. If she could redesign her workflow from scratch, it would:

- Ingest TV rating data in real time, without manual uploads

- Understand the meaning of notes and segments, not just numbers

- Find similar past events automatically, instead of forcing her to remember dates

- Summarize trends and anomalies in plain language

- Log everything neatly into Google Sheets for stakeholders

In other words, she wanted a pipeline that could turn raw TV rating events into actionable, contextual trend reports, with as little human effort as possible.

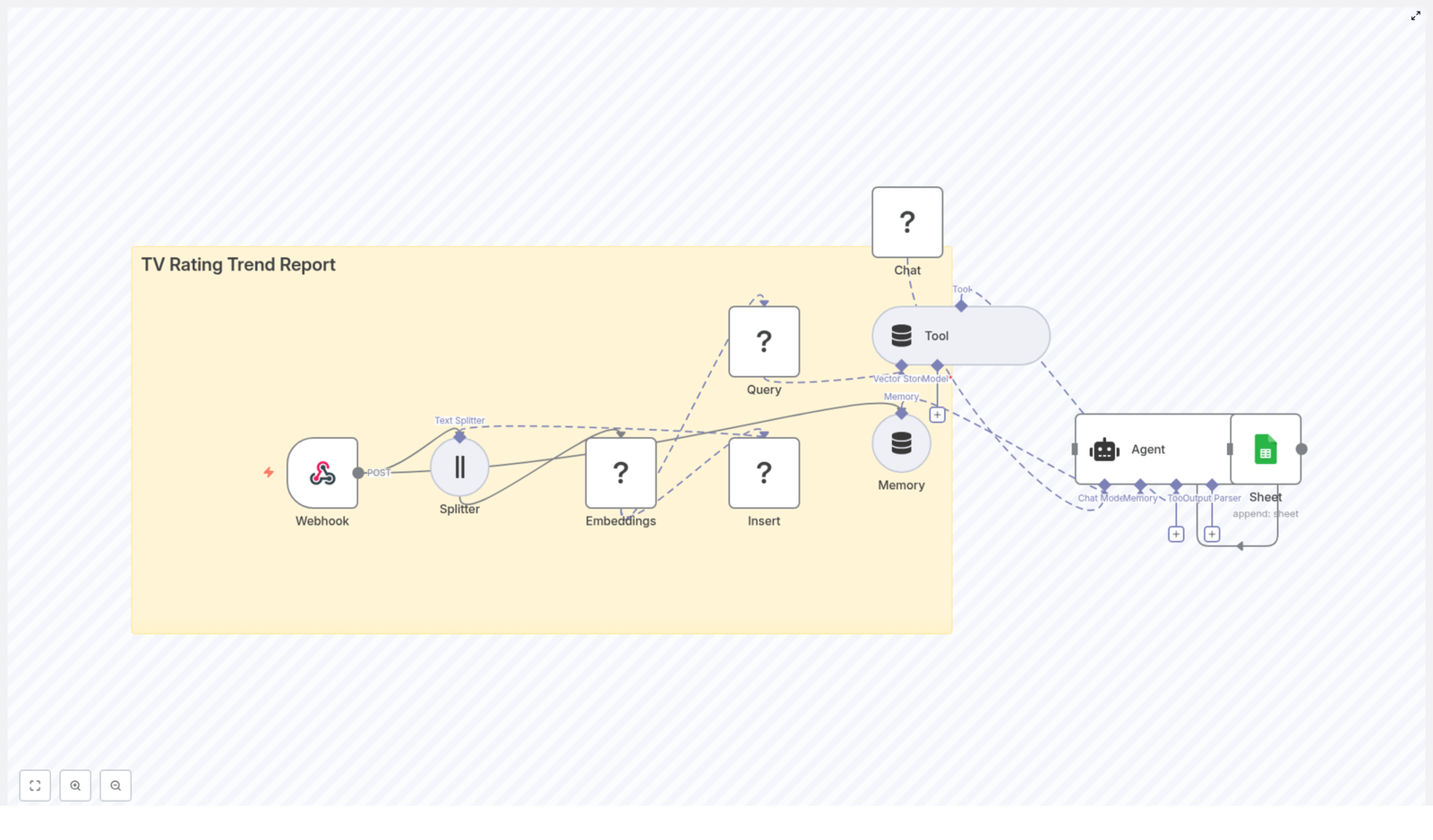

While researching workflow automation, she stumbled across an n8n workflow template titled “TV Rating Trend Report”, built with n8n + LangChain. The description sounded almost exactly like the system she had just described on paper.

Discovering the n8n TV rating trend report template

The template promised an automated pipeline that would:

- Use a webhook to ingest TV rating data in real time

- Split long notes into manageable chunks with a Text Splitter

- Create Hugging Face embeddings for semantic search

- Store vectors in a Redis vector store for similarity queries

- Use a memory buffer and LangChain-style agent to generate context-aware insights

- Automatically write final trend summaries to Google Sheets

It was not just a script. It was a full workflow that could take her from an incoming rating event to a structured, contextualized trend report, without manual intervention.

Mia decided to try it with a small pilot: one week of data for Evening News.

Rising action: wiring the pipeline together

Step 1: Giving the data somewhere to land – the webhook

The first thing Mia needed was a way to get her TV monitoring system to talk to n8n. The template started with a Webhook node, configured to accept POST requests.

She set up the webhook in n8n and pointed her rating system to send payloads like this:

{ "show": "Evening News", "timestamp": "2025-09-25T20:00:00Z", "rating": 3.8, "share": 12.4, "region": "Metro", "notes": "Special segment on elections"

}

Now, every time a new rating event arrived, n8n would catch it instantly. No more CSV exports, no more drag-and-drop uploads.

Step 2: Teaching the system to read long notes

Mia knew that the real story often lived in the notes field. “Special segment on elections,” “guest appearance from celebrity anchor,” “breaking weather alert” – those were the details that explained spikes and dips.

The template used a Text Splitter node to handle long notes or combined reports. It was configured as a character-based splitter with:

chunkSize: 400chunkOverlap: 40

That meant if a note or narrative ran long, it would be broken into overlapping chunks of about 400 characters, with 40 characters of overlap to preserve context. For Mia’s medium-length notes, it was a sensible starting point.

She made a small mental note: if her notes ever got very short, she could reduce the overlap to save storage. And if she ever started sending structured JSON, she could serialize only the human-readable parts for embeddings.

Step 3: Turning words into vectors with Hugging Face embeddings

Next, the template attached an Embeddings node powered by Hugging Face. This was where the magic of semantic search began.

The node took each text chunk and converted it into a vector embedding, a numeric representation of meaning. With the right model, the system could later ask questions like “show me events similar to this spike” and retrieve relevant past episodes, even if the wording was different.

In the template, the model was set to default. Mia updated her n8n credentials to reference a specific Hugging Face model from the sentence-transformers family, which is commonly used for semantic search. That gave her more predictable quality and relevance.

Step 4: Storing history in Redis for similarity search

Embeddings were useful only if she could store and query them efficiently. The template used a Redis vector store, with an index called:

tv_rating_trend_report

Each time a new chunk arrived, the workflow would:

- Insert the vector into Redis

- Attach metadata such as show title, timestamp, rating, and region

This metadata was crucial. It meant Mia could later filter queries like:

- “Find similar events for this show in the last 7 days”

- “Retrieve anomalies for the Metro region only”

Her raw rating events were slowly turning into a searchable memory.

Step 5: Letting the agent look up context on demand

Once the data was flowing into Redis, Mia needed a way for the system to use it when generating reports. The template included a Query (Redis) node wrapped as a Tool.

When it was time to build a trend report, the agent could call this tool to:

- Fetch top-k similar chunks for a given show or time period

- Retrieve relevant past spikes, dips, or anomalies

Because the vector store was exposed as a tool, the agent could decide when to run a similarity search during its reasoning process. The logic stayed modular and transparent, and Mia could later inspect which context had been retrieved.

Step 6: Giving the workflow a memory and a voice

To make the analysis feel less like isolated snapshots and more like a continuous narrative, the template added two more pieces:

- A Memory (Buffer Window) node to keep recent interactions and summaries

- An Agent (LangChain-style) node connected to a chat model, the Tool, and the Memory

The memory buffer allowed the agent to remember what it had recently said about a show or a time period, instead of treating each report as a blank slate. The agent could then synthesize:

- Week-over-week changes

- Notable spikes and dips

- Context-aware commentary that referenced past events

Mia shaped the agent prompt using a simple pattern. She made sure it always received:

- The retrieval results (most similar chunks from Redis)

- Current metrics like rating and share

- Relevant memory or conversation context

Her prompt looked similar to this:

Analyze the current rating data and the retrieved historical context. Provide a one-paragraph trend summary and list any significant anomalies with probable causes.

It was concise, but clear about expectations: a short trend summary plus a list of anomalies and likely explanations.

Step 7: Sending everything where stakeholders actually live – Google Sheets

Finally, the agent needed somewhere to write its findings. Mia’s team relied heavily on a shared Google Sheet, where programming directors checked daily performance.

The workflow ended with a Google Sheets node that appended a new row to a sheet named Log. The agent was configured to output a structured set of fields, which mapped to columns like:

dateshowaggregated_ratingtrend_summarynoteslink_to_record

For a payload like this:

{ "show": "Evening News", "timestamp": "2025-09-25T20:00:00Z", "rating": 3.8, "share": 12.4, "region": "Metro", "notes": "Special segment on elections; high engagement on social"

}

She expected to see a row similar to:

[2025-09-25, Evening News, 3.8, +0.4 (wk), "Spike during special segment; similar to 2025-06-12 episode", link_to_record]

For the first time, the spreadsheet would update itself with both numbers and narrative.

The turning point: from manual grind to automated insight

A few days into her pilot, Mia watched the workflow run on a real spike.

There had been a special segment on elections during Evening News. Ratings ticked up, social engagement surged, and the monitoring system fired its usual payload into n8n.

Behind the scenes, the data flowed:

- The Webhook caught the POST request.

- The Text Splitter chunked the notes, using

chunkSize=400andchunkOverlap=40. - The Embeddings (Hugging Face) node turned chunks into vectors.

- The Insert (Redis Vector Store) node stored them with metadata under

tv_rating_trend_report. - When it was time to generate the report, the Query (Redis) + Tool retrieved similar past events.

- The Memory (Buffer Window) added recent context.

- The Agent pulled everything together into a trend summary.

- The Google Sheets node appended the final report to the

Logsheet.

By the time Mia opened the Google Sheet, the row was already there. The trend summary mentioned a spike similar to one during a past election episode, highlighted the week-over-week increase, and pointed to likely causes.

There were no frantic searches through old files, no late-night spreadsheet edits, and no guesswork about whether a spike was truly unusual.

Behind the scenes: tuning and best practices Mia learned

Choosing the right embedding model

Mia noticed early on that the quality of semantic search depended heavily on the embedding model. For broadcast text and short notes, she found that models from the sentence-transformers family, or other models optimized for semantic search, delivered more relevant similarity results than generic models.

By explicitly setting a model in her Hugging Face credentials instead of relying on the default, she kept the quality consistent across runs.

Adjusting chunk size and overlap

The default chunkSize=400 with chunkOverlap=40 worked well at first, but Mia experimented:

- When she saw some loss of context between chunks, she tried increasing overlap slightly.

- When storage costs grew due to very frequent events, she considered reducing chunk size or filtering out low-value notes.

She kept the rule simple: if the agent seemed to miss important context, increase overlap. If the vector store grew too large or expensive, reduce chunk size or be selective about what got embedded.

Making metadata do the heavy lifting

Early on, Mia realized that good metadata was not optional. Every vector insert into Redis included:

showregionratingtimestamp

With those fields, she could run filtered queries like “same show, last 7 days” or “same region, similar notes.” Retrieval quality improved dramatically once she combined vector similarity with basic metadata filters.

Keeping credentials safe

Mia also took care to secure her integrations. Inside n8n, she stored:

- Hugging Face API keys

- Redis credentials

- Google Sheets OAuth tokens

All were kept in n8n’s credentials manager, with least-privilege access and periodic key rotation. It was a small step that avoided big security headaches later.

Building resilience with error handling and monitoring

As the workflow moved toward production, Mia added guardrails:

- Retry logic for transient network issues and rate limits

- Error handlers for failed Google Sheets appends

- Monitoring for webhook latency and queue sizes

If a single step failed, the entire reporting process would not silently break. She could see what went wrong and fix it quickly.

Scaling up: from one show to the entire schedule

Once the pilot for Evening News ran smoothly, Mia expanded the workflow to cover more shows. As volumes increased, she learned a few tricks to control cost and keep performance stable.

Handling large volumes of rating events

For high-traffic schedules, Mia:

- Used batch inserts into Redis instead of writing every event individually

- Applied a cheaper embedding model for routine data, reserving a higher-quality model for anomalies or priority shows

- Periodically archived or downsampled old vectors, especially for periods where fine-grained history was less critical

The result was a scalable vector store that did not balloon uncontrollably.

Real-world ways Mia used the workflow

Over the next few weeks, the “TV Rating Trend Report” workflow became part of Mia’s daily toolbox. Some practical use cases emerged:

- Daily trend summaries automatically written to a Google Sheet and emailed to programming directors

- Alerting logic when a show’s rating jumped by more than a set percentage week over week

- Semantic search across past episodes to find similar spikes or dips for editorial analysis

- Stakeholder-facing logs that updated themselves, with both numbers and narrative

What used to be late-night manual work turned into a mostly hands-off system that surfaced insights on its own.

Troubleshooting: the bumps along the way

Not everything worked perfectly from day one. Mia ran into a few issues and learned how to fix them:

- Irrelevant retrieval results

She checked whether metadata filters were set correctly and whether her embedding model was a good fit for short broadcast notes. Adjusting filters and upgrading the model improved relevance. - Agent hallucinations

When the agent occasionally inferred events that did not happen, she increased the

n8n: Add Google Drive File to Vector DB

n8n Template Reference: Add Google Drive File to Supabase Vector DB with OpenAI Embeddings

This documentation-style guide describes a production-ready n8n workflow template that ingests a document from Google Drive, extracts its textual content, generates OpenAI embeddings, and writes the resulting vectors and metadata into a Supabase vector table. It is intended for technical users who want a reliable, repeatable ingestion pipeline for knowledge retrieval, RAG/chatbots, and enterprise semantic search.

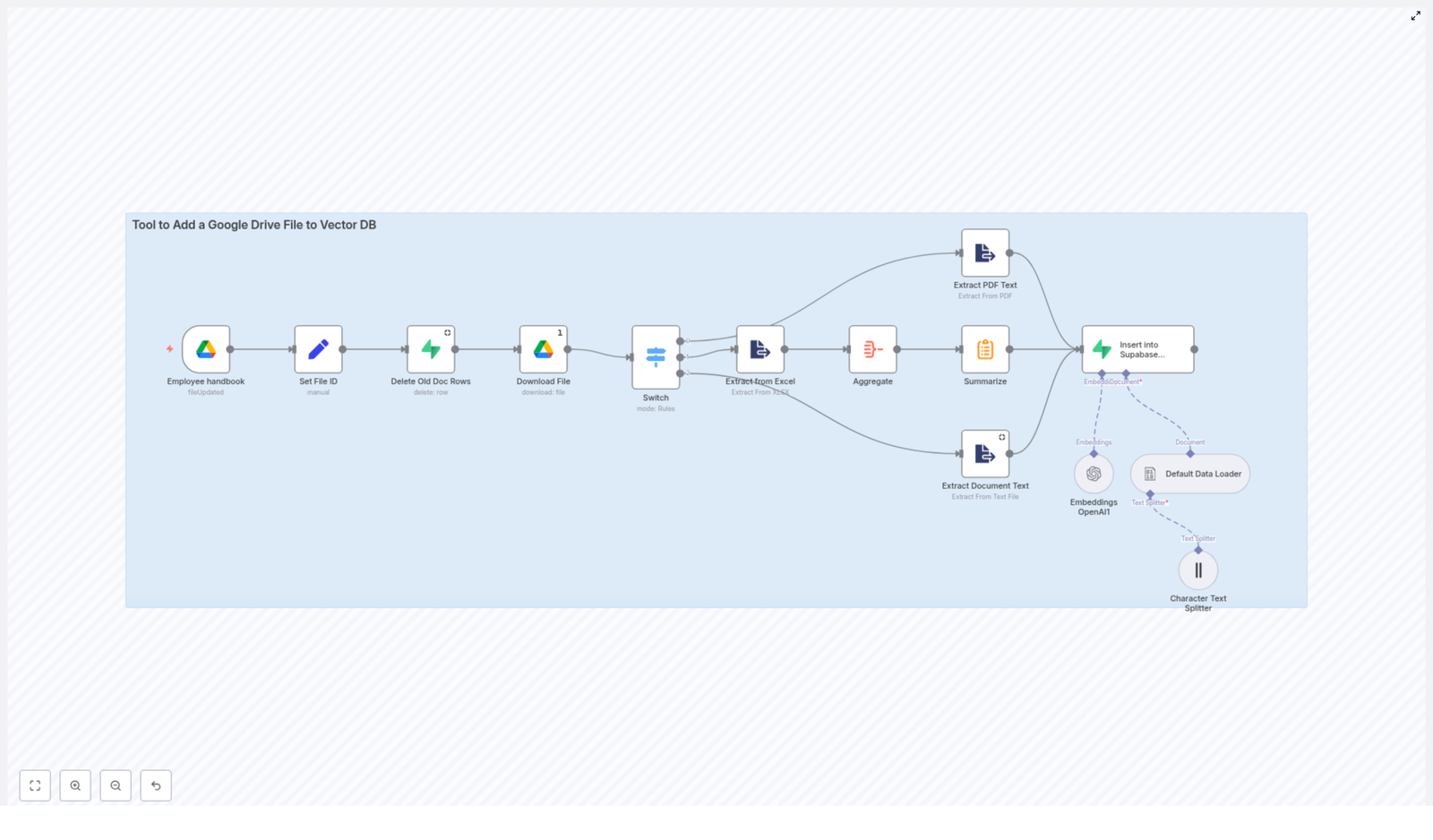

1. Workflow Overview

The template implements an end-to-end ingestion pipeline that:

- Watches a specific Google Drive file or folder for changes

- Downloads the updated file and normalizes metadata

- Deletes any previous vector records for that file in Supabase

- Performs file-type-specific text extraction (Google Docs, PDF, Excel, text)

- Aggregates and optionally summarizes long content

- Splits the text into embedding-friendly chunks

- Generates embeddings using an OpenAI model

- Persists vectors and metadata into a Supabase vector table

The flow is linear with a single entry trigger and a single write operation into Supabase, and it is designed to be idempotent at the file level by removing old rows before inserting new ones.

2. Architecture & Data Flow

2.1 High-level sequence

- Trigger: A Google Drive Trigger node fires on file updates.

- Normalization: A Set node standardizes the file ID and MIME type.

- Cleanup: A Supabase node removes prior vectors for the same file.

- Acquisition: A Google Drive node downloads the file content.

- Routing: A Switch node routes execution based on MIME type.

- Extraction: Format-specific nodes extract raw text.

- Aggregation & summarization: Text is aggregated and optionally summarized.

- Chunking: A Character Text Splitter node breaks content into chunks.

- Document construction: A Default Data Loader node builds document objects.

- Embedding: An OpenAI Embeddings node generates vector representations.

- Persistence: A Supabase node inserts vectors and metadata into a vector table.

2.2 Core technologies

- n8n – Orchestration engine and workflow runtime.

- Google Drive – Source of documents (Docs, PDFs, Excel, text files).

- OpenAI – Provider of text embeddings (for example,

text-embedding-3-small). - Supabase – Postgres with pgvector extension used as a vector database.

3. Node-by-node Breakdown

3.1 Google Drive Trigger (Employee handbook)

Role: Entry point for the workflow.

Behavior: Listens for updates to a file or folder in Google Drive and starts the workflow when a change is detected.

- Trigger type:

fileUpdated - Configuration:

- Specify either a single

fileIdor afolderId. - Set polling frequency according to how quickly you need updates reflected in the vector store.

- For deterministic behavior, target a specific file (for example, an “Employee handbook”) rather than a broad folder.

- Specify either a single

Output: Metadata about the updated file, including its ID and MIME type, which is consumed by downstream nodes.

3.2 Set File ID

Role: Normalize and expose key identifiers for later use.

Behavior: A Set node extracts and standardizes fields such as:

file_id– The canonical ID of the Google Drive file.mimeType– The MIME type reported by Google Drive.

These fields are stored as workflow variables and referenced throughout the pipeline, especially for routing logic and for metadata written to Supabase.

3.3 Delete Old Doc Rows (Supabase)

Role: Ensure that each file has a single, current set of vectors in Supabase.

Behavior: This Supabase node issues a delete operation against the target vector table. It filters rows by the file identifier stored in metadata.

- Typical filter: using a JSONB metadata column, such as:

metadata->>'file_id' = :file_id

This step prevents duplicate or stale vector entries after a file is updated. If the delete query fails or the file is not yet present, the workflow can still proceed, but you may end up with multiple versions unless the delete is corrected.

3.4 Download File (Google Drive)

Role: Retrieve the file bytes from Google Drive.

Behavior: The Google Drive node downloads the file content using the ID from the trigger.

- For Google Docs: Configure the node to export as

text/plainto simplify downstream text extraction. - For other formats (PDF, XLSX, plain text): The node downloads the raw file for processing by specific extractor nodes.

At this stage, the workflow has both file metadata and raw file content available to route and transform.

3.5 Switch (file-type routing)

Role: Direct file content to the correct extractor based on MIME type.

Behavior: A Switch node evaluates the mimeType value and routes execution to one of several branches.

application/pdf→ PDF extraction branchapplication/vnd.openxmlformats-officedocument.spreadsheetml.sheet→ Excel extraction branchapplication/vnd.google-apps.documentortext/plain→ document/text extraction branch

If a MIME type does not match any configured case, the workflow will not have a valid extraction path. In that situation, add a default branch that logs or handles unsupported formats to avoid silent failures.

3.6 Extractors (PDF, Excel, Text)

Role: Convert different file formats to a normalized text representation.

Behavior by branch:

- PDF extraction:

- Uses a PDF extraction node or module to read selectable text.

- Output is typically a set of text segments or pages.

- If the PDF is image-only, you must enable OCR or use a dedicated PDF OCR node; otherwise, text may be missing.

- Excel extraction:

- Reads worksheets and converts rows into text representations.

- Rows are usually aggregated later into larger text blocks to avoid embedding each row individually.

- Text / Google Docs extraction:

- For Google Docs exported as

text/plain, the node works with plain text content. - For native text files, content is already suitable for direct processing.

- For Google Docs exported as

The output of all extractor branches is raw text, ready for aggregation, summarization, and chunking.

3.7 Aggregate & Summarize

Role: Consolidate granular extractions and generate optional summaries.

Behavior:

- Aggregation:

- For Excel, multiple rows are concatenated into a single field or a small number of larger segments.

- For PDFs or text documents, this step can merge smaller text fragments into cohesive sections.

- Summarization:

- Runs a lightweight summarization step to produce a short description or abstract of the document or segment.

- The summary is stored as metadata and improves retrieval quality by providing a quick overview for ranking or display.

Even if you do not rely heavily on summaries at query time, they are valuable for debugging, result previews, and manual inspection of vector records.

3.8 Character Text Splitter

Role: Prepare text for embedding by splitting it into manageable chunks.

Behavior: The Character Text Splitter node divides long content into overlapping segments. Typical parameters include:

- Chunk size: Approximately 1,000 to 2,000 characters.

- Overlap: A smaller overlap (for example, 200 characters) to preserve context across adjacent chunks.

This approach improves embedding quality and semantic search performance by maintaining local context while respecting embedding model token limits.

3.9 Default Data Loader

Role: Convert raw text chunks into document objects with structured metadata.

Behavior: The Default Data Loader node wraps each chunk in a document-like structure that includes:

- Content: The text chunk itself.

- Metadata:

file_idfilenamemimeType- Optional page or section indicators

- Summary or abstract, if available

- Chunk index or position within the original document

These document objects form the direct input to the Embeddings node, ensuring consistent structure for all chunks regardless of source format.

3.10 Embeddings OpenAI

Role: Generate vector embeddings for each text chunk.

Behavior: The OpenAI Embeddings node calls an OpenAI embeddings model for each document object.

- Typical model:

text-embedding-3-small(or another supported embedding model). - Credentials: Requires a valid OpenAI API key configured in n8n credentials.

Output: An array of floating-point numbers representing the embedding vector for each chunk. These vectors are aligned with the dimension of your pgvector column in Supabase.

If the model changes, verify that the new vector dimensionality matches the schema of your Supabase vector column to avoid insertion errors.

3.11 Insert into Supabase Vectorstore

Role: Persist embeddings and metadata into Supabase for vector search.

Behavior: The final Supabase node inserts one row per chunk into the configured vector table.

- Target table: In this template, the table is named

policy_doc. - Columns: A typical schema includes:

id(primary key)embedding(pgvector column)content(text of the chunk)metadata(JSON or JSONB, containing file_id, filename, mimeType, summary, chunk_index, etc.)created_at(timestamp)

- Vector search: The table can be queried using functions such as

match_documentsor other pgvector-compatible search patterns.

By storing rich metadata, you can filter or re-rank results based on source file, department, or other contextual fields during retrieval.

4. Configuration & Credentials

4.1 Google Drive OAuth2

- Configure OAuth2 credentials in n8n with access to the relevant Drive or shared drive.

- Ensure the scopes include read access for the files you want to ingest.

- Limit access to only the necessary files/folders for security and least privilege.

4.2 Supabase

- Provide Supabase API keys (service role or appropriate key) to allow insert and delete operations.

- Enable and configure the pgvector extension in the target database.

- Ensure the vector column dimension matches the embedding model dimension.

4.3 OpenAI

- Set up an OpenAI credential in n8n with an API key that has access to the chosen embeddings model.

- Confirm usage limits and rate limits are compatible with your expected ingestion volume.

4.4 n8n Runtime

- Allocate sufficient memory for handling large documents, especially PDFs and multi-sheet Excel files.

- Adjust execution timeout settings if you expect long-running extractions or large batch uploads.

5. Best Practices & Optimization

- Rate limiting:

- Batch embedding requests where possible.

- Add small delays between calls to avoid hitting OpenAI rate limits during bulk ingestion.

- Chunk configuration:

- Use chunk sizes that balance context and token constraints, for example:

- 500 to 1,000 words, or

- 1,000 to 2,000 characters with around 200-character overlap.

- Use chunk sizes that balance context and token constraints, for example:

- Metadata quality:

- Always capture

file_id, source URL or Drive link, and a summary field. - Consistent metadata improves debugging, traceability, and search filtering.

- Always capture

- Schema consistency:

- Keep a uniform schema in Supabase, for example:

id,embedding(vector),content,metadata(JSON),created_at.

- Avoid ad hoc schema changes that can break downstream query logic.

- Keep a uniform schema in Supabase, for example:

- Incremental updates:

- Always delete or mark old rows for a file before inserting new vectors.

- This template uses a delete-by-file_id pattern to keep the vector store clean.

6. Troubleshooting & Edge Cases

- Missing text from PDFs:

- Verify that the PDF text is selectable. If it is image-only, enable OCR or swap to a PDF OCR node.

- Without OCR, embeddings will be incomplete or empty.

- Credential errors:

- Re-authorize Google Drive, OpenAI, or Supabase credentials if nodes start failing with permission-related errors.

- Confirm scopes and roles allow the required read/write operations.

- Large files failing:

- Increase n8n execution timeouts and memory limits.

- Consider pre-splitting very large documents or limiting maximum file size.

- Embedding mismatches:

- Check that the embedding model you use matches the vector dimension configured in your pgvector column.

- If you change models,

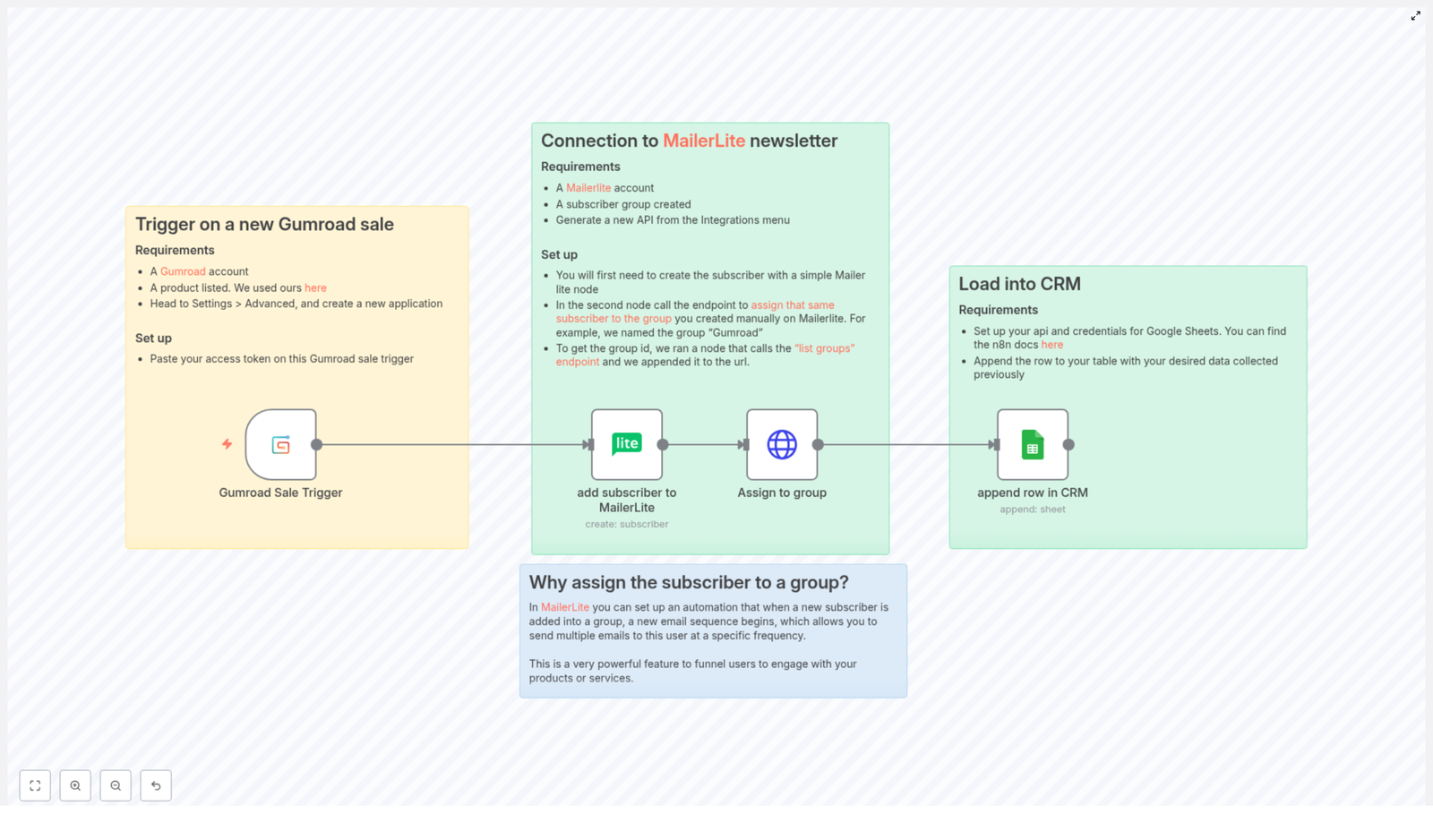

Automate Gumroad Sales to MailerLite & Google Sheets with n8n

Automate Gumroad Sales to MailerLite & Google Sheets with n8n

Manually copying every new Gumroad buyer into your email list and spreadsheet is a special kind of torture. You open Gumroad, copy the email, paste it into MailerLite, then into Google Sheets, then swear you will “totally automate this someday.” This guide is that someday.

We will walk through an n8n workflow that quietly does all the boring stuff for you in the background. Every sale becomes:

- a new subscriber in MailerLite,

- assigned to the right MailerLite group, and

- logged as a neat little row in your Google Sheets CRM.

You sip coffee, n8n does the admin. Fair trade.

What this n8n workflow actually does

Here is the big picture of the automation you will build. Whenever a new Gumroad sale happens, your n8n workflow will:

- Listen for a Gumroad sale using a

Gumroad Triggernode. - Create a subscriber in MailerLite with the buyer’s email and country.

- Add that subscriber to a MailerLite group using the MailerLite API.

- Append a row to Google Sheets with sale timestamp, email, country, and product name.

The result is a fully automated flow from “someone bought your thing” to “they are in your email system and CRM, ready for follow-up and future marketing.”

Why bother automating Gumroad sales?

Aside from protecting your sanity, automating Gumroad sales with n8n gives you a few big advantages:

- Better follow-up – Instantly enroll buyers in MailerLite sequences, onboarding, or support flows.

- Always up-to-date CRM – Keep a searchable purchase log in Google Sheets without ever touching the keyboard.

- Hands-free marketing – Trigger upsells, nurture campaigns, and segmenting based on what people bought and where they are from.

Once this is running, every sale automatically plugs into your marketing and tracking system. No more “I forgot to add them to the list” moments.

What you need before starting

Before you fire up n8n, make sure you have these pieces ready:

- An n8n instance (cloud or self-hosted).

- A Gumroad account with an API access token.

- A MailerLite account plus your MailerLite API key.

- A Google account with:

- a Google Sheets file to act as your CRM, and

- Google credentials configured in n8n.

- A MailerLite group created for Gumroad buyers (you will need its

group_id).

Once you have those, you are ready to build the workflow or plug in the template.

Step-by-step: building the workflow in n8n

Step 1 – Catch new sales with a Gumroad Trigger node

First, you need n8n to know when someone buys from you on Gumroad.

- Add a Gumroad Trigger node to your workflow.

- Configure the webhook to listen for sale events.

- In the node credentials, paste in your Gumroad access token so n8n can validate incoming events.

From now on, every new Gumroad sale will fire this workflow without you lifting a finger.

Step 2 – Create the MailerLite subscriber

Next, you want your new buyer to land in MailerLite automatically.

- Add a MailerLite node right after the Gumroad Trigger.

- Set the operation to create subscriber.

- Map the fields from the Gumroad payload to MailerLite fields using n8n expressions.

Typical field mappings:

- Email:

{{$json.email}} - Custom field – country:

{{$json.ip_country}}

You can add extra custom fields if you like, but at minimum, email and a couple of useful attributes are enough to get started.

Step 3 – Add the subscriber to a MailerLite group

MailerLite works best when you use groups to trigger automations. So after creating the subscriber, you want to drop them into the right group.

To do that, use either:

- an HTTP Request node, or

- the MailerLite node if your version already supports group operations.

With the HTTP Request node, configure a POST request to:

https://connect.mailerlite.com/api/subscribers/{subscriber_id}/groups/{group_id}Where:

{subscriber_id}is theidreturned from the previous MailerLite node (the one that created the subscriber).{group_id}is the id of your Gumroad buyers group in MailerLite.

In n8n, use expression syntax to construct the URL and pass your MailerLite credentials so the call is authenticated. Once this is set, every new buyer gets instantly assigned to that group and can enter your automations.

Step 4 – Log the sale in Google Sheets

Now for your lightweight CRM. You want a simple, filterable history of every sale in Google Sheets.

- Add a Google Sheets node to the workflow.

- Choose the operation to append a row.

- Select your CRM sheet and map the values coming from the Gumroad Trigger node.

Typical columns and mappings:

- date: sale timestamp (convert the format if needed).

- email: buyer email.

- country: buyer country.

- product name:

product_namefrom Gumroad.

You end up with a clean historical log you can sort, filter, or export whenever you feel like analyzing your sales or bragging about them.

How to test your Gumroad – n8n – MailerLite – Sheets setup

Before trusting this workflow with your entire business, give it a proper test run.

- Activate the workflow in n8n so it listens for real events.

- Trigger a test sale:

- Either make a low-priced test purchase on Gumroad, or

- Use Gumroad’s webhook test tooling if available.

- Check MailerLite:

- Confirm the new subscriber appears in your audience.

- Verify they are assigned to the correct group.

- Open your Google Sheet:

- Make sure a new row was added.

- Check that the timestamp, email, country, and product name look correct.

If everything looks good, your manual copy-paste job has officially been retired.

Error handling, reliability, and best practices

APIs sometimes misbehave, networks get grumpy, and rate limits are a thing. A few tweaks in n8n can make your workflow much more robust.

- Retry logic: Enable n8n’s retry options on nodes that call external APIs (Gumroad, MailerLite, Google). This helps with temporary failures so a single bad request does not break your whole flow.

- Idempotency: Avoid creating duplicate MailerLite subscribers. Use MailerLite’s API features to search for existing subscribers or add logic in n8n to check if the email already exists before creating a new one.

- Respect rate limits: Gumroad and MailerLite both have rate limits. If you expect bursts of sales (nice problem to have), consider batching or throttling requests in n8n.

- Logging and debugging: Store the raw webhook payload in a separate Google Sheet or logging service. This makes it much easier to debug weird edge cases later.

Privacy, consent, and compliance

When you are collecting buyer data and sending it into tools like MailerLite and Google Sheets, you also need to stay on the right side of privacy regulations such as GDPR and CCPA.

Make sure to:

- Include clear privacy notices on your product pages.

- Get proper opt-in consent for marketing emails where required.

- Double-check that your MailerLite automations respect unsubscribe requests automatically.

It is your responsibility to configure everything in a compliant way, so take a moment to verify your setup before going all-in on automated sequences.

Troubleshooting common issues

If something does not work on the first try, you are not alone. Here are some quick checks:

- No webhook events arriving: Confirm that the Gumroad webhook is correctly configured and that your n8n webhook URL is publicly reachable.

- Subscriber not created in MailerLite: Open the n8n execution log, inspect the incoming Gumroad payload, and verify all required fields for the MailerLite node are mapped correctly.

- Wrong or missing MailerLite group: Use the MailerLite API to list groups and confirm you are using the correct

group_idin your HTTP Request or MailerLite node.

Most problems come down to a typo, a missing field, or a credential that did not save correctly, so the execution log is your best friend here.

Advanced ways to level up this workflow

Once the basic Gumroad to MailerLite to Google Sheets flow is running smoothly, you can start getting fancy.

- Enrich buyer data: Before adding people to MailerLite, call a geo-IP or enrichment API to add extra fields like city, company, or more detailed location.

- Conditional sequences: Add logic in n8n to route buyers to different MailerLite groups depending on:

- which product they bought, or

- which country they are in.

- Sync with a full CRM later: Keep using Google Sheets as a simple CRM, then later connect it (or your n8n workflow) to tools like Pipedrive or HubSpot when you are ready to upgrade.

n8n gives you a lot of room to grow, so this starter workflow can easily become the backbone of a more sophisticated automation setup.

Wrapping up

By wiring up Gumroad, n8n, MailerLite, and Google Sheets, you turn every sale into an automated chain of useful actions: subscriber creation, group assignment, and CRM logging, all without you opening a single tab.

This is a low-cost, high-impact upgrade to your creator toolkit. You improve customer experience, stay organized, and free up your time for making products instead of maintaining spreadsheets.

Ready to set this up? Fire up your n8n instance, follow the steps above, and run a test sale to make sure everything is connected. After that, you can safely retire your copy-paste routine.

If you want help customizing this workflow, like adding conditional routing or integrating with a dedicated CRM, reply with the behavior you want and I will share a tailored n8n workflow configuration.

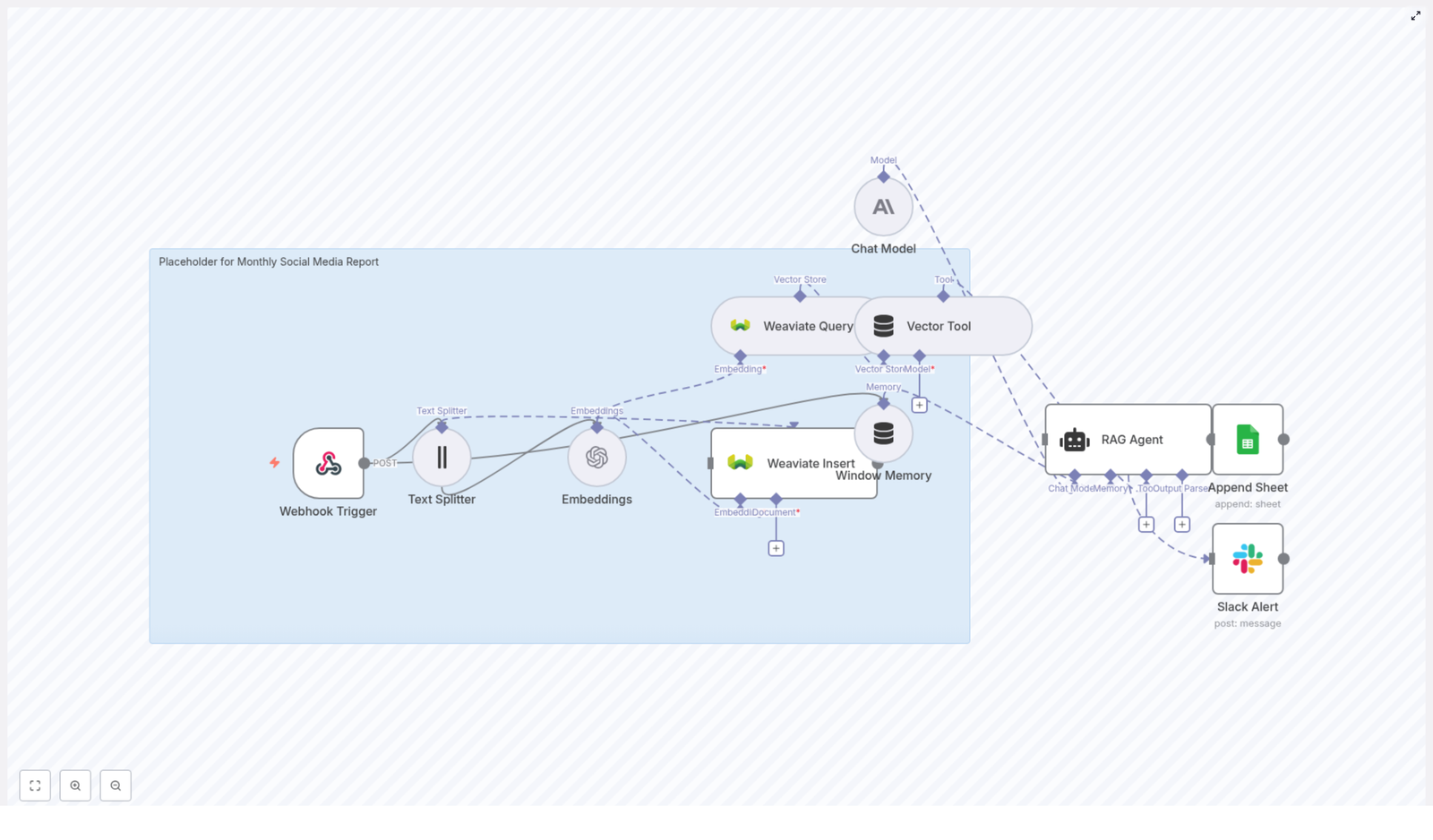

Automate Monthly Social Reports with n8n & Weaviate

Automate Monthly Social Media Reports with n8n & Weaviate

If you’re tired of cobbling together monthly social media reports by hand, you’re not alone. Copying metrics, digging through past posts, trying to add “insights” at the last minute… it gets old fast.

The good news: you can hand most of that work off to an n8n workflow that does the heavy lifting for you. This setup takes raw report data, chunks and embeds it, stores it in Weaviate, uses a RAG (retrieval-augmented generation) agent to add context and insights, then logs everything neatly in Google Sheets, with Slack alerts if anything breaks.

Let’s walk through what this template does, when it’s worth using, and how each part fits together, in plain language.

What this n8n workflow template actually does

At a high level, this workflow turns raw social media data into a structured, insight-rich monthly report. Here’s the journey your data takes:

- It arrives at an n8n Webhook as a POST request.

- The content is split into smaller pieces with a Text Splitter.

- Each chunk is turned into a vector via an Embeddings node using OpenAI.

- Those vectors and their metadata are stored in a Weaviate index.

- When you want a report, a Vector Tool queries Weaviate for relevant context.

- A RAG Agent with Window Memory and an Anthropic chat model uses that context to generate insights.

- The final report is written into a Google Sheets “Log” sheet.

- If anything fails, a Slack Alert pings you in a channel like

#alerts.

So instead of hunting through spreadsheets and past posts, you get a repeatable, context-aware monthly report that’s ready to share with your team.

Why automate monthly social media reports at all?

Let’s be honest: manual reporting is not the best use of anyone’s time. Automation helps you:

- Save hours every month by skipping copy-paste work and manual summaries.

- Reduce errors from typos, missed posts, or inconsistent formulas.

- Stay consistent in how you track metrics and present insights.

- React faster to trends because your data and insights are always up to date.

By combining vector search with a RAG agent, your reports also get smarter. The workflow can look back at historical posts, pull in similar content, and tailor insights to your brand’s actual performance instead of generating generic advice.

When this template is a good fit

You’ll get the most value from this n8n workflow template if:

- You already collect social media metrics monthly and want to streamline the process.

- You have content that benefits from historical context, like comparing performance month over month.

- You want a single source of truth in Google Sheets that other tools or dashboards can use.

- You’re comfortable using APIs and have access to OpenAI, Weaviate, Anthropic, Google Sheets, and Slack.

If that sounds like your setup, this workflow can become your “reporting assistant” that runs quietly in the background.

How the workflow is structured

Here’s a more detailed look at the main components and how they work together inside n8n.

1. Webhook Trigger – your data entry point

Everything starts with a Webhook node in n8n. You configure it with a POST path like: