Automated Job Application Parser with n8n & RAG

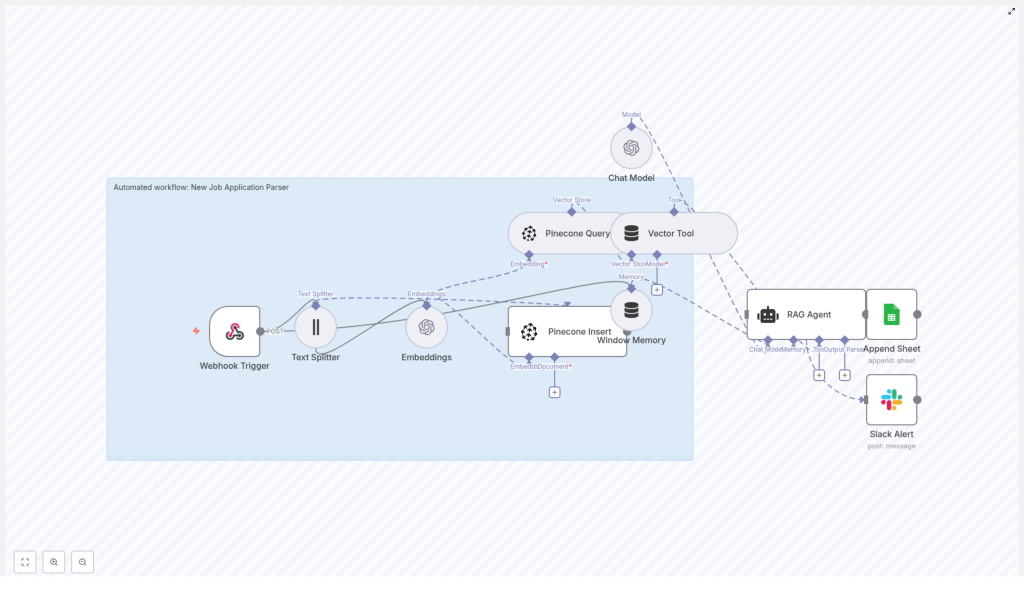

Streamline how your hiring team ingests and processes candidate applications by building an automated job application parser using n8n, OpenAI embeddings, and a vector database (Pinecone). This guide explains the components, configuration tips, privacy considerations, and extension ideas for the workflow pictured above.

Why build an automated job application parser?

Manual processing of resumes and cover letters is slow, error-prone, and inconsistent. An automated parser accelerates screening, centralizes candidate data, and lets your recruiting team focus on high-value decisions. Embedding the application text and storing the vectors in a vector store enables semantic search over application content, so you can find relevant skills and experience even when the wording differs. A Retrieval-Augmented Generation (RAG) agent then grounds its output in the passages it retrieves.

High-level workflow overview

The example workflow receives job applications via a webhook, splits and embeds the extracted text, stores the embeddings in Pinecone, and runs a RAG agent that produces structured output. That output is appended to Google Sheets, and a Slack alert is sent if the agent fails. Key components include:

- Webhook Trigger: receives the raw application payload.

- Text Splitter: chunks long documents into manageable pieces for embeddings.

- Embeddings: creates vector representations using OpenAI’s embedding model.

- Pinecone Insert & Query: stores and queries embeddings for later retrieval.

- Window Memory & Vector Tool: provides context and tools for the RAG agent.

- RAG Agent (Chat Model): synthesizes context and produces a structured response.

- Append Sheet: logs the agent output to Google Sheets for tracking.

- Slack Alert: sends an error notification if the agent fails.

Component-by-component breakdown

1. Webhook Trigger

Configure an n8n Webhook node to accept POST requests from your application intake source (career site forms, an email-to-webhook service, or your ATS). Expose the endpoint only over HTTPS and validate incoming requests with a shared secret or a request signature to reject unauthorized submissions.
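If your intake source signs each request, verification comes down to recomputing an HMAC over the raw request body and comparing it in constant time. The sketch below assumes a hex-encoded HMAC-SHA256 signature and a shared secret; the actual signature scheme, and where the signature travels (typically a request header), depend on your intake source, so treat both as assumptions. In n8n, equivalent logic could run in a Code node placed right after the webhook.

```python
import hashlib
import hmac


def sign_payload(secret: str, body: bytes) -> str:
    """Return the hex-encoded HMAC-SHA256 of the raw request body."""
    return hmac.new(secret.encode("utf-8"), body, hashlib.sha256).hexdigest()


def verify_signature(secret: str, body: bytes, received_signature: str) -> bool:
    """Accept the request only if the received signature matches the expected one."""
    expected = sign_payload(secret, body)
    # compare_digest compares in constant time, which avoids leaking timing information
    return hmac.compare_digest(expected, received_signature.strip().lower())


if __name__ == "__main__":
    secret = "shared-secret"  # hypothetical; keep the real value in n8n credentials
    body = b'{"candidate_email": "jane@example.com"}'
    signature = sign_payload(secret, body)
    print(verify_signature(secret, body, signature))         # True
    print(verify_signature(secret, body + b" ", signature))  # False
```

Verifying against the raw bytes matters: re-serializing parsed JSON can change whitespace or key order and break the comparison.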

2. Text Splitter

Resumes and cover letters can be lengthy. The Text Splitter node divides content into smaller chunks (example settings: chunkSize=400, chunkOverlap=40) so that each embedding represents a focused passage. The overlap repeats a little text at each boundary, preserving context for sentences that straddle two chunks. Adjust the chunk size based on your average document length and the input limits of your embedding model.
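To make the two settings concrete, here is a fixed-window splitter that measures chunks in characters. It is a simplification: n8n offers several splitter nodes, some of which split on separators such as paragraph breaks, and whether lengths are counted in characters or tokens depends on the splitter you choose, so treat the character unit here as an assumption.

```python
def split_text(text: str, chunk_size: int = 400, chunk_overlap: int = 40) -> list[str]:
    """Split text into windows of at most chunk_size characters.

    Consecutive chunks share chunk_overlap characters, so text near a boundary
    appears in both neighboring chunks.
    """
    if chunk_size <= 0 or not 0 <= chunk_overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    resume_text = "x" * 1000
    print([len(c) for c in split_text(resume_text)])  # [400, 400, 280]
```

With the example settings, each new chunk starts 360 characters after the previous one, so a 1,000-character resume yields three chunks.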

3. Embeddings

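Use an embedding model such as OpenAI’s text-embedding-3-small to convert text chunks into vectors. Embeddings capture semantic meaning and enable similarity search. In the template, the same Embeddings node feeds both Pinecone Insert (to store embeddings) and Pinecone Query (to retrieve relevant context for RAG). Insert and query must use the same model, since vectors from different models are not comparable, and the Pinecone index dimension must match the model’s output size (1,536 by default for text-embedding-3-small).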
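Similarity search boils down to comparing vectors. The sketch below ranks stored chunks by cosine similarity to a query vector. The three-dimensional vectors and chunk IDs are toy stand-ins for real embeddings, which have far more dimensions; in the workflow, Pinecone performs this ranking at scale using the metric configured on the index.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def top_k(query: list[float], stored: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Return the k stored chunk IDs most similar to the query, best first."""
    scored = [(chunk_id, cosine_similarity(query, vec)) for chunk_id, vec in stored.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    stored = {
        "python-experience": [0.9, 0.1, 0.0],
        "team-leadership": [0.0, 1.0, 0.0],
        "backend-projects": [0.5, 0.5, 0.0],
    }
    query = [1.0, 0.0, 0.0]  # toy vector for a query such as "Python developer"
    print([chunk_id for chunk_id, _ in top_k(query, stored, k=2)])
    # ['python-experience', 'backend-projects']
```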

4. Pinecone Insert and Pinecone Query

Pinecone serves as the vector store. Insert the embeddings with metadata (e.g., candidate_id, document_type, original_text snippet). For retrieval, query Pinecone with the current application context to fetch the most relevant chunks and pass them to the RAG agent as context. Choose a descriptive index name such as new-job-application-parser (Pinecone index names may contain only lowercase letters, digits, and hyphens, so underscores are rejected), and define metadata fields that let you filter results later.
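Each stored vector needs an ID, its values, and the metadata you plan to filter on. The helper below assembles records in the id/values/metadata shape accepted by Pinecone’s upsert operation. The ID scheme, the extra chunk_index field, and the 200-character snippet limit are illustrative choices, not requirements; in the n8n template, the Pinecone Insert node typically handles this step for you.

```python
from typing import Any


def build_records(
    candidate_id: str,
    document_type: str,
    source: str,
    chunks: list[str],
    embeddings: list[list[float]],
    snippet_len: int = 200,
) -> list[dict[str, Any]]:
    """Pair each chunk with its embedding and the metadata used for filtering."""
    if len(chunks) != len(embeddings):
        raise ValueError("each chunk needs exactly one embedding")
    records = []
    for i, (chunk, vector) in enumerate(zip(chunks, embeddings)):
        records.append(
            {
                # Hypothetical ID scheme: unique per candidate, document, and chunk
                "id": f"{candidate_id}#{document_type}#{i}",
                "values": vector,
                "metadata": {
                    "candidate_id": candidate_id,
                    "document_type": document_type,  # e.g. "resume" or "cover_letter"
                    "source": source,  # e.g. "email" or "portal"
                    "chunk_index": i,
                    "original_text": chunk[:snippet_len],  # short snippet, not the full text
                },
            }
        )
    return records
```

Because candidate_id, document_type, and source are stored on every record, a query can later be restricted with a metadata filter, for example to one candidate’s resume chunks.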

5. Window Memory and Vector Tool

Window Memory keeps a short buffer of recent interactions, giving the RAG agent short-term state across steps. The Vector Tool exposes the Pinecone Query node as a tool that the RAG agent can call to fetch contextual documents. Together they enable Retrieval-Augmented Generation: the agent answers using both the language model and the retrieved application context.
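Conceptually, a window memory is a fixed-size buffer that forgets the oldest exchange once it is full. The class below is a simplified illustration of that idea, not n8n’s implementation; the class name and the default window size of 5 are arbitrary.

```python
from collections import deque


class WindowMemory:
    """Keep only the most recent `window_size` user/assistant exchanges."""

    def __init__(self, window_size: int = 5) -> None:
        if window_size < 1:
            raise ValueError("window_size must be at least 1")
        # A deque with maxlen silently drops the oldest item when a new one is appended
        self._exchanges = deque(maxlen=window_size)

    def add_exchange(self, user_message: str, assistant_message: str) -> None:
        self._exchanges.append((user_message, assistant_message))

    def as_messages(self) -> list[dict[str, str]]:
        """Flatten the window into chat-style messages, oldest first."""
        messages = []
        for user_message, assistant_message in self._exchanges:
            messages.append({"role": "user", "content": user_message})
            messages.append({"role": "assistant", "content": assistant_message})
        return messages


if __name__ == "__main__":
    memory = WindowMemory(window_size=2)
    for n in range(1, 4):
        memory.add_exchange(f"question {n}", f"answer {n}")
    print([m["content"] for m in memory.as_messages()])
    # ['question 2', 'answer 2', 'question 3', 'answer 3']
```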

6. Chat Model and RAG Agent

The RAG Agent node orchestrates the chat model (for example, OpenAI’s chat endpoint) with tools (Vector Tool) and memory (Window Memory). Use a system message to define the agent role, e.g., “You are an assistant for New Job Application Parser.” Provide a prompt template that instructs the agent to parse and return structured fields such as:

- Candidate name

- Email and phone

- Top skills and years of experience

- Role fit summary

- Recommended status (e.g., Pass / Review / Reject)
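Asking the agent to reply with a JSON object makes its output easy to check before anything is written to the sheet. The sketch below validates such a reply; the field names are hypothetical and should match whatever your prompt template specifies, and the allowed status values mirror the Pass / Review / Reject example above.

```python
import json
from typing import Any

# Hypothetical field names; align them with the keys your prompt asks for
REQUIRED_FIELDS = {
    "candidate_name": str,
    "email": str,
    "phone": str,
    "top_skills": list,
    "years_experience": (int, float),
    "role_fit_summary": str,
    "recommended_status": str,
}
ALLOWED_STATUSES = {"Pass", "Review", "Reject"}


def validation_errors(data: Any) -> list[str]:
    """Return a list of problems with the parsed output (empty if it is valid)."""
    if not isinstance(data, dict):
        return ["agent output must be a JSON object"]
    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            errors.append(f"missing field: {field}")
        # bool is a subclass of int, so reject it explicitly
        elif isinstance(data[field], bool) or not isinstance(data[field], expected_type):
            errors.append(f"wrong type for field: {field}")
    status = data.get("recommended_status")
    if isinstance(status, str) and status not in ALLOWED_STATUSES:
        errors.append(f"unknown status: {status}")
    return errors


def parse_agent_output(raw: str) -> dict:
    """Parse the agent's JSON reply, raising ValueError if it is malformed or incomplete."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    errors = validation_errors(data)
    if errors:
        raise ValueError("; ".join(errors))
    return data
```

A ValueError raised here is a natural trigger for the error path that sends the Slack alert described in the error-handling step.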

The agent’s output is appended to Google Sheets for auditability. In the template, the Append Sheet node writes the RAG output to a “Log” sheet under a specified Google Sheets document ID.
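If you prefer to control the column layout yourself, for example in a Code node placed before the Append Sheet node, flattening the parsed fields into a fixed-order row is straightforward. The column list below is hypothetical; list fields such as skills are joined into a single cell, and missing fields become empty cells.

```python
from datetime import datetime, timezone
from typing import Any, Optional

# Hypothetical column order for the "Log" sheet
LOG_COLUMNS = [
    "timestamp",
    "candidate_name",
    "email",
    "phone",
    "top_skills",
    "years_experience",
    "role_fit_summary",
    "recommended_status",
]


def to_log_row(parsed: dict[str, Any], timestamp: Optional[datetime] = None) -> list[str]:
    """Return one spreadsheet row with values in LOG_COLUMNS order."""
    ts = (timestamp or datetime.now(timezone.utc)).isoformat()
    row = [ts]
    for column in LOG_COLUMNS[1:]:
        value = parsed.get(column, "")  # missing fields become empty cells
        if isinstance(value, list):
            value = ", ".join(str(item) for item in value)
        row.append(str(value))
    return row


if __name__ == "__main__":
    row = to_log_row(
        {"candidate_name": "Jane Doe", "top_skills": ["Python", "SQL"], "years_experience": 6},
        timestamp=datetime(2024, 1, 2, tzinfo=timezone.utc),
    )
    print(row)
    # ['2024-01-02T00:00:00+00:00', 'Jane Doe', '', '', 'Python, SQL', '6', '', '']
```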

7. Error handling and Slack alerts

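Route the RAG Agent node’s errors to a Slack Alert node, for example by setting the node’s On Error option to “Continue (using error output)” and connecting that output to Slack. Your team is then notified immediately when parsing fails and can investigate quickly. Include a meaningful error message and context (workflow, node, execution ID) in the alert to speed up troubleshooting.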
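A useful alert says which workflow and node failed, what the error was, and where to look next. The function below builds a message body for a Slack incoming webhook, which accepts a JSON object with a text field. The function and parameter names are hypothetical, and the sketch deliberately refers to the candidate by ID rather than by name or email to keep PII out of Slack.

```python
from typing import Optional


def build_slack_alert(
    workflow: str,
    node: str,
    error_message: str,
    execution_id: Optional[str] = None,
    candidate_id: Optional[str] = None,
    max_error_chars: int = 500,
) -> dict[str, str]:
    """Build a Slack message payload describing a failed run."""
    lines = [
        f":warning: *{workflow}* failed at node *{node}*",
        # Truncate long errors (e.g. stack traces) so the alert stays readable
        f"Error: {error_message[:max_error_chars]}",
    ]
    if execution_id:
        lines.append(f"Execution ID: {execution_id}")
    if candidate_id:
        lines.append(f"Candidate ID: {candidate_id}")  # an ID, never the candidate's name or email
    return {"text": "\n".join(lines)}


if __name__ == "__main__":
    payload = build_slack_alert(
        "New Job Application Parser", "RAG Agent", "missing field: email", execution_id="1234"
    )
    print(payload["text"])
```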

Configuration tips and best practices

- Security: Store API keys and OAuth tokens as n8n credentials rather than inlining them in nodes. Protect the webhook endpoint and validate payloads.

- Data retention: Handle personally identifiable information (PII) in compliance with applicable laws (for example, GDPR or CCPA). Consider redacting or encrypting sensitive fields before storing them in Pinecone or Sheets.

- Pinecone metadata: Store candidate_id, source (email/portal), and document_type as metadata so that queries can be filtered.

- Chunking strategy: Tune chunkSize and chunkOverlap. Smaller chunks tend to improve retrieval precision but produce more vectors, which means more storage and more embedding requests.

- Rate limiting & cost: Monitor API usage (OpenAI, Pinecone) and implement batching or throttling if you expect high volume.

- Testing: Seed the system with a few diverse resumes to verify retrieval quality and the RAG agent’s parsing accuracy.
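For the data-retention tip, a first line of defense is to mask obvious identifiers before text is embedded or logged. The regex-based sketch below masks email addresses and phone-like numbers. It is a heuristic that will miss some formats and cannot detect names or addresses; a dedicated PII-detection service or named-entity model is more appropriate for those.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Phone-number candidates: digits mixed with spaces, dots, dashes, and parentheses
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def _mask_phone(match: re.Match) -> str:
    digits = sum(ch.isdigit() for ch in match.group(0))
    # Only treat 10-15 digit runs as phone numbers so year ranges like "2015-2020" survive
    return "[PHONE]" if 10 <= digits <= 15 else match.group(0)


def redact_pii(text: str) -> str:
    """Mask emails and phone-like numbers before the text is stored."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub(_mask_phone, text)


if __name__ == "__main__":
    print(redact_pii("Reach me at jane.doe@example.com or +1 (555) 010-0199. Acme 2015-2020."))
    # Reach me at [EMAIL] or [PHONE]. Acme 2015-2020.
```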

Scaling and monitoring

For higher throughput, run n8n in queue mode with multiple workers, or use a managed instance. Use observability tools and logs to track failures, average processing time, and request costs. Consider adding a lightweight queue (e.g., Redis or SQS) between the webhook and the processing steps to absorb bursts.
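The point of a queue is to decouple accepting a submission from processing it. The in-process sketch below shows that pattern with Python’s standard queue module: a bounded queue absorbs bursts, a fixed number of workers drain it, and a full queue makes the producer wait instead of overloading downstream APIs. It only illustrates the idea. Unlike Redis or SQS, it does not survive restarts or span machines, and a real webhook would acknowledge the request as soon as the payload is enqueued.

```python
import queue
import threading
from typing import Any, Callable, Iterable


def run_buffered(
    payloads: Iterable[Any],
    process: Callable[[Any], Any],
    max_queue: int = 100,
    workers: int = 2,
) -> list[tuple[str, Any]]:
    """Feed payloads through a bounded queue drained by a fixed pool of workers.

    Returns ("ok", result) or ("error", description) per payload; order is not guaranteed.
    """
    buffer: queue.Queue = queue.Queue(maxsize=max_queue)
    outcomes: list = []
    lock = threading.Lock()
    stop = object()  # sentinel telling a worker to exit

    def worker() -> None:
        while True:
            item = buffer.get()
            if item is stop:
                buffer.task_done()
                return
            try:
                outcome = ("ok", process(item))
            except Exception as exc:  # keep the worker alive and record the failure
                outcome = ("error", repr(exc))
            with lock:
                outcomes.append(outcome)
            buffer.task_done()

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for payload in payloads:
        buffer.put(payload)  # blocks while the queue is full, applying backpressure
    for _ in threads:
        buffer.put(stop)
    for thread in threads:
        thread.join()
    return outcomes


if __name__ == "__main__":
    results = run_buffered(range(5), lambda n: n * n, max_queue=2)
    print(sorted(value for status, value in results if status == "ok"))  # [0, 1, 4, 9, 16]
```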

Extending the parser

This workflow is a strong foundation. Common extensions include:

- Email integration: Add a node to parse incoming resume attachments from email and forward text to the pipeline.

- Resume/CV parsers: Combine with specialized resume-parsing services for more reliable extraction of structured fields such as education and employment history.

- Candidate scoring: Add a scoring node that uses extracted skills/years to compute a fit score for each job opening.

- Dashboarding: Push parsed results to a BI tool or Airtable for richer reporting and filtering.

- Human-in-the-loop: Add a Slack or email approval step where recruiters can validate or correct parsed data before final storage.
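For the candidate-scoring idea, one simple approach is a weighted blend of skill coverage and experience. The function name, the formula, the 70/30 weighting, and the 0 to 100 scale below are hypothetical starting points to tune against your own hiring decisions, not a validated scoring model.

```python
def fit_score(
    candidate_skills: list[str],
    years_experience: float,
    required_skills: list[str],
    min_years: float,
    skill_weight: float = 0.7,
) -> float:
    """Score a candidate from 0 to 100 against one job opening."""
    if not 0.0 <= skill_weight <= 1.0:
        raise ValueError("skill_weight must be between 0 and 1")
    required = {s.strip().lower() for s in required_skills}
    have = {s.strip().lower() for s in candidate_skills}
    # Fraction of required skills the candidate lists (case-insensitive match)
    skill_coverage = len(required & have) / len(required) if required else 1.0
    # Experience counts up to the minimum requirement; extra years add nothing
    experience_ratio = min(max(years_experience, 0.0) / min_years, 1.0) if min_years > 0 else 1.0
    score = skill_weight * skill_coverage + (1 - skill_weight) * experience_ratio
    return round(100 * score, 1)


if __name__ == "__main__":
    print(fit_score(["Python", "SQL", "Docker"], 3, ["python", "sql", "kubernetes"], min_years=5))
    # 64.7
```

Capping the experience ratio keeps long careers from compensating for missing required skills; drop the cap if your roles reward seniority beyond the minimum.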

Privacy and compliance

Hiring data is sensitive. Ensure you have a lawful basis to process candidate information and implement data retention policies. Avoid storing full PII in public or shared indexes. If required, anonymize or encrypt candidate identifiers stored in Pinecone and Google Sheets.
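One way to anonymize identifiers while keeping them usable as filter keys is keyed hashing: the same email always maps to the same token, but without the secret key the token cannot be reversed or guessed by hashing candidate emails. This is a sketch that assumes the key lives in n8n credentials or a similar secret store. Pseudonymized data generally still counts as personal data under regulations such as GDPR, so retention policies still apply.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Map an identifier (e.g. an email) to a stable, non-reversible token."""
    # Normalize first so "Jane@Example.com " and "jane@example.com" get the same token
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    key = b"load-this-from-a-secret-store"  # hypothetical placeholder key
    print(pseudonymize("Jane@Example.com ", key) == pseudonymize("jane@example.com", key))  # True
```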

How to get started (quick checklist)

- Provision OpenAI and Pinecone accounts and store credentials in n8n.

- Create a Google Sheets document and configure OAuth for n8n.

- Import the n8n workflow template provided in this post (or recreate nodes as described).

- Configure webhook security and test with sample payloads.

- Tune chunk sizes and test the RAG agent outputs with several resume samples.

- Enable Slack alerts and monitor the first 100 processed applications closely.

Conclusion and next steps

Automating job application parsing with n8n, embeddings, and a vector store reduces manual effort, improves consistency, and provides semantic search across candidate content. This RAG-based approach gives your hiring team a reliable foundation to scale recruitment while keeping costs and overhead predictable.

Ready to automate candidate intake? Import the workflow template into your n8n instance, run a few test submissions, and iterate on prompts and chunking until the RAG agent reliably extracts the fields you need. If you’d like help customizing the template for your hiring process, contact our team for a demo or consulting engagement.

Import the template, test it with 10 sample resumes, and join a 30-minute walkthrough to optimize parsing thresholds and output formats for your hiring pipeline.