Automate Lead Generation & Cold Email Outreach with n8n

What You Will Learn

In this guide, you will learn how to use an n8n workflow template to:

- Collect lead criteria through a form and trigger the workflow automatically

- Search for businesses via an external API based on your target audience

- Filter websites and extract the best contact email using AI

- Validate and store leads in Google Sheets without duplicates

- Generate personalized cold emails with AI and send them via Gmail

- Log email status and timestamps for tracking and reporting

By the end, you will understand how each n8n node works in the template and how the entire automation fits together, from intake to outreach.

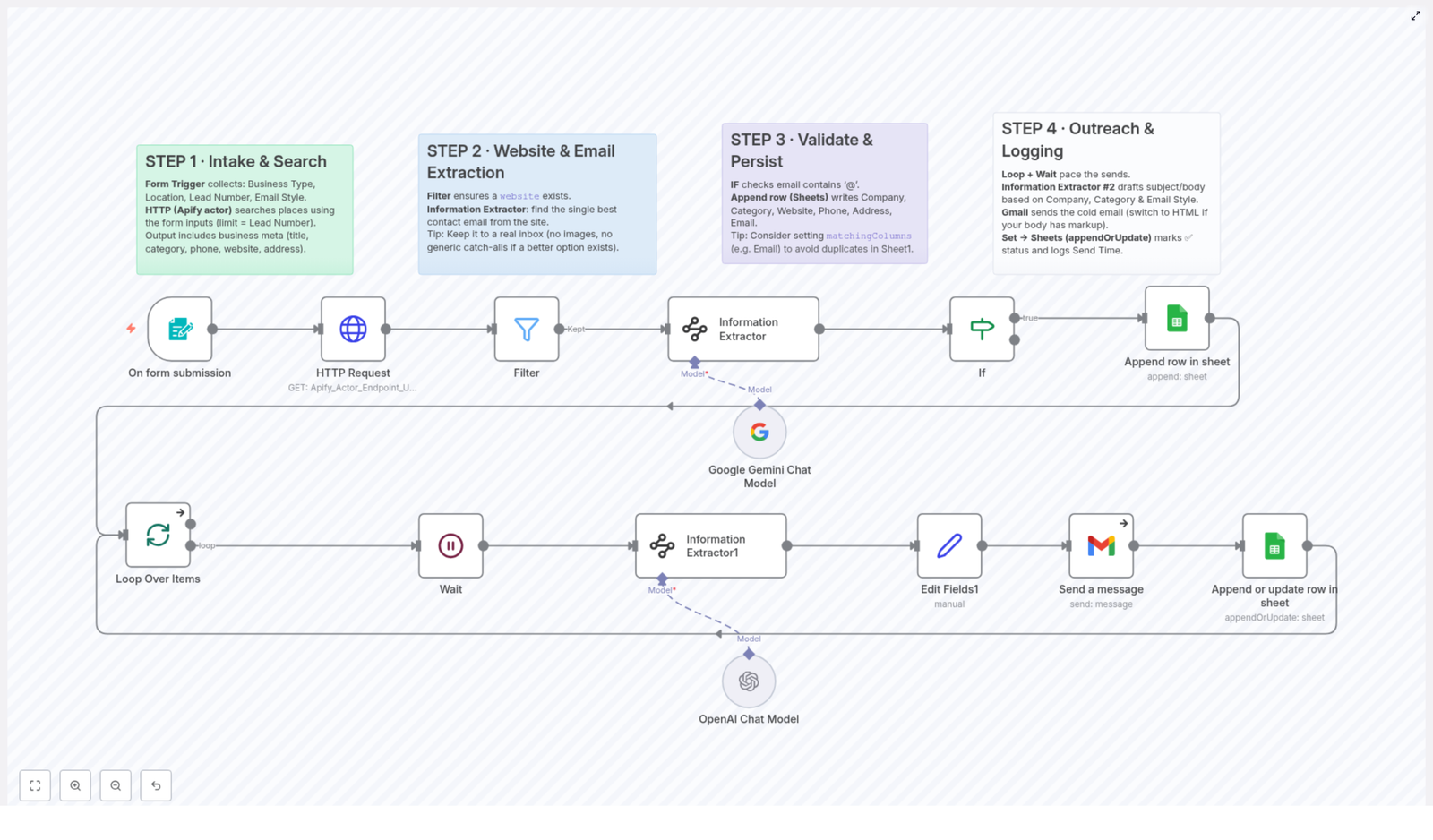

Concept Overview: How the Workflow Works

This n8n workflow automates the full lead generation and cold email process. It connects:

- A Form Trigger to collect your targeting criteria

- An HTTP Request node to call an external business search API (for example, an Apify actor)

- An AI-powered Information Extractor to find the best email address on each site

- Validation logic using Filter and If nodes

- A Google Sheets integration to store and deduplicate leads

- A Loop Over Items and Wait structure to pace email sending

- A second Information Extractor to draft personalized email content

- Gmail nodes to send emails and log the result back to your sheet

Think of it as a pipeline: you define what kind of leads you want, the workflow finds businesses, extracts contact data, validates it, stores it, and then sends tailored emails at a safe pace.

Step 1 – Collect Lead Criteria & Start the Workflow

Form Trigger: Intake of Targeting Parameters

The workflow starts with a Form Trigger node. This is where you or your team provide the input that will guide the entire automation. The form typically asks for:

- Business Type – for example, “restaurants,” “SaaS companies,” or “marketing agencies”

- Location – such as a city, region, or country

- Lead Number – how many leads you want the workflow to collect

- Email Style – the tone of the outreach email, for example:

  - Friendly

  - Professional

  - Simple

These inputs are passed into the next nodes and used for search, filtering, and email generation. Treat this form as your control panel for each campaign.
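
As a rough illustration of what the form hands to the rest of the workflow, the following Python sketch models the four inputs and rejects incomplete submissions. It is not n8n configuration: the `LeadCriteria` class and the `parse_form_submission` helper are hypothetical names, and the dictionary keys simply assume the form labels listed above.

```python
from dataclasses import dataclass

# The three tones named in the form; anything else is rejected.
ALLOWED_STYLES = {"Friendly", "Professional", "Simple"}


@dataclass(frozen=True)
class LeadCriteria:
    business_type: str
    location: str
    lead_number: int
    email_style: str


def parse_form_submission(fields: dict) -> LeadCriteria:
    """Validate raw form fields and return typed campaign criteria."""
    business_type = str(fields.get("Business Type", "")).strip()
    location = str(fields.get("Location", "")).strip()
    if not business_type or not location:
        raise ValueError("Business Type and Location are required")

    # Form values usually arrive as strings, so convert before checking.
    lead_number = int(fields.get("Lead Number", 0))
    if lead_number <= 0:
        raise ValueError("Lead Number must be a positive integer")

    email_style = str(fields.get("Email Style", "")).strip()
    if email_style not in ALLOWED_STYLES:
        raise ValueError(f"Email Style must be one of {sorted(ALLOWED_STYLES)}")

    return LeadCriteria(business_type, location, lead_number, email_style)
```

Validating at intake like this means a typo in the form fails immediately instead of surfacing later as an empty search or an oddly toned email.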

Business Search via HTTP Request

After the form is submitted, an HTTP Request node calls an external API to find businesses that match your criteria. A common choice is an Apify actor that can search business directories or similar sources.

The HTTP Request node is configured to:

- Use the Business Type and Location from the form as search parameters

- Limit the number of returned results to the specified Lead Number

- Retrieve structured data for each business, such as:

  - Company title or name

  - Category

  - Phone number

  - Website URL

  - Address

At the end of this step, you have a raw list of potential leads with basic business information.
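
To make the shape of this step concrete, here is a minimal Python sketch of how the form values might map onto a search request and how each result could be trimmed to the fields listed above. Everything specific in it is an assumption: the endpoint URL is a placeholder, and the `searchQuery` and `maxResults` field names are hypothetical, so check the documentation of the API or actor you actually use.

```python
import json


def build_search_request(business_type: str, location: str, lead_number: int) -> dict:
    """Describe the HTTP call without sending it."""
    body = {
        # Hypothetical input field names; real APIs will differ.
        "searchQuery": f"{business_type} in {location}",
        "maxResults": lead_number,
    }
    return {
        "method": "POST",
        "url": "https://api.example.com/business-search",  # placeholder endpoint
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def normalize_result(raw: dict) -> dict:
    """Keep only the fields the later steps rely on, defaulting to empty strings."""
    return {
        "title": raw.get("title", ""),
        "category": raw.get("category", ""),
        "phone": raw.get("phone", ""),
        "website": raw.get("website", ""),
        "address": raw.get("address", ""),
    }
```

Normalizing every result to the same keys keeps the downstream Filter and extraction steps simple, because they never have to guess whether a field exists.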

Step 2 – Filter Websites & Extract the Best Email with AI

Filter Node: Keep Only Leads with a Website

Not every result from the API will have a usable website. Since the next step relies on visiting each site to find an email address, the workflow uses a Filter node to improve data quality.

The Filter node checks whether each item includes a valid website field. Only entries that contain a website URL are allowed to continue. This prevents unnecessary errors and wasted AI calls on incomplete data.
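
The same idea can be sketched in Python. This is an illustration of the filtering logic, not the Filter node's configuration, and it is slightly stricter than a plain "field is not empty" check: it assumes the field is called `website` and only accepts absolute `http` or `https` URLs, so bare domains such as `example.com` would be dropped.

```python
from urllib.parse import urlparse


def has_usable_website(item: dict) -> bool:
    """Return True when the item carries an absolute http(s) URL."""
    url = str(item.get("website") or "").strip()
    if not url:
        return False
    parsed = urlparse(url)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def filter_leads(items: list) -> list:
    """Keep only the leads that can be passed on to email extraction."""
    return [item for item in items if has_usable_website(item)]
```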

Information Extractor: AI-Based Email Discovery

Once you have a clean list of businesses with websites, the workflow uses an Information Extractor node powered by AI models like Google Gemini or OpenAI.

This node is configured to:

- Visit each company’s website

- Scan the content for contact information

- Identify the single best contact email address

The AI is instructed to prioritize:

- Real inbox email addresses over image-based or obfuscated emails

- Specific contact emails over generic catch-all addresses where possible

The goal is to ensure your outreach reaches a real, active inbox rather than a generic or low-priority email.
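
The template delegates this choice to an AI model. For comparison, the priorities above can be approximated with a simple rule-based stand-in, sketched here in Python. It is not what the Information Extractor does internally; the regular expression, the list of generic mailbox names, and the `pick_best_email` helper are all assumptions made for illustration.

```python
import re
from typing import Optional

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Assumed examples of generic mailboxes; extend to suit your audience.
GENERIC_LOCAL_PARTS = {"info", "contact", "hello", "admin", "office", "support"}
# Asset names such as "logo@2x.png" look like emails to the regex.
ASSET_SUFFIXES = (".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp")


def pick_best_email(page_text: str) -> Optional[str]:
    """Return one address, preferring specific mailboxes over generic ones."""
    candidates = []
    for match in EMAIL_RE.findall(page_text):
        email = match.lower()
        if email.endswith(ASSET_SUFFIXES):
            continue  # image file name, not an inbox
        if email not in candidates:
            candidates.append(email)
    if not candidates:
        return None

    def score(email: str) -> int:
        local_part = email.split("@", 1)[0]
        return 0 if local_part in GENERIC_LOCAL_PARTS else 1

    # max() keeps the first candidate when scores tie, so page order breaks ties.
    return max(candidates, key=score)
```

A heuristic like this can also serve as a cheap cross-check on the model's answer before a lead is stored.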

Step 3 – Validate Email Addresses & Store Leads

If Node: Basic Email Format Validation

After extracting emails, the workflow performs a simple validation step using an If node. This node checks whether the extracted email address contains the @ character.

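If the condition is met, the email passes this minimal format check and the lead continues to the next step. If not, the lead is skipped to avoid storing invalid or incomplete contact information. Note that the check only confirms the presence of @; it does not confirm that the address exists or can receive mail.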
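
In Python terms, the If node's condition amounts to the first function below. The second function is an optional, slightly stricter variant that is not part of the template; it is shown only to illustrate how you might tighten the rule, and both helper names are hypothetical.

```python
def passes_basic_check(email) -> bool:
    """Mirror of the template's rule: the value must contain '@'."""
    return isinstance(email, str) and "@" in email


def passes_stricter_check(email) -> bool:
    """Assumed extension: one '@', a non-empty local part, a dotted domain."""
    if not isinstance(email, str):
        return False
    candidate = email.strip()
    local_part, separator, domain = candidate.partition("@")
    return (
        bool(separator)
        and bool(local_part)
        and "@" not in domain
        and "." in domain
        and " " not in candidate
    )
```

The basic rule accepts a value such as `@`, while the stricter variant rejects it, which shows how little the single-character check guarantees on its own.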

Google Sheets: Centralized Lead Storage and Deduplication

Valid leads are then written to a Google Sheet. This sheet becomes your main database for collected leads and contains columns such as:

- Company Name

- Email

- Category

- Website

- Phone

- Address

To keep your data clean, the Google Sheets node uses the matchingColumns option for deduplication. A common choice is to match on the Email field. This means that if a lead with the same email is found again, it is not added as a new row, so the sheet stays free of repeated entries.
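
The effect of matching on a column can be sketched in Python with a list of dictionaries standing in for the sheet. This is an illustration of the deduplication idea, not the Google Sheets node's implementation. The `upsert_lead` helper is hypothetical, and trimming and lowercasing the email before comparing is an added assumption; the node itself may compare values exactly as stored.

```python
from typing import Dict, List


def upsert_lead(rows: List[Dict[str, str]], lead: Dict[str, str], key: str = "Email") -> str:
    """Update the row whose key column matches, or append a new row."""
    needle = lead[key].strip().lower()
    for row in rows:
        if row.get(key, "").strip().lower() == needle:
            row.update(lead)  # refresh the existing row in place
            return "updated"
    rows.append(dict(lead))
    return "appended"
```

Running the same lead through `upsert_lead` twice leaves a single row in `rows`, which is the behavior you want from the sheet.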

Step 4 – Automate Outreach & Log Results

Loop Over Items: Process Leads in Batches

With a validated and stored list of leads, the workflow moves into the outreach phase. A Loop Over Items node handles each lead one by one or in small batches.

Inside this loop, a Wait node is often included. The Wait node:

- Introduces delays between email sends

- Helps you avoid spam detection and sending rate limits

- Makes your outreach appear more natural and less automated
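
The loop-and-wait pattern can be sketched in a few lines of Python. This is only an analogy for the Loop Over Items and Wait nodes: `process_in_batches` is a hypothetical helper, and the default batch size and delay are placeholder values rather than settings from the template.

```python
import time
from typing import Callable, Iterable


def process_in_batches(
    leads: Iterable,
    handle: Callable,
    batch_size: int = 1,
    delay_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Handle leads batch by batch, pausing between batches."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    queue = list(leads)
    for start in range(0, len(queue), batch_size):
        for lead in queue[start:start + batch_size]:
            handle(lead)
        # Pause only when another batch is still waiting.
        if start + batch_size < len(queue):
            sleep(delay_seconds)
```

Passing `sleep` as a parameter makes the pacing easy to test without actually waiting, and skipping the pause after the final batch avoids a pointless delay at the end of a run.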

Information Extractor #2: Generate Personalized Email Content

Next, the workflow uses a second Information Extractor node, again powered by AI models like Google Gemini or OpenAI. This time, the goal is not to extract an email, but to generate the email you will send.

This node uses:

- The company’s details (name, category, website, etc.)

- The Email Style chosen in the initial form (Friendly, Professional, or Simple)

Based on these inputs, the AI drafts:

- A personalized email subject line

- A tailored email body that speaks directly to that company

This step turns raw lead data into context-aware, human-like outreach messages without manual writing.
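
To show how the lead data and the chosen style could come together, here is a minimal Python sketch of a prompt builder and a parser for the model's reply. The prompt wording, the tone descriptions in `STYLE_GUIDANCE`, and the JSON reply format are assumptions made for illustration; the template's actual prompt lives in the Information Extractor node and may look quite different.

```python
import json

# Assumed tone descriptions; the template's own wording may differ.
STYLE_GUIDANCE = {
    "Friendly": "warm and conversational",
    "Professional": "formal and concise",
    "Simple": "short sentences in plain language",
}


def build_email_prompt(lead: dict, email_style: str) -> str:
    """Combine company details and the chosen style into one prompt."""
    guidance = STYLE_GUIDANCE.get(email_style, STYLE_GUIDANCE["Professional"])
    return (
        "Write a cold outreach email.\n"
        f"Company: {lead.get('title', '')}\n"
        f"Category: {lead.get('category', '')}\n"
        f"Website: {lead.get('website', '')}\n"
        f"Tone: {email_style} ({guidance})\n"
        'Return JSON with the keys "subject" and "body".'
    )


def parse_draft(model_output: str) -> dict:
    """Check that the model's reply contains both parts of the email."""
    draft = json.loads(model_output)
    missing = [key for key in ("subject", "body") if key not in draft]
    if missing:
        raise ValueError(f"Draft is missing fields: {missing}")
    return {"subject": draft["subject"].strip(), "body": draft["body"].strip()}
```

Asking for a fixed structure and validating it before sending guards against a reply that contains a body but no subject line.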

Gmail Nodes: Send the Cold Emails

Once the subject and body are generated, Gmail nodes handle the actual sending. These nodes are configured with your Gmail account and can:

- Send emails to the extracted contact email address

- Use the AI-generated subject and body content

- Optionally send in HTML format for richer styling and layout
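
The Gmail node assembles the message for you, but it can help to see what that message consists of. The Python sketch below builds an equivalent email with the standard library, including the optional HTML version, and stops short of sending it. The `build_message` helper and its parameter names are hypothetical.

```python
from email.message import EmailMessage
from typing import Optional


def build_message(
    sender: str,
    recipient: str,
    subject: str,
    body_text: str,
    body_html: Optional[str] = None,
) -> EmailMessage:
    """Assemble an email with a plain-text body and an optional HTML version."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = subject
    message.set_content(body_text)
    if body_html:
        # Mail clients that render HTML will prefer this part.
        message.add_alternative(body_html, subtype="html")
    return message
```

Keeping a plain-text body alongside the HTML version means the email still reads well in clients that do not render HTML.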

Append or Update: Log Email Status and Timestamp

After each email is sent, the workflow writes results back to your Google Sheet using an appendOrUpdate operation. This step usually records:

- A status indicator, such as a checkmark for successful sends

- The timestamp of when the email was sent

This logging keeps your outreach records synchronized and gives your sales or marketing team real-time visibility into what has been sent and when.
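
As an illustration of what gets written back, the following Python sketch records a status and timestamp on the matching row, again using a list of dictionaries in place of the sheet. The column names `Status` and `Sent At`, the UTC ISO 8601 timestamp format, and the `mark_sent` helper are assumptions; use whatever columns your own sheet defines.

```python
from datetime import datetime, timezone
from typing import Dict, List, Optional


def mark_sent(
    rows: List[Dict[str, str]],
    email: str,
    sent_at: Optional[datetime] = None,
) -> Dict[str, str]:
    """Record a send on the matching row, appending one if none exists."""
    stamp = (sent_at or datetime.now(timezone.utc)).isoformat()
    log_entry = {"Status": "✓", "Sent At": stamp}
    for row in rows:
        if row.get("Email") == email:
            row.update(log_entry)
            return row
    new_row = {"Email": email, **log_entry}
    rows.append(new_row)
    return new_row
```

Storing the timestamp in a sortable format such as ISO 8601 makes it straightforward to filter or chart sends by date later.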

Key Benefits of This n8n Workflow Template

- Fully automated lead generation – Save hours of manual research, copy-pasting, and data cleanup.

- High-quality email extraction – AI-based scraping and selection help you reach real inboxes, not dead or generic addresses.

- Scalable, safe outreach – Looping with Wait nodes lets you scale up volume while reducing spam risk.

- Real-time tracking – Google Sheets logging keeps everyone aligned with up-to-date outreach history.

- Customizable communication style – Quickly switch between Friendly, Professional, or Simple email tones to match your audience.

Quick Recap

- Intake – Use a Form Trigger to define Business Type, Location, Lead Number, and Email Style.

- Search – Call an external API with an HTTP Request node to find matching businesses.

- Filter – Keep only leads with a website using a Filter node.

- Extract – Use an AI-powered Information Extractor to find the best contact email.

- Validate & Store – Check email format with an If node, then write valid leads to Google Sheets with deduplication.

- Outreach – Loop over leads, pace sending with Wait, generate personalized emails with AI, and send via Gmail.

- Log – Append or update the sheet with send status and timestamps for full visibility.

FAQ

Do I need coding skills to use this template?

No. The workflow is built with n8n’s visual interface. You configure nodes, connect them, and adjust settings, but you do not need to write code to follow the steps described here.

Can I change the email style or content?

Yes. The Email Style comes from the form input, and you can adjust the prompts in the Information Extractor nodes to change tone, structure, or call to action. This lets you adapt the outreach to different campaigns or audiences.

How do I avoid sending duplicate emails to the same lead?

The Google Sheets node uses matchingColumns (for example, on the Email field) to prevent duplicate rows, so each lead appears in the sheet only once. For more advanced deduplication, you can add further checks or conditions in n8n, such as skipping leads whose row already has a send status logged.

Is it possible to use a different email provider instead of Gmail?

The template uses Gmail nodes by default, but n8n supports many email integrations. You can swap the Gmail nodes for another email provider while keeping the rest of the workflow intact.

Start Automating Your Lead Generation & Outreach

This n8n workflow template gives you a complete system for finding leads, extracting high-quality contact details, and sending personalized cold emails at scale. Once configured, it runs with minimal manual effort and keeps your lead database and outreach logs up to date.

Integrate this template into your marketing stack to save time, improve consistency, and increase your chances of converting cold prospects into warm opportunities.