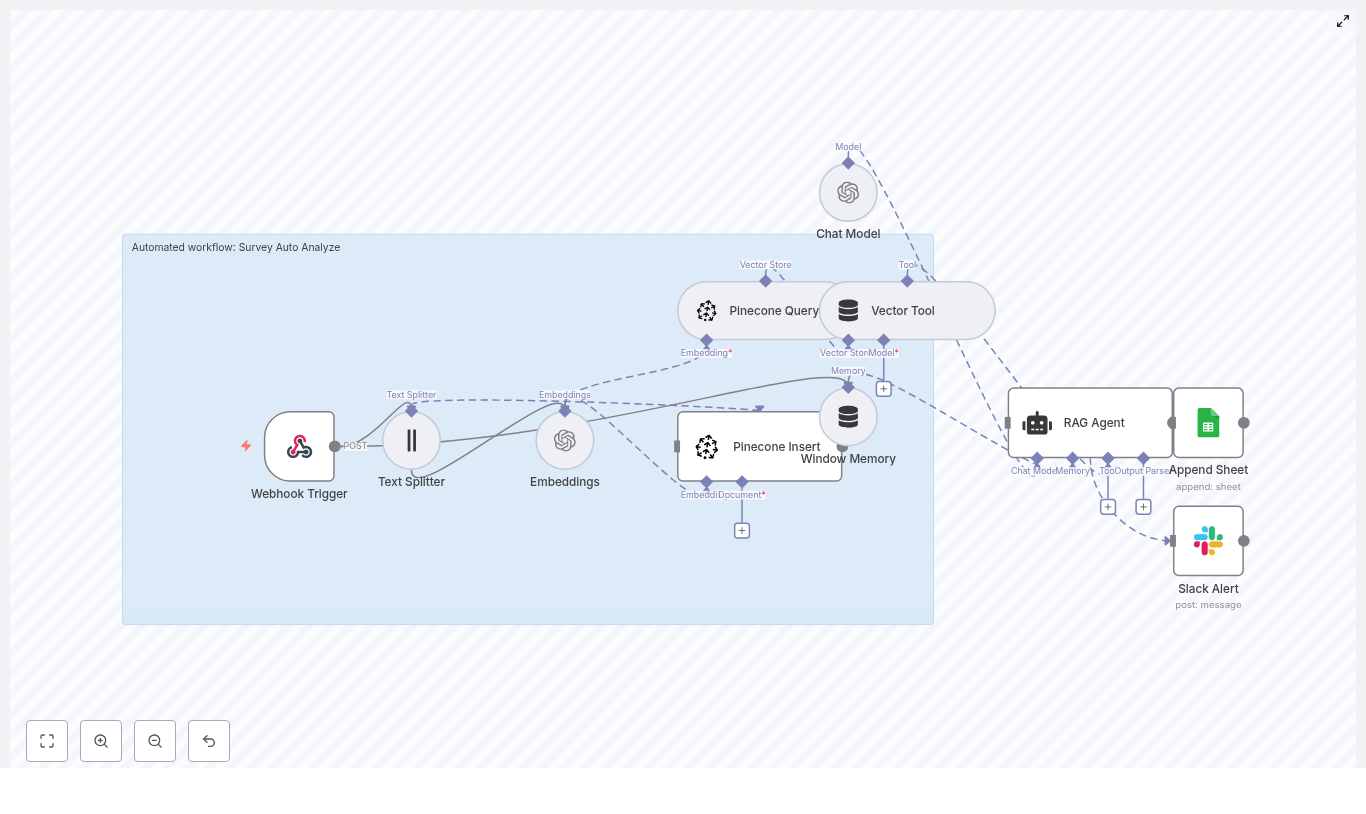

Automate Survey Analysis with n8n, OpenAI & Pinecone

Survey responses are packed with insights, but reading and analyzing them manually does not scale. In this step-by-step guide you will learn how to build a reusable Survey Auto Analyze workflow in n8n that uses OpenAI embeddings, Pinecone vector search, and a RAG (Retrieval-Augmented Generation) agent to process survey data automatically.

By the end, you will have an automation that:

- Receives survey submissions through an n8n webhook

- Splits long answers into chunks and generates embeddings with OpenAI

- Stores vectors and metadata in Pinecone for later retrieval

- Uses a RAG agent to summarize sentiment, themes, and actions

- Logs results to Google Sheets and sends error alerts to Slack

Learning goals

This tutorial is designed as a teaching guide. As you follow along, you will learn how to:

- Understand the overall survey analysis architecture in n8n

- Configure each n8n node required for the workflow

- Use OpenAI embeddings and Pinecone for semantic search

- Set up a RAG agent to generate summaries and insights

- Log outputs to Google Sheets and handle errors with Slack alerts

- Apply best practices for chunking, metadata, cost control, and security

Key concepts and tools

Why this architecture works well for survey analysis

The workflow combines several tools, each responsible for one part of the process:

- n8n – Open-source workflow automation that connects all components, from receiving webhooks to sending alerts.

- OpenAI embeddings – Converts survey text into numerical vectors that capture semantic meaning, which makes similarity search and RAG possible.

- Pinecone – A managed vector database that stores embeddings and lets you quickly retrieve similar responses.

- RAG agent – A Retrieval-Augmented Generation agent that uses retrieved context from Pinecone plus an LLM to generate summaries, sentiment analysis, themes, and recommended actions.

- Google Sheets & Slack – Simple destinations for logging processed results and receiving alerts when something goes wrong.

Instead of manually reading every response, this architecture lets you:

- Index responses for future comparison and trend analysis

- Automatically summarize each new survey submission

- Surface key pain points and actions in near real time

How the n8n workflow is structured

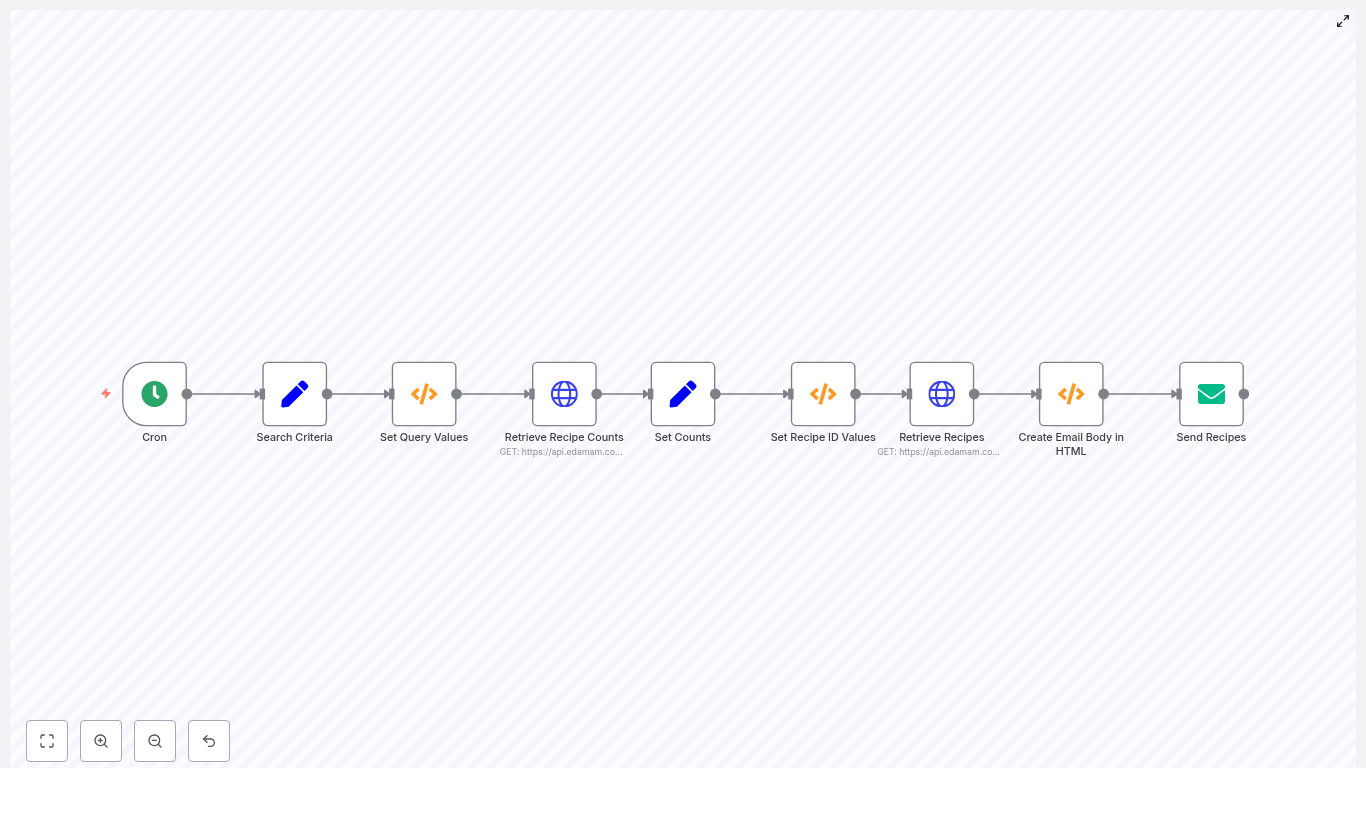

Before building, it helps to picture the flow from left to right. The core nodes in the workflow are:

- Webhook Trigger – Receives incoming survey submissions as POST requests.

- Text Splitter – Breaks long answers into smaller chunks to prepare for embeddings.

- Embeddings (OpenAI) – Generates a vector for each text chunk.

- Pinecone Insert – Stores vectors and metadata in a Pinecone index.

- Pinecone Query + Vector Tool – Retrieves similar chunks when you want context for a new analysis.

- Window Memory – Maintains short-term context for the agent during a single request.

- RAG Agent – Uses the LLM and retrieved context to analyze and summarize the response.

- Append Sheet (Google Sheets) – Logs the agent’s output in a spreadsheet.

- Slack Alert – Sends a message when an error occurs, including details for troubleshooting.

Next, we will build each part of this pipeline in n8n step by step.

Step-by-step: Building the Survey Auto Analyze workflow in n8n

Step 1 – Create the Webhook Trigger

The webhook is the entry point for your survey data.

- In n8n, add a Webhook node.

- Set the HTTP Method to

POST. - Set the Path to something like

survey-auto-analyze. - Copy the generated webhook URL.

- In your survey provider (for example Typeform, a Google Forms webhook integration, or a custom app), configure it to send a POST request to this webhook URL whenever a response is submitted.

The payload you receive should include at least:

respondent_idtimestampanswers(for example a map of question IDs to free-text answers)- Any extra metadata you care about, such as

sourceor survey name

Step 2 – Split long text into chunks

Embedding models work best when text is not too long. Chunking also improves retrieval quality later.

- Add a Text Splitter node after the Webhook.

- Choose a character-based splitter.

- Set:

- chunkSize to a value like

400 - chunkOverlap to a value like

40

- chunkSize to a value like

This means each long answer will be broken into overlapping segments. The overlap helps preserve context between chunks so that semantic search in Pinecone works better.

Step 3 – Generate embeddings with OpenAI

Next, you will convert each text chunk into a vector representation using OpenAI.

- Add an Embeddings node.

- Select the model

text-embedding-3-smallor the latest recommended OpenAI embedding model. - Attach your OpenAI API credentials in n8n.

- Configure the node so that for each chunk from the Text Splitter, the embeddings endpoint is called and a vector is returned.

Each output item from this node will now contain the original text chunk plus its embedding vector.

Step 4 – Insert embeddings into Pinecone

Now you will store the vectors in Pinecone so they can be used for retrieval and RAG later.

- Add a Pinecone Insert node and connect it to the Embeddings node.

- Provide your Pinecone index name, for example

survey_auto_analyze. - Map the embedding vector to the appropriate field expected by Pinecone.

- Add metadata fields such as:

source(for exampletypeform)respondent_idquestion_idtimestamp

Rich metadata makes it much easier to filter and audit items later. For example, you can query only responses from a particular survey, time range, or question.

Step 5 – Configure retrieval for RAG using Pinecone

When a new response comes in, you may want to analyze it in the context of similar past responses. That is where retrieval comes in.

- Add a Pinecone Query node.

- Use the embedding of the current response (or its chunks) as the query vector.

- Set topK to the number of nearest neighbors you want to retrieve, for example

5to10. - Connect a Vector Tool node so that the retrieved documents can be passed as context into the agent.

The Pinecone Query node returns the most semantically similar chunks, which the Vector Tool then exposes to the RAG agent as a source of contextual information.

Step 6 – Set up Window Memory

For many survey analysis cases, you will want the agent to keep track of short-term context during processing of a single request.

- Add a Window Memory node.

- Configure it according to how much conversational or request-specific history you want to preserve.

This memory is typically short-lived and scoped to the current execution, which helps the agent handle multi-step reasoning without exceeding token limits.

Step 7 – Configure the RAG Agent in n8n

Now you will put everything together in a Retrieval-Augmented Generation agent.

- Add an Agent node.

- Set a clear system message, for example:

You are an assistant for Survey Auto Analyze. Process the following data to produce a short summary, sentiment, key themes, and recommended action. - Attach:

- Your chosen Chat Model (LLM)

- The Vector Tool output (retrieved documents from Pinecone)

- The Window Memory node

- Define the expected output format. You can use:

- Plain text, for example a readable summary

- Structured JSON, if you want to parse fields like

sentiment,themes, andactionsdownstream

The agent will read the current survey response, look up similar past chunks in Pinecone, and then generate an analysis that reflects both the new data and the historical context.

Step 8 – Log results to Google Sheets

To keep a simple log of all analyzed responses, you can append each result to a Google Sheet.

- Add a Google Sheets node and choose the Append Sheet operation.

- Connect it to the output of the RAG Agent node.

- Select or create a sheet, for example a tab named

Log. - Map the fields from the agent output, such as:

- Summary

- Sentiment

- Themes

- Recommended actions

- Respondent ID and timestamp

Over time, this sheet becomes a searchable record of all processed survey responses and their AI-generated insights.

Step 9 – Handle errors with Slack alerts

To make the workflow robust, you should know when something fails so that you can fix it quickly.

- On the nodes that are most likely to fail (for example external API calls), configure an onError branch.

- Add a Slack node to this error path.

- Set up the Slack node to send a message to a monitoring channel.

- Include:

- The error message or stack trace

- The original webhook payload (or a safe subset) so you can reproduce the issue

This gives you immediate visibility when OpenAI, Pinecone, or any other part of the workflow encounters a problem.

Example: Webhook payload and RAG output

Here is a sample survey payload that might be sent to the Webhook node:

{ "respondent_id": "abc123", "timestamp": "2025-09-05T12:34:56Z", "answers": { "q1": "I love the mobile app but the login flow is confusing sometimes.", "q2": "Customer service was helpful but slow to respond." }, "source": "typeform"

}

After passing through embeddings, Pinecone, and the RAG agent, the output written to Google Sheets could look like this (for example in a single cell or structured across columns):

Summary: Positive feedback on mobile app UX; pain point: login flow.

Sentiment: mixed-positive.

Themes: UX, Authentication, Support response time.

Actions: Simplify login steps; improve SLA for support.You can adapt the format to your reporting needs, but the idea is always the same: turn raw text into a concise, actionable summary.

Best practices for this n8n survey analysis workflow

Chunking and retrieval quality

- Use chunking with overlap for better semantic retrieval.

- Good starting values:

- chunkSize: 300 to 500 characters

- chunkOverlap: 20 to 60 characters

- Experiment with these values if you notice poor matches from Pinecone.

Metadata in Pinecone

- Store useful metadata for each vector:

question_idrespondent_idtimestampsourceor survey name

- This enables filtered queries, audits, and more targeted analysis later.

Balancing cost and quality

- Use a smaller embedding model like

text-embedding-3-smallto keep indexing costs low. - If you need higher quality analysis, invest in a more capable LLM for the RAG agent while keeping embeddings lightweight.

- Monitor usage of:

- OpenAI embeddings

- Pinecone storage and queries

- LLM tokens

- Batch inserts into Pinecone where possible to reduce overhead.

Reliability and rate limits

- Implement rate limiting and retry logic for calls to OpenAI and Pinecone, either via n8n settings or at your webhook ingress.

- Use the Slack error alerts to quickly identify and resolve transient issues.

Privacy and PII handling

- Handle sensitive personal data in accordance with your privacy policy.

- Consider:

- Hashing identifiers like

respondent_id - Redacting names, emails, or other PII before generating embeddings

- Hashing identifiers like

Testing and troubleshooting your n8n workflow

Once your pipeline is configured, spend time testing it with different types of input:

- Short answers to confirm basic behavior.

- Very long responses to validate chunking and token limits.

- Non-English text to see how embeddings and the LLM handle multilingual input.

Use n8n’s execution log to inspect what each node receives and outputs. This is especially useful for:

- Confirming that chunking is working as expected.

- Checking that embeddings are being created and stored in Pinecone.

- Verifying that Pinecone Query returns relevant neighbors.

- Debugging the RAG agent’s prompt and output format.

If you see poor retrieval or strange answers from the agent:

- Adjust chunkSize and chunkOverlap.

- Tune topK in the Pinecone Query node.

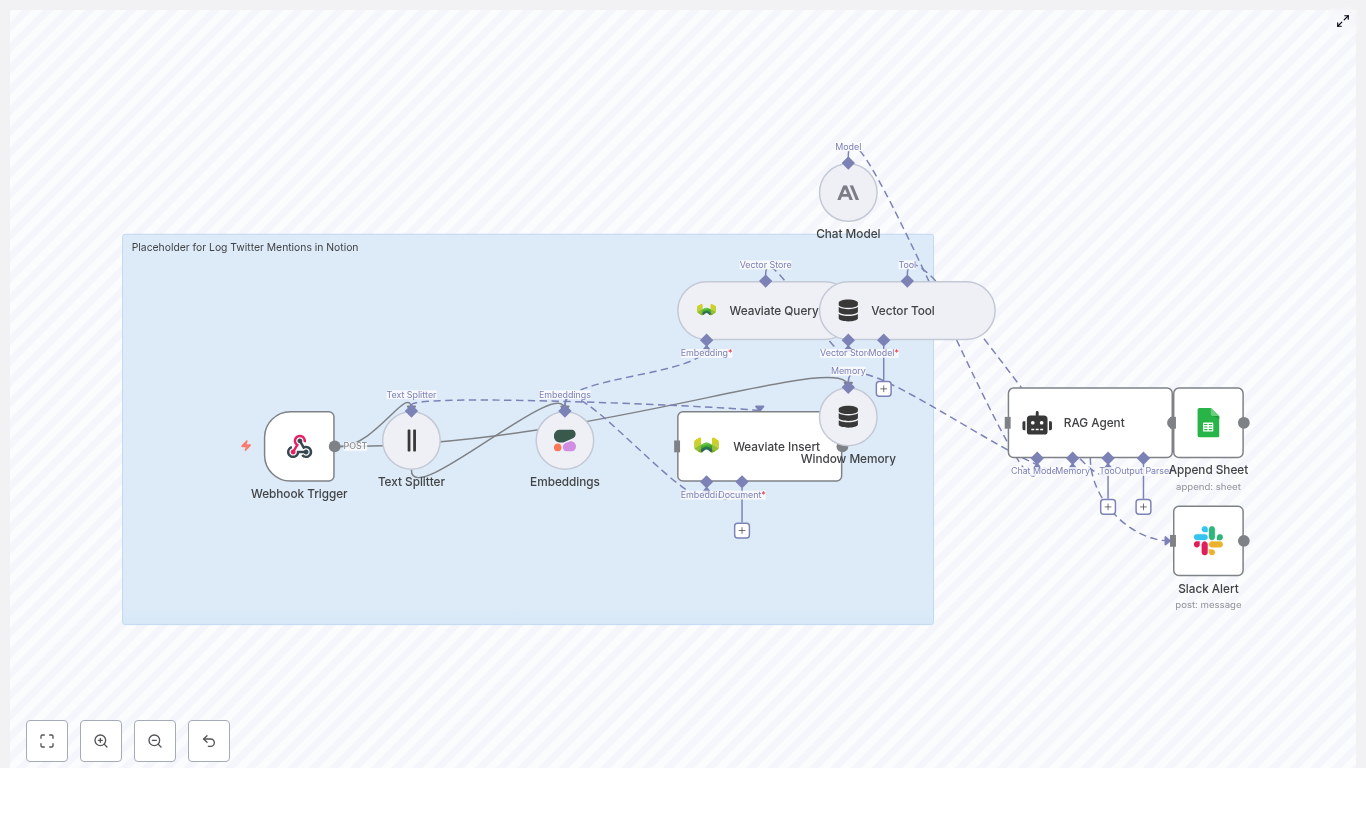

- Receives Twitter mention events through a Webhook Trigger node.

- Splits tweet content into embedding-ready chunks using a Text Splitter node.

- Generates semantic vector embeddings via Cohere.

- Stores and retrieves vectors in Weaviate for context-aware retrieval.

- Uses a Chat Model and RAG Agent to summarize, classify, or interpret each mention.

- Appends structured results to Google Sheets by default, with the option to swap in Notion.

- Sends Slack alerts if any node in the execution fails.

- Webhook Trigger – Entry point that receives POST requests from your Twitter-to-webhook bridge.

- Text Splitter – Preprocessing step that segments long tweets or threads into manageable text chunks for embedding.

- Embeddings (Cohere) – Generates vector representations of text for semantic search and retrieval.

- Weaviate Insert / Query – Vector database operations for storing and retrieving embedding vectors and associated metadata.

- Window Memory – Provides short-term conversational context to the RAG agent.

- Chat Model (Anthropic) + RAG Agent – LLM-based reasoning layer that interprets the mention and produces structured output.

- Append Sheet (Google Sheets) – Default persistence layer that logs processed mentions as rows.

- Slack Alert – Error notification path that posts failures to a Slack channel.

- Twitter sends a JSON payload to the n8n webhook URL.

- The Webhook Trigger passes the payload to the Text Splitter.

- Split segments are sent to the Cohere Embeddings node.

- Generated vectors and metadata are inserted into a Weaviate index.

- A Weaviate Query retrieves relevant context vectors for the current mention.

- The retrieved context is passed into a Vector Tool and then into the RAG Agent, which uses a Chat Model with Window Memory.

- The RAG Agent outputs a structured interpretation (for example, status, type, summary) that is sent to the Append Sheet node or a Notion node.

- If any node fails, the onError path triggers the Slack Alert node to notify a specified Slack channel.

- An n8n instance (self-hosted or n8n.cloud).

- A Twitter mention forwarding mechanism:

- Twitter API v2 filtered stream, or

- A third-party service that can POST mentions to a webhook.

- Cohere API key for embeddings.

- Weaviate instance (cloud or self-hosted) for vector storage.

- Anthropic API key (or another supported chat LLM) for the RAG agent.

- Google account with access to a Google Sheet, or a Notion integration if you prefer Notion as the final store.

- Slack workspace and bot token for error notifications.

- Restrict access to your n8n instance and webhook URLs.

- For public-facing webhooks, configure a shared secret or signature validation and verify the payload before processing.

- Review data retention and PII handling policies for user-generated content (tweets, authors, etc.).

- Attach credentials to each external-service node.

- Adjust index names, sheet IDs, and paths as needed.

- Webhook Trigger

- HTTP Method:

POST - Path: for example

log-twitter-mentions-in-notion

- HTTP Method:

- Embeddings (Cohere)

- Credential: your Cohere API key.

- Model: template uses

embed-english-v3.0(adjust only if you know the implications for vector dimension and compatibility).

- Weaviate Insert / Query

- Credential: your Weaviate instance configuration.

- Index name: template uses

log_twitter_mentions_in_notion.

- Chat Model

- Credential: Anthropic (or alternative LLM provider supported by your n8n installation).

- Append Sheet (Google Sheets)

- Credential: Google account with access to the target spreadsheet.

- Document ID: your Google Sheets document ID.

- Sheet name: target sheet tab name.

- Slack Alert

- Credential: Slack bot token.

- Channel: template uses

#alertsby default.

- Method:

POST - Path:

log-twitter-mentions-in-notion - Prevent hitting token limits for the embedding model.

- Preserve enough context by tuning chunk size and overlap.

- Credential: Cohere API key configured in n8n.

- Model:

embed-english-v3.0(as used in the template). - Do not change the model without verifying vector dimension compatibility with your existing Weaviate schema.

- Check that the text passed to this node is not unintentionally truncated or empty, as this would produce low-quality or useless embeddings.

- Credential: Weaviate instance configuration (URL, API key if required).

- Index name:

log_twitter_mentions_in_notionin the template. - Query by similarity using the current tweet text embedding.

- Retrieve related mentions or historical context that can help the RAG agent interpret the new mention.

- Provide the Chat Model with limited conversational state.

- Support consistent classification or summarization across related mentions.

- The current tweet content.

- Context retrieved from Weaviate via the Vector Tool.

- Window Memory context.

Status: Follow-Up RequiredMention Type: QuestionSummary: ...- Document ID: ID of the target spreadsheet.

- Sheet name: Name of the worksheet tab where rows should be appended.

- Tweet ID

- Author

- Full Text

- Status

- Mention Type

- Summary

- Timestamp

- Channel: template uses

#alerts. tweet_idauthor- Error message or node name

tweet_idauthortexttimestamp- Weaviate’s vector dimension matches the new model.

- Existing data in Weaviate is compatible with the new embeddings.

- Set the

indexNametolog_twitter_mentions_in_notionor your preferred index name. - Ensure your Weaviate instance is reachable from n8n (network, credentials, TLS, etc.).

- Use Weaviate Cloud for a managed deployment, or

- Run Weaviate locally via Docker for testing.

- Status (for example, Follow-Up Required, Done, Ignore).

- Mention Type (for example, Question, Feedback, Bug Report, Praise).

- Short summary of the mention.

- Current tweet text.

- Context from Weaviate Query (similar past mentions or related content).

- Window Memory state.

- Strengthening the system prompt.

- Providing explicit examples of input and desired output in the prompt.

- Adjusting Weaviate Query parameters

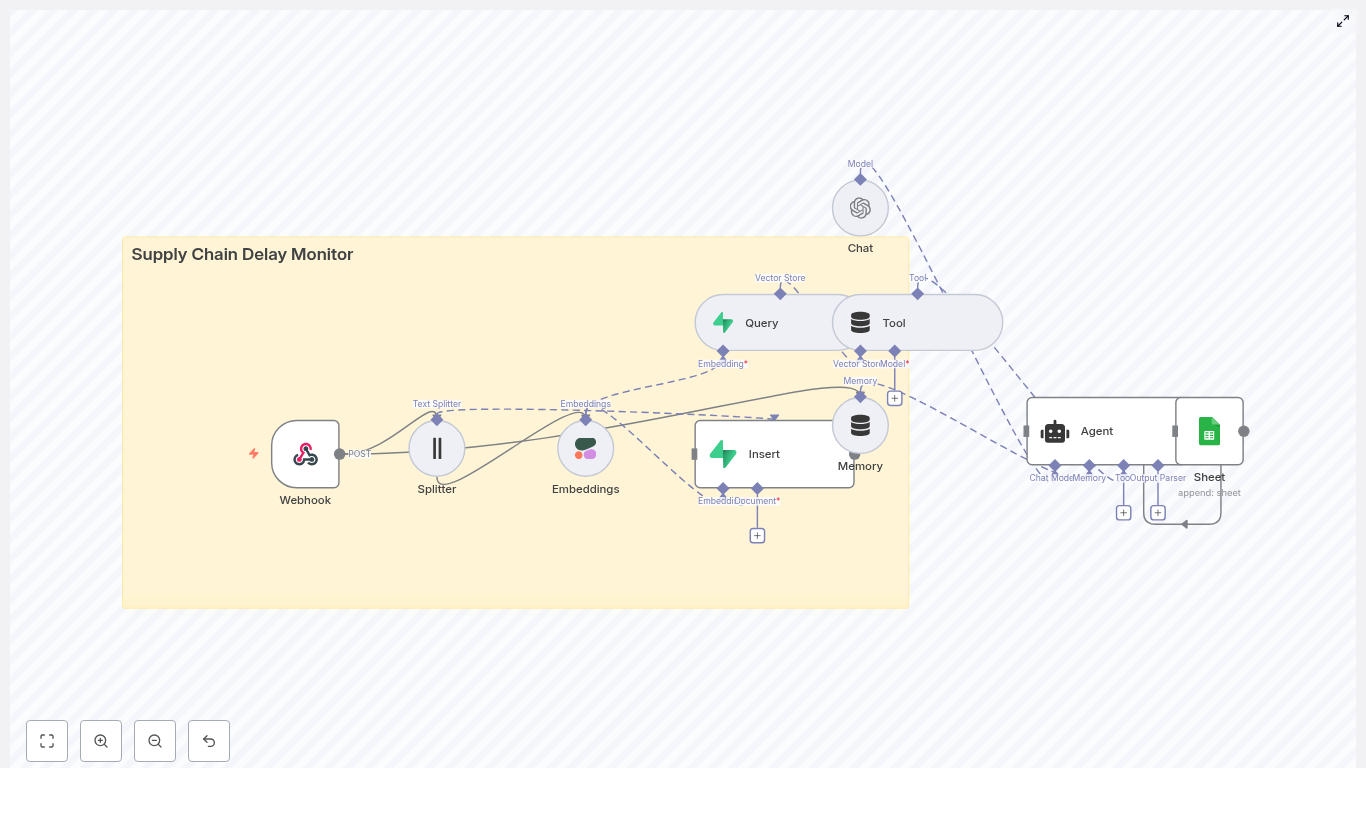

- Receives real-time supply chain events via a Webhook node.

- Splits unstructured messages into embedding-ready chunks.

- Generates vector embeddings using an embeddings provider such as Cohere.

- Persists embeddings and metadata in a vector store backed by Supabase.

- Queries historical incidents for semantic similarity and context.

- Uses an AI Agent powered by OpenAI (or another LLM) to analyze and classify the event.

- Writes a structured log entry to Google Sheets for tracking and follow-up.

- Automated detection and prioritization of shipment delays and exceptions.

- Pattern analysis across historical incidents using semantic search.

- Generating recommended remediation actions for operations teams.

- Creating a searchable audit trail in a spreadsheet-based log.

- n8n – Orchestration, node execution, and workflow logic.

- Cohere (or equivalent) – Embeddings provider for converting text into vectors.

- Supabase – Vector database and storage layer for embeddings and metadata.

- OpenAI (or another LLM) – Language model behind the AI Agent for reasoning and classification.

- Google Sheets – Operational log and simple reporting surface.

- Webhook receives POST events from TMS/EDI or tracking APIs.

- Splitter divides long carrier messages or reports into chunks.

- Embeddings node generates a vector for each text chunk.

- Insert + Vector Store (Supabase) persists vectors with shipment metadata.

- Query searches the vector index for similar historical events.

- Tool + Memory prepare contextual data and maintain short-term state for the Agent.

- Chat/Agent uses an LLM to classify severity, suggest actions, and summarize.

- Google Sheets appends a structured record of the analysis.

- HTTP Method:

POST - Authentication: Depending on your environment, use a token, header-based auth, or IP restrictions.

- Expected payload fields:

shipment_idtimestamplocationorcheckpointstatus(e.g., delayed, in transit, delivered)notesor other unstructured text (carrier messages, exception descriptions)

- Use authentication tokens or signed payloads to prevent spoofed events.

- Optionally validate a known header or secret before processing.

- Restrict the Webhook URL using IP allowlists where possible.

- Chunk size: ~400 characters.

- Overlap: ~40 characters between adjacent chunks.

- Preserves local context across chunks through overlap.

- Keeps each unit within common embedding model limits.

- Improves recall when querying for similar incidents, since each chunk can be matched independently.

- Very short messages may pass through unchanged with a single chunk.

- If the payload is missing or empty, consider adding a basic check or guard node before embedding to avoid unnecessary API calls.

- Set the embeddings model according to your provider’s recommended model for semantic search.

- Map the chunked text field from the Splitter node as the input text.

- Ensure the Cohere (or other provider) API key is stored as an n8n credential and not hardcoded.

- Monitor for rate limit or transient network errors and configure retries where appropriate.

- For failed embedding calls, you may choose to skip the record, log the failure, or route it to a separate error-handling branch.

- Index name: Use a dedicated index such as

supply_chain_delay_monitorto keep this use case isolated. - Vector field: Map the embeddings output vector to the appropriate column or field expected by your Supabase vector extension.

- Metadata: Persist fields like:

shipment_idcarrier(if available)locationtimestampstatus- Original text chunk or a reference to it

- Fast nearest-neighbor queries for similar incidents.

- Rich filtering using structured metadata (for example filter by carrier, lane, or time window).

- Use meaningful index names so you can manage multiple workflows or use cases in the same Supabase project.

- Periodically prune outdated or low-value embeddings to control storage and query cost.

- Use the newly generated embedding as the query vector.

- Target the same index used for insertion, for example

supply_chain_delay_monitor. - Optionally apply filters based on metadata (for example same carrier or similar route) if your Supabase setup supports it.

- Limit the number of neighbors returned to a manageable number for the LLM (for example top N matches).

- Wraps the query results in a format that the Agent node can consume as an external “tool” or data source.

- Provides the LLM with summarized context about similar historical incidents, including prior classifications or remediation steps if you store them.

- Stores recent interactions and Agent outputs so subsequent events can reference them.

- Helps avoid repetitive or redundant actions when multiple similar alerts arrive in a short timeframe.

- Use a buffer or similar memory strategy compatible with the Agent node.

- Limit memory size to avoid unnecessary token usage when interacting with the LLM.

- Classify delay severity, for example:

- Minor delay

- Moderate issue

- Critical disruption

- Identify likely root causes based on historical similarity.

- Recommend next steps, such as:

- Contact carrier

- Expedite alternate routing

- Escalate to supplier management

- Generate a concise, structured summary suitable for logging.

- Define explicit classification labels and severity thresholds.

- Specify what the Agent should output (for example JSON with fields like

severity,recommended_action,summary). - Clarify how to use tool results from the vector store and how to interpret memory content.

- Respect the LLM provider’s rate limits, especially for high-volume event streams.

- Consider a fallback strategy, such as default classifications or delayed retries, when the LLM is unavailable.

- Shipment ID

- Timestamp

- Location

- Status

- Severity classification

- Recommended action

- Short rationale or summary

- Use an n8n Google Sheets credential to securely connect to your spreadsheet.

- Configure the node to append a new row for each processed event.

- Ensure column ordering in Sheets matches the mapping in the node configuration.

- Use tokens, signed payloads, or custom headers to validate request origin.

- Combine network-level controls (IP whitelisting) with application-level checks where possible.

- Log incoming requests with minimal identifying information, such as a payload hash, to support debugging without exposing full PII.

- Start with 300 to 500 characters per chunk with a small overlap (for example 40 characters) to balance context and cost.

- Adjust chunk size based on the typical length and complexity of carrier messages in your environment.

- Batch embedding calls where possible to reduce API overhead and respect provider rate limits.

- Always store shipment-level identifiers and timestamps alongside vectors to enable precise filtering.

- Include carrier, route, or lane information if available to support more targeted similarity queries.

- Use consistent naming conventions for metadata fields to simplify future queries and analytics.

- Use descriptive index names such as

supply_chain_delay_monitorfor clarity. - Periodically remove stale or low-value records to keep query performance predictable and costs controlled.

- Consider segmenting indexes by business unit or region if you anticipate very large volumes.

- Define a clear schema for outputs, including severity levels and action templates.

- Document how severity maps to operational processes (for example critical events trigger paging, minor events are logged only).

- Iteratively refine the prompt based on real-world outputs and feedback from operations teams.

- Monitor usage against Cohere and OpenAI quotas to avoid service interruptions.

- Implement batching where compatible with your event latency requirements to reduce per-event overhead.

- For extremely high volumes, consider sampling or prioritization strategies so the most critical events are analyzed first.

- Automated exception classification: Ingest carrier EDI exceptions and categorize them by severity, enabling teams to focus on critical incidents first.

- Recurring delay detection: Identify patterns such as specific suppliers, routes, or ports that repeatedly cause delays by querying similar historical incidents.

- Remediation guidance: Generate recommended next steps and optionally feed them into downstream ticketing or case management systems.

- Reporting and audits: Use the Google Sheets log as a lightweight source of truth for weekly dashboards and leadership reviews.

- Ensure data is encrypted in transit and at rest. Supabase provides encryption capabilities; configure TLS for all external calls.

- Mask or hash customer-identifying fields before storing them in embeddings or logs if PII constraints apply.

- Carefully review what fields are included in text passed to the LLM, especially if you handle sensitive customer or shipment data.

- Store all provider keys (Cohere, OpenAI, Supabase, Google Sheets) as environment-level secrets in n8n.

- Limit who can access n8n credentials and audit changes to workflow configuration.

- Track embedding and LLM usage over time to understand cost drivers.

- Use caching strategies for repeated, similar queries where appropriate.

- Optimize chunk sizes and reduce unnecessary calls for low-value events.

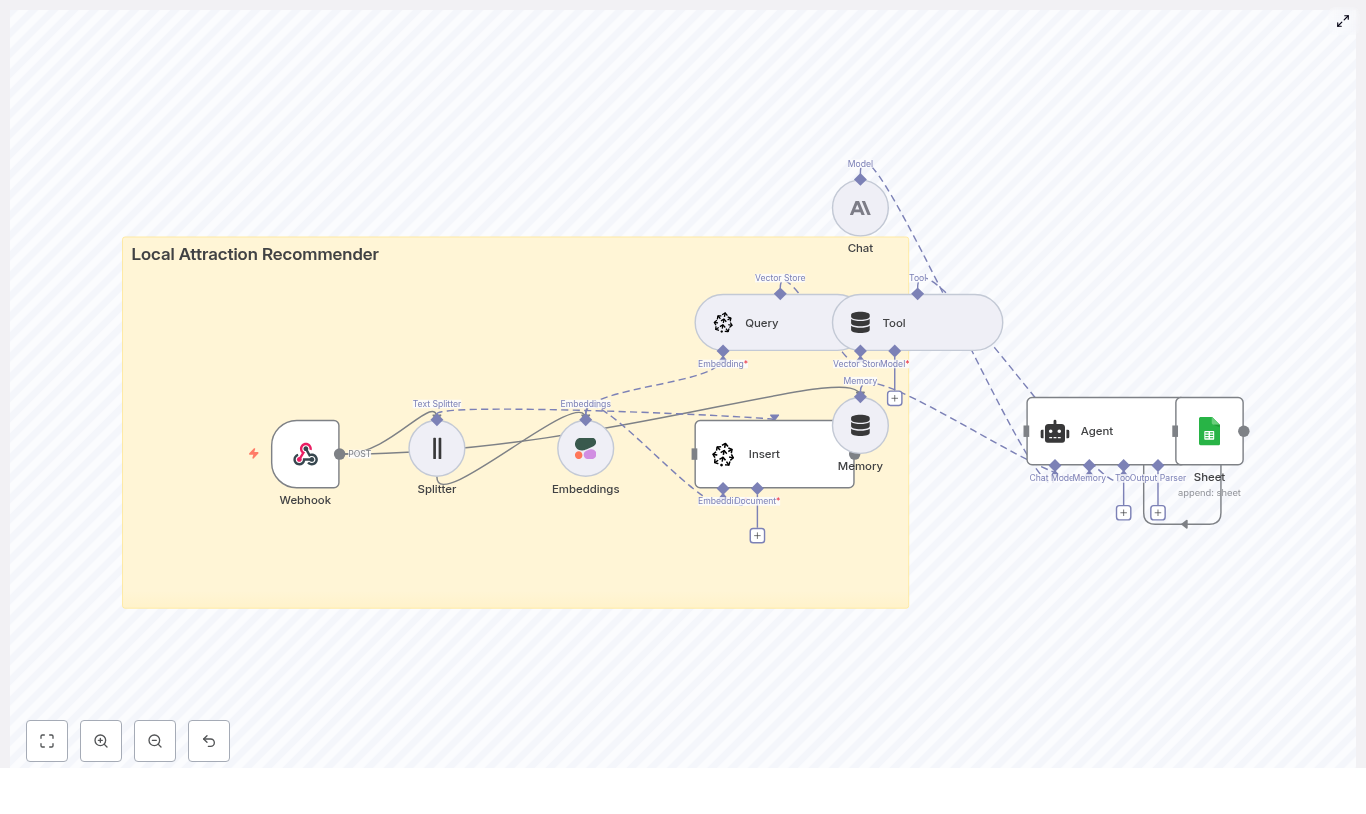

- Ingests attraction data (like parks, cafes, museums)

- Turns that text into embeddings with Cohere

- Stores and searches vectors in Pinecone

- Uses an Anthropic-powered agent to respond to users

- Logs everything neatly into Google Sheets

- Attraction data to index, or

- A user query asking for recommendations

- A city guide for tourists

- A “things to do nearby” feature for your app

- A concierge-style chatbot for hotels or coworking spaces

- Semantic vector search finds attractions that are meaningfully similar to what the user describes, not just keyword matches.

- Managed services like Cohere, Pinecone, and Anthropic handle the hard ML problems so you can focus on your data and experience.

- n8n as the glue keeps everything transparent and easy to tweak, with each step visible as a node.

- Google Sheets logging gives you a simple, accessible audit trail for analytics and improvements.

- Webhook – receives incoming POST requests with either data to index or user queries.

- Splitter – breaks long attraction descriptions into smaller chunks.

- Embeddings (Cohere) – converts text chunks into vectors.

- Insert (Pinecone) – stores those vectors in a Pinecone index.

- Query (Pinecone) + Tool – retrieves similar attractions for a user query.

- Memory – keeps short-term conversation state for multi-turn chats.

- Chat (Anthropic) + Agent – turns raw results into a friendly, structured answer.

- Sheet (Google Sheets) – logs each interaction for monitoring and analysis.

type: "index"– add or update attraction data in the vector store.type: "query"– ask for recommendations.- Attraction name

- Description

- Address or neighborhood

- Tags (for filtering and metadata)

- Chunk size: about 400 characters

- Overlap: around 40 characters

- Configure the chosen Cohere embedding model in the node settings.

- Store your Cohere API key in n8n credentials and link it to the node.

- The embedding vector itself

- A unique ID

- Metadata such as:

- Attraction name

- Neighborhood or city

- Tags (e.g. “family-friendly”, “outdoor”, “museum”)

- A text snippet or full description for context

- Uses the same Cohere embedding model to embed the query text.

- Sends that query vector to Pinecone.

- Retrieves the top K nearest neighbors from the index.

- Recent messages and responses

- The user ID

- A short interaction history

- The Anthropic chat model

- The vector store tool (Pinecone results)

- The buffer memory

- Why each place fits the user’s request

- Helpful details like distance or neighborhood

- Opening hours or other practical notes, if present in your data

user_id- Original query text

- Top retrieved results

- Timestamp

- Agent output

- Review relevance over time

- Spot patterns in user behavior

- Iterate on prompts, ranking, or index content

- Spin up n8n

Use either n8n Cloud or a self-hosted instance, depending on your infrastructure preferences. - Add your credentials

In n8n, configure credentials for:- Cohere API key

- Pinecone API key and environment

- Anthropic API key

- Google Sheets OAuth credentials

- Import the workflow template

Bring the template into your n8n instance and connect each node to the appropriate credential you just created. - Configure and test the Webhook

Set the Webhook path to/local_attraction_recommender(or your preferred path) and test it with a tool like curl or Postman. - Index a sample dataset

Start small with a CSV or JSON list of attractions. Send POST requests withtype: "index"and include fields like:namedescriptiontags

This will populate your Pinecone index.

- Send some queries

Once you have data indexed, issue POST requests withtype: "query"and confirm that the agent returns relevant, well-explained suggestions. - User preferences and constraints

For example: budget, accessibility needs, indoor vs outdoor, family-friendly, etc.

Recent choices, likes or dislikes, and previous feedback.- A structured output format

This makes it easier to display results in your app or website. - Neighborhoods or cities

- Activity types (outdoor, nightlife, family, culture)

- User personas (tourist, local, family, solo traveler)

- Click-through or follow-up rate

Are users actually visiting the suggested places or asking for more details? - Relevance scores

Manually review and label some suggestions as “good” or “off” to see where to improve. - Latency

Measure end-to-end response time so you know if the experience feels snappy enough. - Pinecone

Storage and query costs grow with:- Number of indexed attractions

- Query volume (QPS)

Plan your index size and query patterns accordingly.

- Cohere embeddings

You are billed per embedding call, so:- Batch embeddings when indexing large datasets.

- Avoid re-embedding unchanged text.

- Anthropic chat

Chat calls can be your biggest variable cost if you have many conversational sessions. You can:- Cache responses for common queries.

- Use simpler heuristic responses for very basic questions.

- Security

- Store all API keys in n8n credentials, never hard-code them.

- Protect the Webhook with an auth token or signed headers.

- Irrelevant results?

Confirm that the same Cohere embedding model is used for both:- Indexing attractions

- Embedding user queries

- Weak filtering or odd matches?

Inspect your metadata. Missing or inconsistent tags, neighborhoods, or location fields make it harder to filter and rank results properly. - Slow responses?

Monitor Pinecone index health and query latency in your deployment region, and check whether you are adding unnecessary steps in the workflow. - Add personalized ranking based on user history or explicit thumbs-up / thumbs-down feedback.

- Enrich attractions with photos, ratings, or external data using APIs like Google Maps or Yelp.

- Build a lightweight frontend (a static site, chatbot widget, or mobile app) that simply calls the Webhook endpoint.

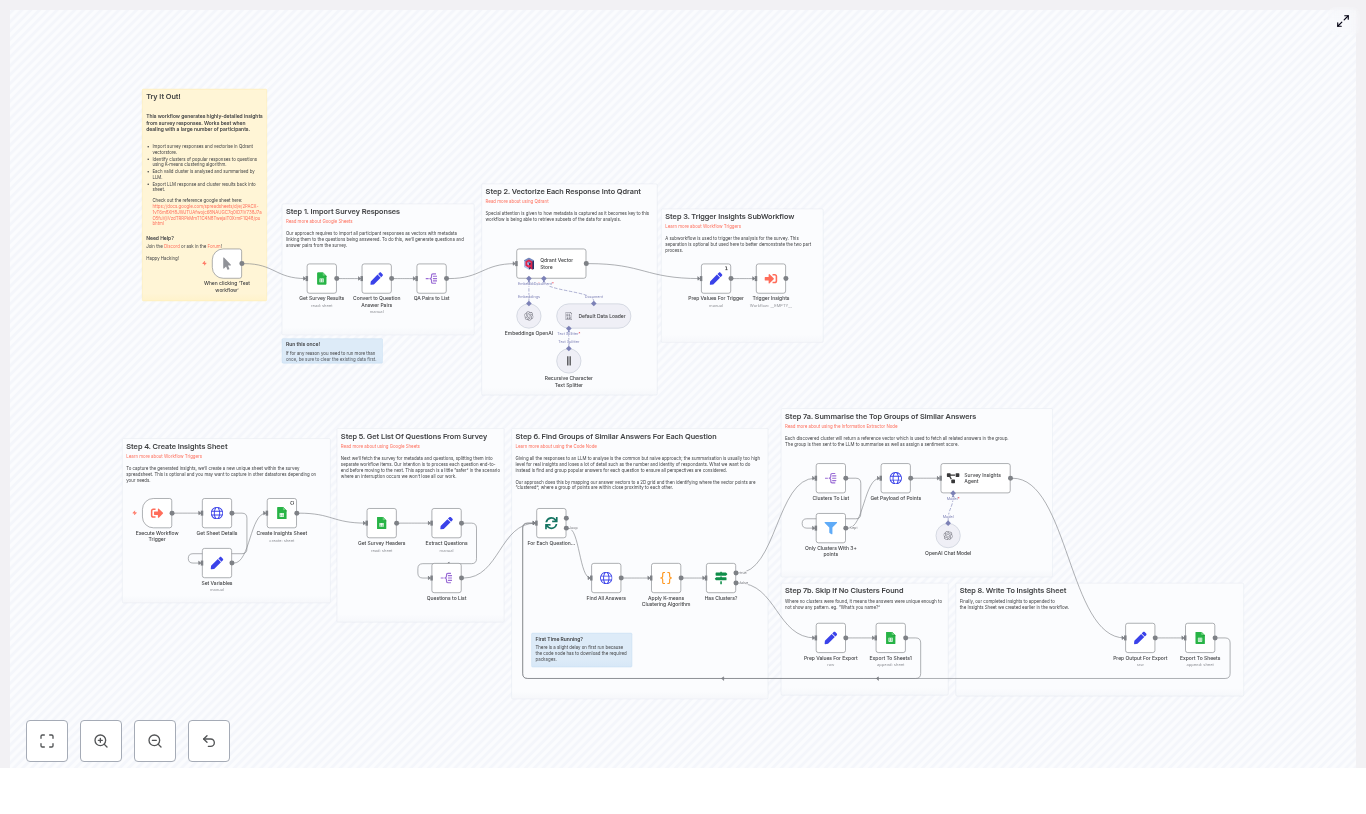

- OpenAI embeddings

- Qdrant as a vector database

- K-means clustering to group similar answers

- An LLM summarizer to generate insights and sentiment

- Prepare survey responses in n8n as question-answer pairs with metadata

- Generate OpenAI embeddings and store them in Qdrant for fast, flexible querying

- Run K-means clustering on similar answers to each question

- Use an LLM to summarize clusters into clear insights with sentiment labels

- Export all results back into Google Sheets for review and reporting

- Tune and troubleshoot the workflow for large surveys and better quality outputs

- Nuance between different types of answers

- Visibility into how many people share each opinion

- Traceability back to specific participants and their responses

- Vectorizing each individual answer with OpenAI embeddings so semantic similarity is measured accurately.

- Storing embeddings in Qdrant along with metadata such as question text, participant ID, and survey name.

- Clustering similar answers with K-means, then summarizing each cluster separately using an LLM.

- Exporting structured insights to Google Sheets (or another datastore) with counts, IDs, and raw answers for auditability.

- Each survey answer is converted into an embedding using an OpenAI model, such as

text-embedding-3-small. - These vectors are stored in Qdrant so that similar answers can be compared and grouped.

- The embedding vector for each answer

- Metadata such as the question text, participant ID, and survey name

- A Python-based node runs K-means on the embeddings for one question.

- You set a maximum number of clusters (for example, up to 10).

- Each cluster represents a common theme or viewpoint among the answers.

- Summarize the responses in a short paragraph

- Explain the key insight or takeaway from that cluster

- Assign a sentiment label such as negative, mildly negative, neutral, mildly positive, or positive

- Each row in the sheet represents one participant.

- Each column represents either a question or an identifier (for example, participant ID).

- Each answer becomes an independent item to be embedded.

- Metadata like

participantandsurveycan be attached consistently. - Downstream nodes can process all answers in the same way, regardless of the original column layout.

- The answer text is sent to the OpenAI embedding model, for example

text-embedding-3-small. - The resulting vector is stored in Qdrant with metadata fields such as:

question– the exact question textparticipant– participant ID or identifiersurvey– survey name or ID

- Ingestion workflow – reads from Google Sheets, creates embeddings, and writes to Qdrant.

- Insights subworkflow – performs clustering and summarization on top of the stored data.

- The sheet name includes a timestamp so you can track when the analysis was run.

- All generated insights are appended to this sheet.

- Look for header text that contains a question mark.

- Ignore ID or metadata columns that are not survey questions.

- Fetch embeddings for that question from Qdrant.

- Cluster those embeddings.

- Filter and summarize clusters.

- Write the resulting insights to the Insights sheet.

- The workflow queries Qdrant for all vectors where

metadata.questionequals the current question text. - The resulting vectors are passed to a K-means implementation in a Python node (or similar custom code node).

- You configure:

- Max number of clusters, for example up to 10.

- Other K-means parameters as needed.

- The workflow filters out clusters that have fewer than a minimum number of points. The template uses a default minimum cluster size of 3.

- The workflow fetches all associated payloads, including:

- Answer text

- Participant IDs

- These are sent to an information extractor node that uses an LLM to:

- Summarize the grouped responses in a short paragraph.

- Generate a clear insight that explains what these answers tell you.

- Assign a sentiment label such as:

- negative

- mildly negative

- neutral

- mildly positive

- positive

- Question – the original survey question text

- Insight summary – the LLM generated explanation

- Sentiment – one of the predefined labels

- Number of responses in the cluster

- Participant IDs – usually stored as a comma-separated list

- Raw responses – the original text answers used to generate the insight

- What people are saying

- How they feel about it

- How many participants share that view

- Which specific participants are included, if you need to investigate further

text-embedding-3-smallis a good default. It balances cost and quality for most survey analysis use cases.- If you need higher semantic accuracy and can accept higher cost, consider

text-embedding-3-large. - If you set the number too high, similar answers may be split into many small clusters.

- If you set it too low, distinct themes may be merged together.

- Adjust based on how many responses you have per question.

- Use n8n Split in Batches or similar nodes for ingestion.

- Batch requests to OpenAI to avoid rate limits and memory issues.

- Monitor throughput between n8n, Qdrant, and OpenAI APIs.

- Mask or hash participant identifiers before sharing the sheet externally.

- Review which metadata fields are included in the exported results.

- OpenAI embeddings for every answer

- LLM calls for summarizing each valid cluster

- Cache embeddings and summaries where possible so you do not recompute them unnecessarily.

- Batch requests to improve efficiency.

- Start with a small dataset to estimate cost per survey before scaling up.

- Split a very large spreadsheet into smaller sheets.

- Use incremental updates that only ingest new or changed rows.

- Check that your n8n credentials have sufficient API quotas for Google Sheets, Qdrant, and OpenAI.

- Reduce the maximum number of clusters in the K-means node.

- Increase the minimum cluster size threshold.

- Pre-filter very short or low-signal answers (for example, single-word responses) before generating embeddings.

- Improve the system prompt used in the Information Extractor or LLM node.

- Add a few-shot example to show the LLM what a good output looks like.

- Keep the prompt focused on the fields you need:

- Concise summary

- Actionable insight

- Clear sentiment label from the defined set

- Auditability – You can always trace an insight back to the exact responses that created it.

- Transparency – Stakeholders can see representative quotes and counts, which builds trust in the automated analysis.

- Flexibility – You can re-check or re-cluster specific subsets of participants if needed.

- Which participants were included

- How many responses contributed

- What those participants actually wrote

- Import the n8n workflow template into your n8n instance.

- Configure credentials for:

- Google Sheets

- OpenAI

- Qdrant

- Tune the settings:

- Embedding model choice

- Maximum number of clusters

- Minimum cluster size

- LLM prompt and output format

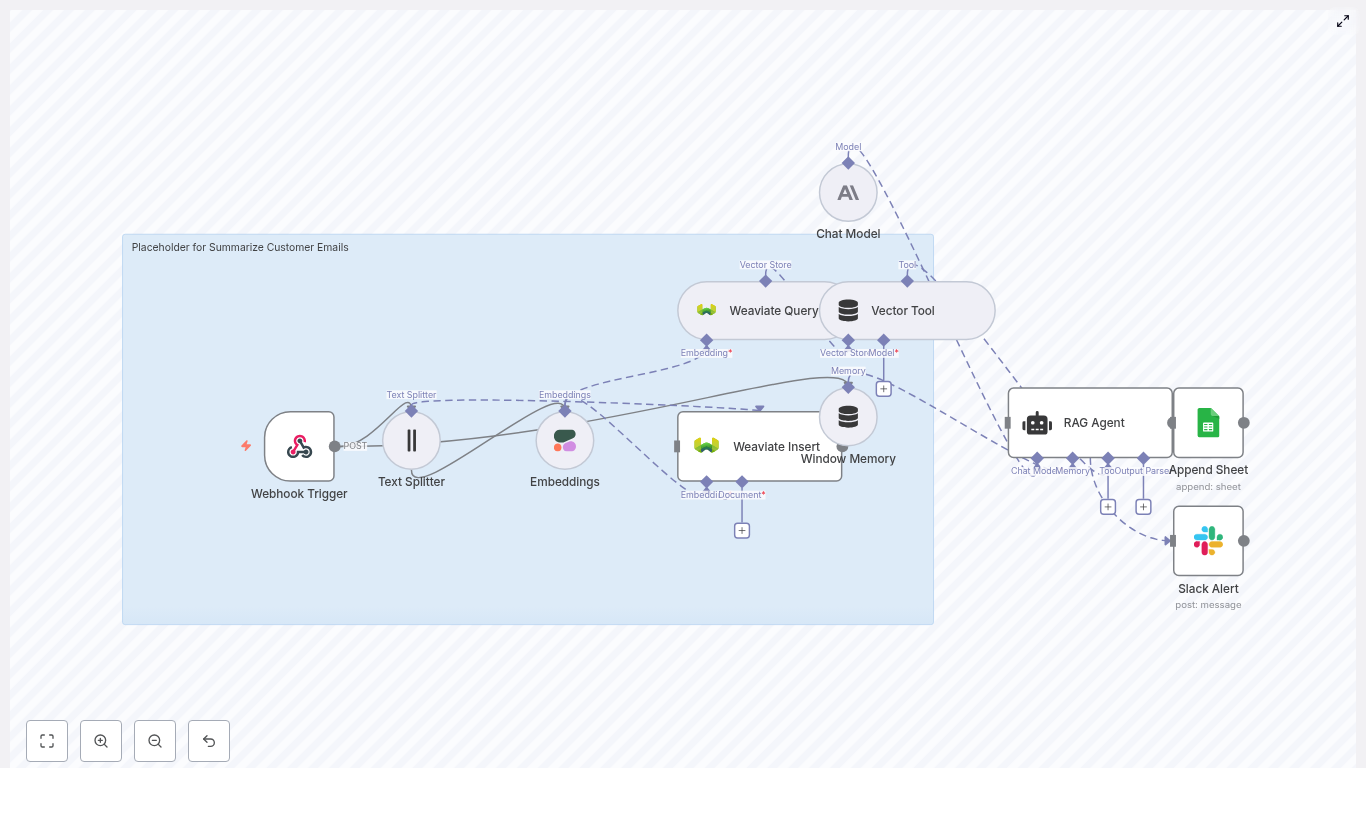

- n8n – central orchestration layer with webhook triggers, error handling, and node-based logic.

- Text splitting – prepares long emails for embedding and retrieval by chunking them into manageable segments.

- Cohere embeddings – converts text chunks into high-quality vector representations.

- Weaviate – vector database used for semantic storage and retrieval of email content.

- Anthropic / Chat model – RAG agent that uses retrieved context and short-term memory to produce summaries.

- Google Sheets & Slack – lightweight observability stack for logging, review, and alerting.

- Webhook Trigger – receives inbound customer emails via HTTP POST.

- Text Splitter – segments the email body into overlapping chunks.

- Embeddings (Cohere) – generates vector embeddings for each chunk.

- Weaviate Insert – writes embedded chunks and metadata into a Weaviate index (

summarize_customer_emails). - Weaviate Query + Vector Tool – retrieves relevant chunks for the current summarization task.

- Window Memory – maintains short-term context across related messages.

- Chat Model + RAG Agent – composes the final summary and suggested response.

- Append Sheet (Google Sheets) – records summary results and status.

- Slack Alert (onError) – notifies a Slack channel when any downstream step fails.

- Configure your email system to forward incoming messages to the n8n webhook URL.

- Validate requests using HMAC signatures, API keys, or IP allowlists to protect the endpoint from abuse.

- Normalize the email payload so that the workflow can reliably access fields such as subject, body, sender, and message ID.

chunkSize: 400 characterschunkOverlap: 40 characters- Attach your Cohere API credentials in n8n and store them securely in the credentials vault.

- Monitor embedding usage because this step often becomes the main cost driver at scale.

- Consider batching chunks where possible to reduce network overhead and improve throughput.

- Original email ID or message ID

- Customer identifier or email (hashed if sensitive)

- Timestamp of receipt

- Optional labels, sentiment, or priority tags

- Use the same embedding model for both indexing and querying to maintain vector space consistency.

- Tune the number of retrieved results (for example, top 5 hits) to control prompt size and cost.

- Leverage metadata filters to restrict retrieval to the relevant customer or conversation.

- Coherence across multi-message summaries.

- Continuity of action items and decisions over time.

- Latency, since not all prior messages need to be reprocessed.

- System instructions that define the assistant behavior.

- Retrieved context from Weaviate via the Vector Tool.

- Short-term context from the Window Memory node.

- Use a concise system message, for example: You are an assistant for Summarize Customer Emails.

- Set temperature and safety parameters to favor stable, factual output over creativity.

- Ensure the prompt explicitly instructs the model to rely only on retrieved context to minimize hallucinations.

- Subject summary – a short description of the customer issue or request.

- Action items – a numbered list of tasks or follow-ups required.

- Suggested response – draft reply text that an agent can quickly review and send.

- Constrain the subject summary length, for example 50 to 150 words, to keep outputs scannable.

- Ask the model to enumerate action items explicitly with bullet points or numbering.

- Include retrieved context as an append-only section in the prompt so the model grounds its output in actual email content.

- Email ID or conversation ID

- Generated summary text

- Status (success, failure, needs review)

- Timestamp of processing

- Optional notes or reviewer comments

- Error message and stack or diagnostic details.

- The affected email ID or key metadata for quick lookup.

- A link to the relevant Google Sheet row if available.

- Hash or redact personally identifiable information (PII) before storing content in Weaviate, especially for long-term retention.

- Use role-based access control (RBAC) and network restrictions for both n8n and Weaviate instances.

- Store all API keys and credentials in the n8n credentials vault and rotate them regularly.

- Limit access to Google Sheets logs or anonymize fields where appropriate to reduce exposure.

- Batch embeddings – group multiple chunks into a single Cohere request to improve throughput and reduce per-request overhead.

- Incremental ingestion – only embed and store new or changed segments instead of reprocessing entire threads.

- Vector store maintenance – periodically prune stale vectors from Weaviate and rebalance indices to keep retrieval fast.

- Context window tuning – adjust the number of retrieved hits and chunk sizes to manage token consumption in the chat model.

- Sampling and human review – regularly review a percentage of auto-generated summaries to validate clarity and correctness.

- Error tracking – use the Google Sheets status column and Slack onError alerts to monitor failure patterns.

- Latency metrics – track response times for embedding creation and Weaviate queries to detect performance regressions.

- Increase

chunkOverlapfor emails where critical context spans multiple chunks. - Enrich Weaviate metadata with structured fields such as labels, sentiment, or priority to improve retrieval precision.

- Experiment with alternative or domain-specific embedding models if your content is highly specialized.

- Import the n8n template into your environment.

- Configure credentials for Cohere, Weaviate, Anthropic, Google Sheets, and Slack.

- Set up email forwarding to the n8n webhook.

- Manually review the first 100 summaries, adjust chunking, retrieval parameters, and prompts, then roll out more broadly.

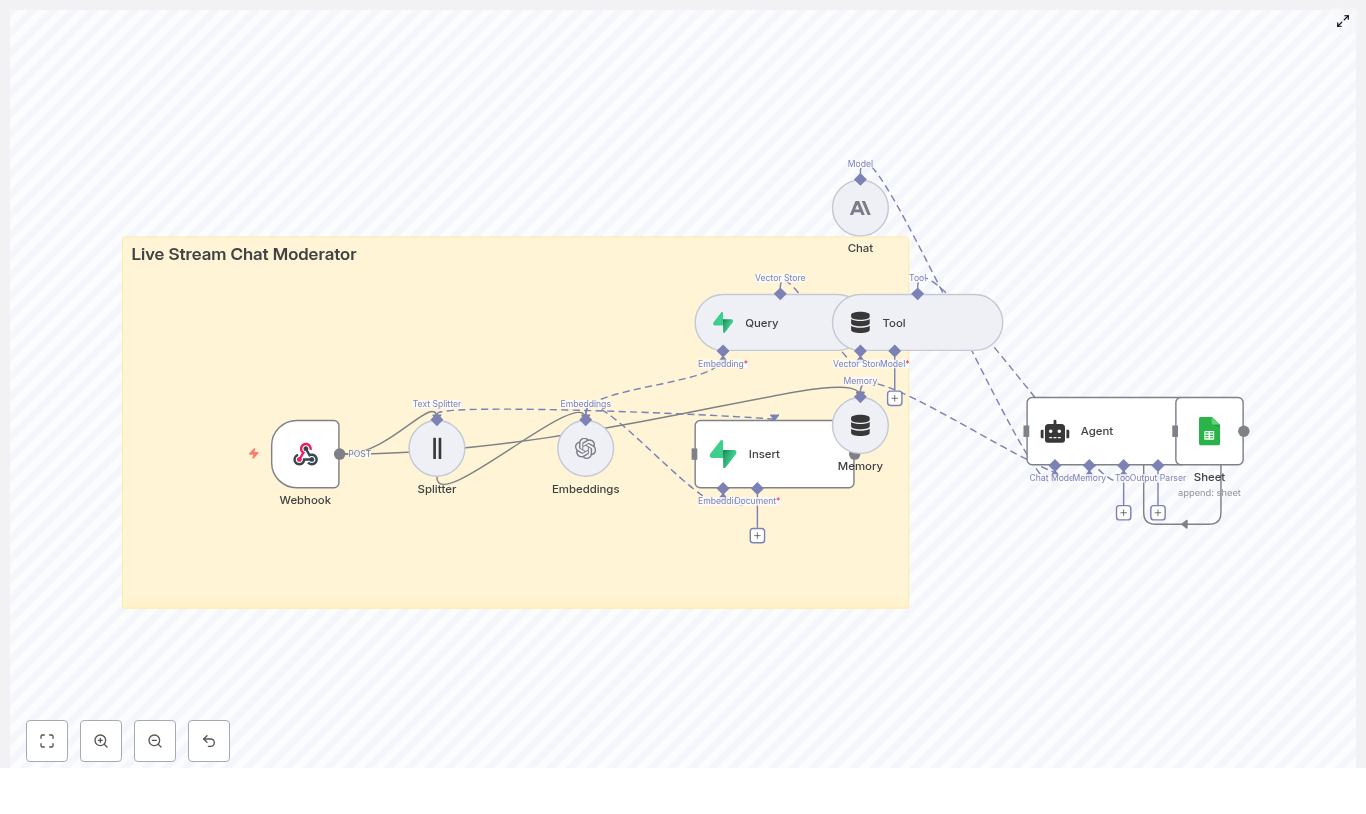

- React quickly to every message

- Understand context and user history

- Remember past incidents and patterns

- Provide an audit trail of decisions

- Delegate repetitive, high-volume decisions to a workflow

- Reserve human attention for edge cases and community care

- Turn your moderation process into something you can measure, refine, and scale

- n8n: visual workflow engine that accepts webhooks, routes data, and coordinates all steps

- Text Splitter & Embeddings: convert chat messages into vectors for semantic search and similarity matching

- Supabase Vector Store: fast, durable storage for embeddings and metadata using a vector database

- Anthropic (or other LLMs): conversational intelligence for classification, reasoning, and moderation decisions

- Google Sheets: simple, accessible audit log for transparency and human review

- Webhook – receives chat events (POST) from your streaming platform or bot

- Splitter – breaks long messages into smaller chunks for embedding and search

- Embeddings – calls OpenAI or another provider to create vector representations

- Insert – stores chunks and embeddings in a Supabase vector store

- Query – looks up similar past messages, rules, or patterns in the vector store

- Tool – exposes the vector store as a tool for the agent to call

- Memory – keeps short-term conversational context for recent chat events

- Chat – Anthropic LLM that analyzes the situation and suggests actions

- Agent – orchestrates tools, memory, and the LLM to produce the final moderation decision

- Sheet – appends the decision and context to a Google Sheet for logging and review

- message_id

- user_id and username

- channel

- timestamp

- moderation_label (if available)

- a short excerpt of the message

- The current message

- The Tool node, which gives access to the vector store

- The Memory node, which holds recent chat events

- The Chat node, powered by Anthropic or another LLM

- Ignore (no action)

- Warn (send a warning message)

- Delete message

- Timeout or ban (for more severe or repeated behavior)

- Flag for human review (and record everything for later inspection)

- The original message

- User and channel information

- The chosen moderation action

- A rationale or explanation from the LLM

- Nearest neighbor references from the vector store

- OpenAI or another embeddings provider key for the Embeddings node

- Supabase URL and service key for the vector store connection

- Anthropic API key for the Chat node, or credentials for your preferred LLM

- Google Sheets OAuth credentials for appending logs

- A secure webhook endpoint, ideally protected with a secret token or HMAC signature on incoming POST requests

- message_id

- user_id and username

- channel

- timestamp

- moderation_label

- a short text excerpt

- Filter by user or channel

- Search for similar incidents over time

- Build higher-level features like reputation scores or dashboards later

- Chunk size: 400 characters

- Chunk overlap: 40 characters

- Using conservative default actions like warnings or flags for review

- Escalating only the clearest or highest-risk cases to timeouts or bans

- Maintaining a clear, documented appeals process

- Reviewing the Google Sheets log regularly to catch misclassifications

- Batch embedding calls when you expect bursts of messages to reduce API overhead

- Use Supabase or another managed vector database with horizontal scaling, such as pgvector on Supabase clusters

- Cache recent queries in memory for ultra-low-latency checks, especially for repeated spam

- Monitor LLM latency and define fallback strategies, such as rules-based moderation when the model is slow or unavailable

- Automated user reputation scoring based on historical behavior in the vector store

- Deeper integration with your bot to post warnings, timeouts, or follow-up messages automatically

- A human moderation dashboard that reads from Google Sheets or Supabase for real-time oversight

- Language detection and language-specific moderation policies or assets

- Import the template into your n8n instance

- Configure your API keys and Supabase project

- Connect your streaming platform or bot to the webhook URL

- Monitor your Google Sheets log and refine your prompts and policies

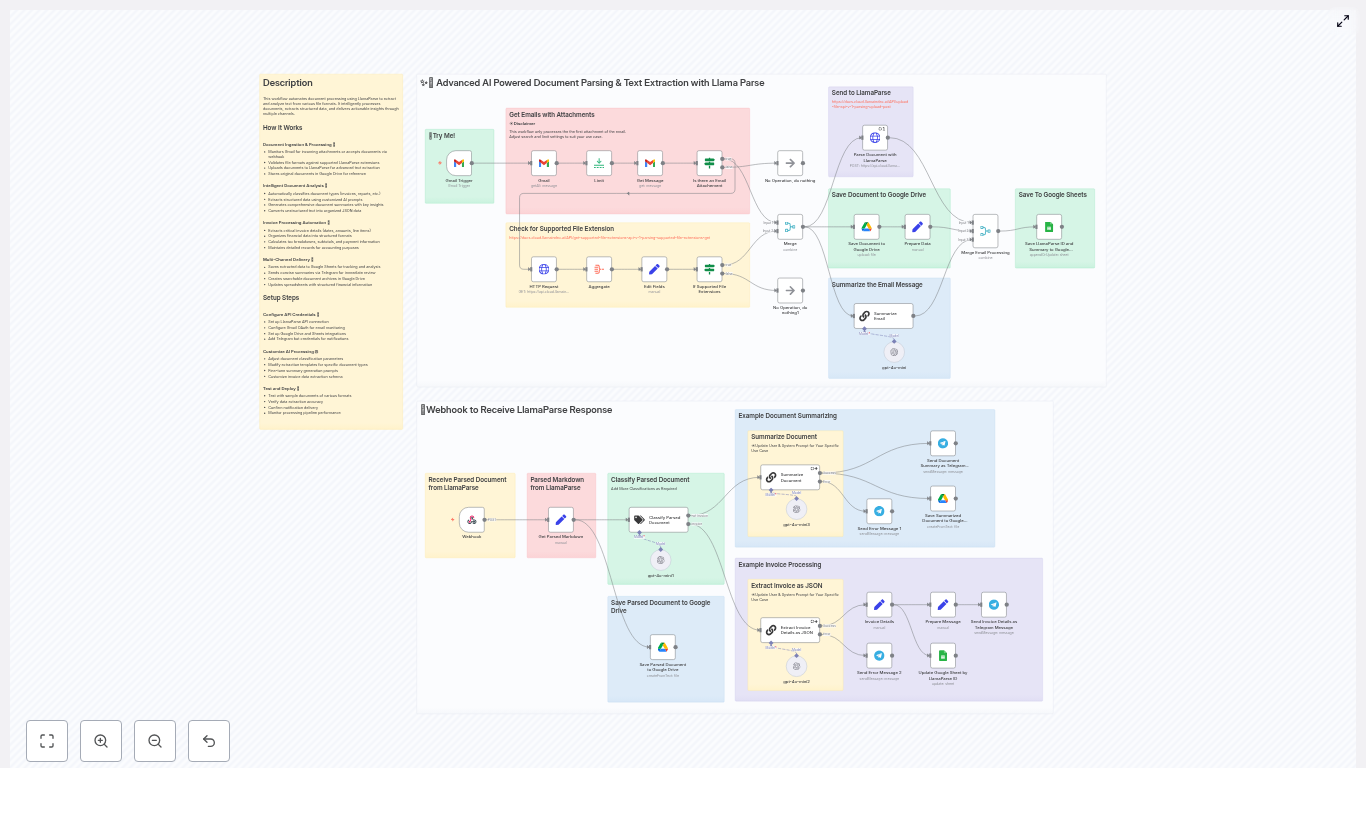

- Watches your Gmail for new emails with attachments

- Checks if the attachment can be parsed by LlamaParse

- Uploads supported files to LlamaParse for AI document parsing

- Receives parsed markdown or JSON back via an n8n webhook

- Decides whether the document is an invoice or something else

- Either extracts structured invoice data as JSON or creates a smart summary

- Saves everything in Google Drive, updates Google Sheets, and notifies you on Telegram

- Detect new emails with attachments as they arrive

- Upload only supported file types to LlamaParse

- Parse AI-generated markdown or JSON responses

- Trigger multiple downstream actions in parallel

- Handle many file formats automatically, without guessing what each attachment is

- Extract structured invoice and transactional data using tailored LLM prompts

- Keep full audit trails by storing raw files and summaries in Google Drive

- Feed Google Sheets with clean, consistent rows for reporting and accounting

- Alert your team in real time via Telegram when new documents are processed

- Receive invoices, receipts, or contracts by email

- Need structured data for accounting, analytics, or internal systems

- Want summaries instead of reading every long document yourself

- Prefer to keep everything organized in Google Drive and Google Sheets

- Like getting quick Telegram notifications instead of digging through your inbox

- Gmail Trigger listens for new messages and fetches attachments.

- The workflow checks the file extension against LlamaParse’s supported types.

- If supported, it uploads the file to LlamaParse using an HTTP multipart request and includes an n8n webhook URL.

- LlamaParse parses the document asynchronously and posts the result back to the webhook.

- n8n then classifies the parsed markdown as invoice or non-invoice.

- Invoices go through a strict JSON extraction flow, while other docs go through a summarization flow.

- Finally, the workflow saves artifacts in Google Drive, updates Google Sheets, and sends Telegram notifications.

has:attachment- A specific sender email address or domain

- file is the binary content of the attachment, often referenced as

file0in n8n - webhook_url is the URL of your n8n Webhook node that will receive the parsed result

- accurate_mode can be enabled when you care more about precision than speed

- Parsed markdown that represents the document content

- Any structured JSON returned by LlamaParse

- A specialized invoice extraction flow with strict JSON output

- A general summarization flow for non-invoice content

invoice_detailstransactionspayment_detailsinvoice_summary- And other invoice-specific sections you define

- Key insights or highlights

- Recommended actions or next steps

- Sent to a Telegram channel so your team sees new documents as they are processed

- Saved to Google Drive as text files for future reference

- The original email attachment

- The parsed markdown

- Summaries or extracted JSON content

- Job ID

- Statement date

- Organization name

- Subtotal

- GST or other tax values

- Payment references and totals

- Validate file types first. Always check against LlamaParse’s supported file extensions before uploading. This saves API usage and avoids avoidable errors.

- Use asynchronous webhooks for large files. Let LlamaParse process in the background and call your n8n webhook when done, instead of blocking a single request.

- Keep prompts strict for structured JSON. When extracting invoices or transactional data, define a schema, specify field types, and ask for consistent number formatting.

- Always keep the original file in Drive. This helps with compliance, audits, and investigating any parsing discrepancies.

- Add retry logic around external calls. For critical steps like LlamaParse uploads or Google Sheets updates, include retries to handle temporary network issues.

- Log and alert on errors. If parsing fails or an API call returns an error, send a message to a Telegram or Slack channel so a human can review it quickly.

- LlamaParse API keys

- Gmail OAuth credentials

- Google Drive and Google Sheets credentials

- Telegram bot tokens

- Invoice processing and AP automation – Extract line items, taxes, payment references and send them into your accounting system or finance dashboards.

- Expense receipt parsing – Handle employee reimbursement documents and feed structured data into your expense management process.

- Contract summarization and clause extraction – Give legal or operations teams quick overviews of long agreements.

- Customer-submitted forms and PDFs – Turn unstructured attachments into clean, structured records for CRMs or internal tools.

- Validate financial values. Check for suspicious numbers like negative totals or wildly out-of-range amounts.

- Reconcile totals. Compare computed totals from line items with the extracted

final_amount_dueor similar fields and flag mismatches. - Monitor workflow health. Keep an eye out for unauthorized errors, quota issues, or changes in LlamaParse’s supported formats.

- Adjust prompts to match your local tax rules, such as GST or PST distinctions

- Extend the invoice JSON schema with fields like booking numbers, event details, or container deposits

- Add downstream integrations such as ERP systems, QuickBooks, or custom databases

- Import the n8n template into your n8n instance.

- Set up credentials for LlamaParse, Gmail, Google Drive, Google Sheets, and Telegram.

- Run a few test emails with sample attachments to validate parsing and data mapping.

- Start small, limit the input sources and file types at first, then expand once you are happy with the extraction quality.

- Queries Edamam for recipes based on your preferences

- Picks a random set of matching recipes (or based on your criteria)

- Builds a simple HTML email with links

- Sends it on a schedule so recipes just show up in your inbox

- Save time – no more 20-minute “quick search” that somehow eats half your evening.

- Plan meals more easily – get a daily or weekly digest that fits your dietary rules.

- Keep variety – randomization keeps you from eating the same pasta 4 nights in a row.

- Use your tools smartly – combine cron scheduling, API calls, and HTML templating in n8n.

- Runs on a schedule using a Cron node (daily, weekly, whenever-you-like).

- Reads your search criteria like ingredients, diet, health filters, calories, and time.

- Prepares query parameters, including optional random Diet / Health selection.

- Asks Edamam how many recipes match your criteria.

- Picks a random window of results, then fetches that specific set of recipes.

- Builds an HTML email listing each recipe with a clickable link.

- Sends the email using your SMTP provider (Gmail, SendGrid, etc.).

- n8n instance – cloud or self-hosted, whichever you prefer.

- Edamam Recipe Search API credentials –

app_idandapp_key. - SMTP credentials – Gmail, SendGrid SMTP, or another email provider.

- Basic n8n familiarity – especially with nodes like Set, Function, HTTP Request, Cron, and Email.

- Cron node fires on your schedule.

- Set node stores your recipe preferences and API keys.

- Function node builds query values and handles random diet/health logic.

- HTTP Request asks Edamam how many recipes match.

- Set + Function decide which slice of results to fetch.

- HTTP Request fetches the actual recipes.

- Function builds an HTML email body with recipe links.

- Email node sends everything to your inbox.

- Daily at 10:00 for a “what’s for dinner tonight” email.

- Every Monday for weekly meal planning.

- RecipeCount – how many recipes you want per email.

- IngredientCount – maximum number of ingredients per recipe.

- CaloriesMin / CaloriesMax – calorie range.

- TimeMin / TimeMax – prep/cook time limits.

- Diet – for example,

balanced,low-carb, orrandomif you want n8n to surprise you. - Health – for example,

vegan,gluten-free, orrandom. - SearchItem – main search term like “chicken”, “tofu”, “pasta”, etc.

- AppID / AppKey – your Edamam credentials (ideally stored securely in n8n credentials).

- It converts your min/max values into Edamam-friendly ranges like

300-700for calories or time. - If you typed

randomfor Diet or Health, it picks one from a predefined list. q– your SearchItem.app_idandapp_key– your Edamam credentials.ingr– max ingredient count.diet– diet filter.calories– calorie range from the Function node.time– time range from the Function node.fromandto– a small window, for examplefrom=1,to=2.- RecipeCount – the total count from Edamam’s response.

- ReturnCount – how many recipes you want to fetch for this run (your per-email count).

- Picks a random

fromvalue somewhere inside the totalRecipeCount. - Sets

toasfrom + ReturnCountso you get a block of recipes starting at that offset. - Recipe labels (titles).

- shareAs links for sharing or opening the recipe.

- images.

- Nutritional info and more.

- Images

- Calories per serving

- Short ingredient lists

- Use your SMTP credentials (Gmail, SendGrid SMTP, or another provider).

- Set a dynamic subject line, for example including diet, health, calories, or time ranges.

- Insert the HTML body from the previous Function node using an expression (pointing to

emailBody). - Do not hard-code API keys in Function nodes. Store Edamam credentials in n8n credentials or environment variables and reference them securely.

- Respect Edamam rate limits. If you run the workflow frequently, consider adding delays or caching results.

- Validate responses before using fields like

countandhits. This avoids runtime errors if the API returns something unexpected. - Check your random ranges. Ensure

fromandtodo not exceed Edamam’s allowed limits or the totalRecipeCount. - Empty results

- Try a broader SearchItem.

- Relax filters like time or calories.

- Use a different Diet or Health setting if you are being very strict.

- Authentication errors

- Double check your

app_idandapp_key. - Confirm the credentials are active in your Edamam dashboard.

- Double check your

- SMTP send failures

- Test your email credentials in another SMTP client.

- Review n8n logs for the full error payload.

- Node errors parsing responses

- Add basic guard checks like

if (!items[0].json.hits)

- Add basic guard checks like

- Copying and pasting transaction details into QuickBooks

- Trying to remember how you classified a similar payment last month

- Digging through spreadsheets or email threads to confirm a customer or order ID

- Double checking everything because one typo can throw off your books

- Let software handle the repetitive work so you can focus on strategy, customers, and growth

- Capture your best decisions once, then reuse them automatically for similar cases

- Build workflows that get smarter over time using context and history

- Contextual memory using Supabase as a vector store, so your workflow can store and search transaction-related context

- Smart enrichment and classification that uses historical data to interpret new Stripe events more accurately

- LLM-driven decision making with OpenAI, plus logging to Google Sheets so you can review and refine your process

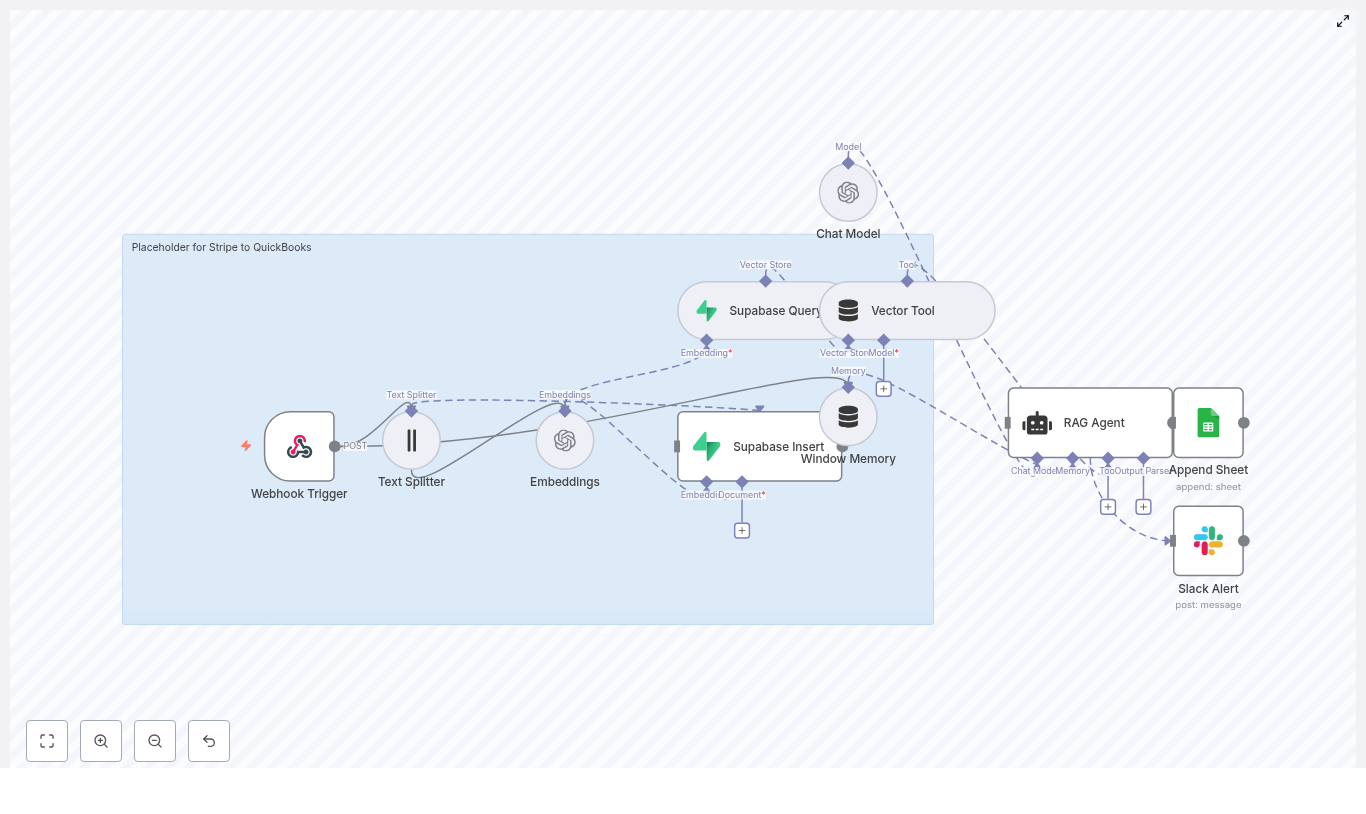

- Webhook Trigger receives the Stripe event

- Text Splitter prepares long text for embeddings

- Embeddings convert text into vectors

- Supabase Insert / Query stores and retrieves contextual vectors

- Window Memory + Vector Tool give the agent short-term and long-term context

- Chat Model + RAG Agent interpret the event and decide on QuickBooks mappings

- Append Sheet (Google Sheets) logs the outcome for traceability

- Slack Alert notifies you if something goes wrong

chunkSize = 400chunkOverlap = 40- Supabase Insert writes new vectors into your vector store so future events can reference them

- Supabase Query performs similarity searches to retrieve the most relevant past entries

- Mapping Stripe payments to the right QuickBooks accounts

- Identifying recurring customers or subscription patterns

- Reusing previous classification and mapping decisions

- Window Memory keeps track of the immediate conversational state and recent messages

- Vector Tool connects the agent to your Supabase vector store, so it can pull in relevant documents or historical entries

- Classify events as income, refunds, fees, or other categories

- Recommend QuickBooks mappings such as customer, account, or tax code

- Generate structured outputs ready for downstream processing

- Timestamp

- Stripe event ID

- Classification (income, refund, fee, etc.)

- Recommended QuickBooks mapping

- Status or notes

- An n8n instance, either self-hosted or cloud

- An OpenAI API key for embeddings and the chat model

- A Supabase project with vector store enabled and an API key

- Google Sheets OAuth2 credentials with access to your target sheet

- A Slack API token to post alerts to your chosen channel

- A Stripe webhook configured to send events to your n8n webhook endpoint with signature verification enabled

- Serve your n8n webhooks over HTTPS and verify Stripe signatures to block unauthorized requests

- Minimize stored PII in your vector store, and use anonymized or hashed IDs where possible

- Apply role-based access control for Supabase, Google Sheets, and Slack credentials

- Maintain an audit trail through logging, which the Google Sheets Append node already supports

- Send synthetic Stripe events to your webhook and verify text chunking, embeddings, and Supabase inserts

- Check that the RAG agent returns consistent QuickBooks mapping recommendations for different scenarios such as new customers, refunds, or fees

- Confirm that successful runs append correct rows to Google Sheets and that failures trigger Slack alerts as expected

- Batch or debounce events to avoid redundant embeddings and reduce unnecessary processing

- Use smaller or lower-cost embedding models for routine data, and reserve higher-cost models for complex reasoning or edge cases

- Prune or compress old vectors in Supabase once they fall outside your retention window, especially if historical context is no longer necessary

- Direct QuickBooks API integration: After the RAG agent recommends mappings, call the QuickBooks Online API to automatically create invoices, payments, or refunds.

- Human-in-the-loop review: For ambiguous or high-value transactions, add an approval step that notifies accounting via email or Slack, and only proceeds after a human signs off.

- Enriched customer profiles: Merge Stripe metadata with CRM data to improve customer matching and classification accuracy.

- Import the Stripe to QuickBooks n8n template into your workspace

- Connect your credentials for OpenAI, Supabase, Google Sheets, Slack, and Stripe

- Adjust chunk sizes or the embedding model if you want to fine tune cost and performance

- Send a few test webhooks, then inspect your Supabase entries and Google Sheets logs to confirm everything is working

- Reduces manual data entry and errors

- Creates consistent, reviewable recommendations for QuickBooks

- Captures an auditable trail of every decision

- Can be extended as your needs grow

Log Twitter Mentions in Notion with n8n

Log Twitter Mentions in Notion with n8n

This guide documents a production-ready n8n workflow template that captures Twitter mentions via webhook, enriches them with semantic embeddings, indexes them in Weaviate, applies a RAG (retrieval-augmented generation) agent for interpretation, and finally persists the processed data to Google Sheets or Notion. It also includes error reporting to Slack.

The focus here is on a detailed, technical walkthrough for users already familiar with n8n concepts such as nodes, credentials, and execution flows.

1. Workflow overview

The template implements an automated pipeline that:

This makes it suitable for teams that need a reliable way to log Twitter mentions, augment them with vector search, and apply LLM-based reasoning before storing them in a knowledge or tracking system.

2. System architecture

2.1 Core components

2.2 Data flow

3. Prerequisites and external services

3.1 Required services

3.2 Security considerations

4. Template import and global configuration

4.1 Importing the JSON template

Import the provided JSON template into your n8n instance. The workflow graph, node connections, and default parameters are already configured. You mainly need to:

4.2 Key node parameters to review

5. Node-by-node breakdown

5.1 Webhook Trigger

The Webhook Trigger node exposes an HTTP endpoint to receive Twitter mention events.

The resulting webhook URL is typically:

https://your-n8n-domain/webhook/log-twitter-mentions-in-notionConfigure your Twitter listener (Twitter API v2 filtered stream or third-party bridge) to send JSON payloads to this URL.

Example test payload:

{ "tweet_id": "123456", "author": "@example", "text": "Thanks for the tip!", "timestamp": "2025-01-01T12:00:00Z"

}Use this payload to validate the webhook and downstream nodes before connecting real Twitter data.

5.2 Text Splitter

The Text Splitter node segments the tweet content into smaller chunks so that embedding models and the RAG agent can process long texts or threads effectively.

Typical configuration goals:

For threaded tweets or long replies, you may increase overlap so that important context is not lost across chunks. Ensure that the input field is mapped to the tweet text (for example, text from the webhook payload).

5.3 Embeddings (Cohere)

The Embeddings node calls Cohere to generate semantic vectors for each text chunk.

Important notes:

5.4 Weaviate Insert

The Weaviate Insert node writes the embedding vectors and accompanying metadata into a Weaviate index.

Typical metadata fields might include tweet ID, author, timestamp, and original text. Ensure that these fields are mapped correctly in the node parameters so you can later query by tweet ID or other attributes.

If your Weaviate instance does not yet have the schema for this index, make sure to create it with a vector dimension that matches the Cohere embedding model you are using.

5.5 Weaviate Query

The Weaviate Query node retrieves relevant context vectors from the index for the current mention.

Common use in this workflow:

If you add deduplication logic later, you can also query by tweet_id before inserting new records.

5.6 Window Memory

The Window Memory node maintains a short history of interactions or context for the RAG agent. It is used to:

The memory window size should be chosen so that you do not exceed token limits when combined with retrieved Weaviate context and the current tweet text.

5.7 Chat Model and RAG Agent

The RAG Agent node is backed by a Chat Model (Anthropic in the template) and receives:

The RAG Agent uses a system message to guide its behavior. The template includes:

"systemMessage": "You are an assistant for Log Twitter Mentions in Notion"You are expected to refine this prompt so the agent outputs structured data that is easy to parse, for example:

That structure is critical for clean mapping into Google Sheets or Notion properties.

5.8 Append Sheet (Google Sheets)

The Append Sheet node is the default persistence layer. It appends a new row to a specified Google Sheet for each processed mention.

Map the RAG Agent output and original tweet fields into columns such as:

If you later switch to Notion, this node will typically be replaced, but the mapping logic remains conceptually similar.

5.9 Slack Alert

The Slack Alert node is connected via the workflow’s onError path. Whenever a node fails (for example, due to API errors, rate limits, or invalid input), this node posts a message to the configured Slack channel.

For better debugging, customize the Slack message to include fields such as:

This makes it easier to correlate Slack alerts with specific executions in n8n.

6. Twitter integration and webhook setup

6.1 Connecting Twitter to n8n

Use either the Twitter API v2 filtered stream or a third-party automation tool to monitor mentions of your account. Configure that service to send a POST request to:

https://your-n8n-domain/webhook/log-twitter-mentions-in-notionThe payload structure should at minimum include:

Run a test with the sample payload provided earlier to ensure the workflow triggers correctly and downstream nodes receive the expected fields.

7. Vector store configuration (Cohere + Weaviate)

7.1 Embeddings configuration

In n8n, configure your Cohere credentials and set the Embeddings node to use embed-english-v3.0, as in the template. If you choose a different model, verify that:

7.2 Weaviate configuration

For the Weaviate Insert and Query nodes:

If you do not yet have a Weaviate instance, you can:

Weaviate schema errors typically indicate mismatches in class names, index names, or vector dimensions. Check these carefully if you encounter issues.

8. RAG agent behavior and prompt design

8.1 System prompt configuration

The system message defines how the RAG Agent interprets mentions. The template uses a simple system message:

"You are an assistant for Log Twitter Mentions in Notion"For better structure and reliability, refine the prompt so the model consistently outputs fields that you can parse, such as:

Structured outputs reduce the need for complex parsing nodes downstream and improve the quality of your Notion or Sheets records.

8.2 Using retrieved context

The RAG Agent uses:

This enables more informed decisions about whether a mention needs follow-up or how it should be categorized. If you find the agent output irrelevant, consider:

Supply Chain Delay Monitor (n8n Workflow)

Supply Chain Delay Monitor: n8n Workflow Reference

Complex supply chains generate a constant stream of shipment updates, carrier exception messages, and supplier notifications. Manually reviewing these signals to detect delays is inefficient and often too slow to prevent downstream impact. This reference-style guide describes an n8n workflow template, Supply Chain Delay Monitor, that automates delay detection and classification using embeddings, a vector database, and an LLM-based agent, with results logged into Google Sheets for operational use.

The documentation below is organized for technical and operations engineers who want a clear view of the workflow architecture, node configuration, and customization options.

1. Workflow Overview

The Supply Chain Delay Monitor is an end-to-end n8n workflow that:

This pipeline is designed for:

2. High-Level Architecture

The workflow combines n8n nodes with several external services:

End-to-end flow:

3. Node-by-Node Breakdown

3.1 Webhook Node (Event Ingestion)

Role: Entry point for all supply chain events.

Typical configuration:

Data flow: The raw JSON body from the POST request is passed to downstream nodes. The unstructured text field is the primary input for embeddings, while structured fields become metadata in the vector store and in the final log.

Security considerations:

3.2 Splitter Node (Text Chunking)

Role: Converts long or composite carrier messages into smaller text segments suitable for embedding.

Typical parameters:

This approach:

Edge cases:

3.3 Embeddings Node (Cohere or Equivalent)

Role: Generates numeric vector representations for each text chunk.

Provider: Cohere is used in the template, but any compatible embeddings provider can be substituted with equivalent configuration.

Configuration highlights:

Output: Each input chunk produces a vector (array of floats) plus any additional metadata the node returns. These vectors are then associated with shipment-level metadata in the next step.

Error handling:

3.4 Insert + Vector Store Node (Supabase)

Role: Persists embeddings and associated metadata in a vector database backed by Supabase.

Key configuration elements:

Benefits:

Index management notes:

3.5 Query + Tool Nodes (Context Retrieval)

Role: Retrieve semantically similar historical events and expose them as a “tool” to the AI Agent.

Query node configuration:

Tool node role:

This pattern allows the Agent to reason over both the current event and a curated set of past events retrieved via vector search.

3.6 Memory Node (Conversation State)

Role: Maintain short-term conversational or workflow context across multiple related events.

Usage:

Typical configuration: